In NetWorker, staging refers to moving savesets from one piece of media to another. The move operation is two-fold, consisting of:

- A clone operation (source -> target)

- A delete operation (source)

Both of these operations are done automatically as part of the staging process. The delete is the most complete delete operation that the source media supports: for tape-based media, this means deleting the media database entries for the saveset(s) staged; for disk, it means both the media database delete and the filesystem delete of the saveset from the disk backup unit.

There are a few reasons to use staging policies in NetWorker:

- To free up space on disk backup units.

- To move backups/clones from a previous media type to a new media type.

- To move backups/clones from older, expiring media to new media for long-term retention.

The latter two reasons usually refer to tape -> tape staging, which these days is by far the less common use of staging in NetWorker. The most common use is now managing used space on disk backup units, and that’s what we’ll consider here.

There are two ways you can stage within NetWorker: either as a scheduled task, or as a manual task.

Scheduled Staging

Scheduled staging occurs by creating one or more staging policies. Typically in a standard configuration for disk backup units, you’ll have one staging policy per disk backup unit. For example:

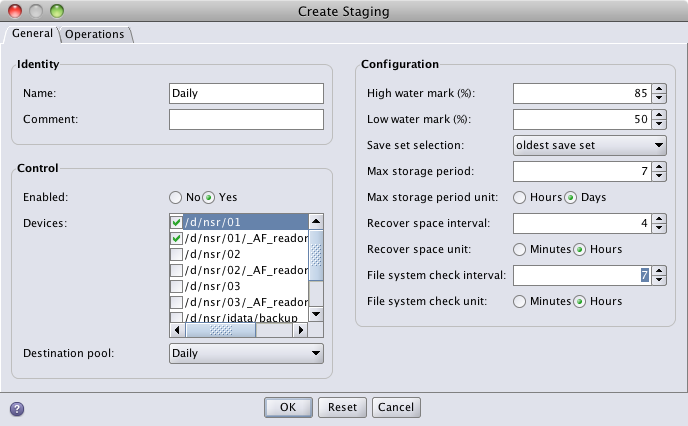

The staging policy consists of settings that define:

- Name/Comment – Identification details for the staging policy, as you’d expect.

- Enabled – Default is no, set to Yes if you want the staging policy to run. (Note that you can’t start a disabled staging policy manually – or you couldn’t, last time I checked.)

- Which disk backup units (devices) will be read from. Choose both the read-only and the read-write version of the disk backup units. (Unless there are significant issues, NetWorker will always read from the read-only version of the disk backup unit anyway.)

- Destination pool – where savesets will be written to.

- High water mark – this is expressed as a percentage of the total capacity of the filesystem that the disk backup unit resides on (which is why each disk backup unit should be on its own filesystem!). It basically means “if the occupied space in savesets reaches <nominated> percent, then start staging data off”.

- Low water mark – again, a percentage of the total capacity of the filesystem that the disk backup unit resides on. If staging is initiated because the high water mark was reached, then staging will continue until the used space on the disk backup unit is equal to or less than the low water mark.

- Save set selection – will be one of oldest/largest/youngest/smallest. For most disk backup units, the choice is normally between oldest saveset or largest saveset.

- Max storage period / period unit – defines the maximum amount of time, in either days or hours, that savesets can remain on disk before they must be staged out. This will occur irrespective of any watermarks (and watermark-based staging, similarly, will occur irrespective of any maximum storage period).

- Recover space interval / interval unit – defines how frequently NetWorker will check to see if there are any recyclable savesets that can be removed from disk. (Aborted savesets, while checked for in this, should be automatically cleaned up when they are aborted.)

- Filesystem check interval / interval unit – defines how frequently NetWorker will check to see whether it should actually perform any staging.

While there are a lot of numbers/settings in a small dialog, they actually all make sense. For instance, let’s consider the staging policy defined in the above picture. It shows a policy called “Daily” that will move savesets out from /d/nsr/01 to the Daily pool, with the following criteria:

- If the disk backup unit becomes 85% full, it will commence staging until it has moved enough savesets to return the disk backup unit to 50% capacity.

- Any saveset that is older than 7 days will be staged.

- Whenever savesets are staged, they will be picked in order of oldest to newest.

- It will check for recyclable/removable savesets every 4 hours.

- It will check to see if staging can be run every 7 hours.

Note that if the disk backup unit becomes full, staging by watermark will be automatically kicked off, even if the 7 hour wait between staging checks hasn’t been reached.
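To make the interaction between the watermarks and the maximum storage period a bit more concrete, here’s a rough Python sketch of the selection logic for a policy like “Daily”. This is only a simplified model of the behaviour described above, not NetWorker’s actual implementation: the `SaveSet` class, the `select_for_staging` function and the assumption that used space is simply the sum of saveset sizes are all mine, for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SaveSet:
    # Hypothetical, simplified view of a saveset on a disk backup unit.
    ssid: str
    size_gb: float
    age_days: float


def select_for_staging(savesets, capacity_gb, high_pct=85, low_pct=50, max_age_days=7):
    """Return the savesets a 'Daily'-style policy would stage, oldest first."""
    oldest_first = sorted(savesets, key=lambda s: s.age_days, reverse=True)

    # The max storage period applies irrespective of any watermark.
    chosen = [s for s in oldest_first if s.age_days > max_age_days]
    picked = {s.ssid for s in chosen}

    # Assumption: used space is just the sum of the saveset sizes.
    used_gb = sum(s.size_gb for s in savesets)
    if used_gb / capacity_gb * 100 >= high_pct:
        # High water mark reached: keep staging until we are at or below the low water mark.
        target_gb = capacity_gb * low_pct / 100
        remaining_gb = used_gb - sum(s.size_gb for s in chosen)
        for s in oldest_first:
            if remaining_gb <= target_gb:
                break
            if s.ssid in picked:
                continue
            chosen.append(s)
            picked.add(s.ssid)
            remaining_gb -= s.size_gb
    return chosen
```

With a 100 GB disk backup unit that is 90% full, this picks the saveset older than 7 days first, then continues through the next-oldest savesets until the unit would be at or below 50%. On a mostly empty unit, only the savesets past the maximum storage period are picked.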

One important note here: if you have multiple disk backup units with volumes labelled into the same pool, you can choose to have them all in one staging policy, have one staging policy per disk backup unit, or have a series of staging policies with one or more disk backup units in each policy. There are risks/costs associated with each option. If you have too many defined under a single staging policy, then staging becomes very “single-threaded” in terms of disks read from; this can significantly slow down the staging policy. Alternatively, if you have one staging policy per disk backup unit, but the number of disk backup units exceeds the number of tape drives, you can end up with significant contention between staging, cloning and tape-recovery operations. It’s a fine balancing act.

Manual staging

Manual staging is accomplished by running the nsrstage command. That, incidentally, is what happens in the background for scheduled staging – the staging policy runs, evaluates what needs to be staged, then runs nsrstage.

The standard way of invoking nsrstage is:

# nsrstage -b destinationPool -m -v -S ssid/cloneid

or

# nsrstage -b destinationPool -m -v -S -f /path/to/file.txt

In the first, you’re staging a single saveset instance. NOTE: You must always specify ssid/cloneid. If you specify only the saveset ID, then when NetWorker cleans up at the end of the staging operation, it will delete all other instances of the saveset. So if you’ve got a clone, you’ll lose reference to the clone!

In the second instance, you’re staging multiple ssid/cloneid combinations, specified one per line in a plain text file.

(There are alternate mechanisms for calling nsrstage to either clean the disk backup unit, or stage by volume. These aren’t covered here.)
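If you’re building that text file from mminfo output, a small helper can take care of the formatting and guard against accidentally staging by bare ssid. The sketch below assumes you’ve captured the output of something like `mminfo -q "volume=<diskVolume>" -r "ssid,cloneid"` as text; the function names and the exact header layout are my assumptions, and the code only builds the nsrstage argument list (in the same form shown above) rather than running it.

```python
import re

# An entry must be ssid/cloneid; a bare ssid is rejected on purpose.
PAIR = re.compile(r"^\d+/\d+$")


def parse_mminfo_pairs(report_text):
    """Turn whitespace-separated 'ssid cloneid' rows into 'ssid/cloneid' strings."""
    pairs = []
    for line in report_text.splitlines():
        fields = line.split()
        # Skip the header row, blank lines and anything that isn't two numbers.
        if len(fields) != 2 or not all(f.isdigit() for f in fields):
            continue
        pairs.append(f"{fields[0]}/{fields[1]}")
    return pairs


def write_stage_file(pairs, path):
    """Write one ssid/cloneid per line, refusing entries without a cloneid."""
    for pair in pairs:
        if not PAIR.match(pair):
            raise ValueError(f"not an ssid/cloneid pair: {pair!r}")
    with open(path, "w") as fh:
        fh.write("\n".join(pairs) + "\n")


def build_nsrstage_command(pool, list_file):
    """Argument list matching the file-based invocation shown above."""
    return ["nsrstage", "-b", pool, "-m", "-v", "-S", "-f", list_file]
```

The resulting list can be handed to `subprocess.run` on the NetWorker server once you’ve reviewed the file contents; I’d suggest eyeballing the file first, given what staging deletes.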

As with all pools these days in NetWorker, you can either have the savesets staged with their original retention period in place, or stage them to a pool with a defined retention policy, in which case their retention policy will be adjusted accordingly when they are staged.

Scripting

You can of course script staging operations; particularly when running manually you’ll likely first run mminfo, then run nsrstage against a bunch of savesets. Alternatively, you may want to check out dbufree, a utility within the IDATA Tools utility suite; this offers considerable enhancements over regular staging, including (but not limited to):

- Stage savesets selected on a disk backup unit by any valid mminfo query (e.g., stage by group…)

- Specify how much space you want to free up, rather than watermark based

- Stage in chunks – stage enough space to free up a nominated amount of capacity, stop to allow reclamation to take place, then start staging again.

- Only stage savesets that have clones.

- Enhanced saveset order options – in addition to biggest/smallest/oldest/newest, there are options to order savesets by client (to logically assist in speeding up recovery from multiple backups) in any of the ‘primary’ sort methods as a sub-order.
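If you’re scripting this yourself, the “only stage savesets that have clones” idea is easy enough to approximate. The following is a minimal sketch of that one filter, not how dbufree implements it: it assumes you’ve already gathered (ssid, cloneid, volume) rows for all instances of the savesets you care about, e.g. from an mminfo report, and the function name and the sample volume names are hypothetical.

```python
from collections import defaultdict


def disk_instances_with_clones(rows, disk_volume):
    """Return 'ssid/cloneid' for instances on disk_volume that also exist elsewhere.

    rows: list of (ssid, cloneid, volume) tuples covering every known instance.
    """
    volumes_by_ssid = defaultdict(set)
    for ssid, _cloneid, volume in rows:
        volumes_by_ssid[ssid].add(volume)

    return [
        f"{ssid}/{cloneid}"
        for ssid, cloneid, volume in rows
        # Keep only the disk instance, and only if another volume holds a copy.
        if volume == disk_volume and len(volumes_by_ssid[ssid]) > 1
    ]
```

For example, given one saveset with instances on both the disk backup unit and a tape, and a second saveset that exists only on disk, only the first saveset’s disk instance is returned.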

Cloning

A discussion of staging wouldn’t be complete without mentioning cloning. In an ideal configuration for disk backup units, you should:

- Back up to disk

- Clone to tape

- (Later), stage to tape.

This can create some recovery implications. I’ve covered that previously in this post.

Hi,

I have one question: what will happen to the browse/retention policy of savesets after staging to the archive pool? I think the savesets will not have browse/retention policies. Correct me if I’m wrong.

Best Regards,

Damiano

I’m not sure and I’ve just moved so I don’t yet have a lab to test in.

I’d guess though that browse would be dropped (since Archive pools keep no indices), and retention would be extended to ‘forever’.