When it comes to backup and data protection, I like to think of myself as being somewhat of a stickler for accuracy. After all, without accuracy, you don’t have specificity, and without specificity, you can’t reliably say that you have what you think you have.

So on the basis of wanting vendors to be more accurate, I really do wish vendors would stop talking about archive when they actually mean hierarchical storage management (HSM). It confuses journalists, technologists, managers and storage administrators, and (I must admit to some level of cynicism here) appears to be driven mainly by a belief that “HSM” sounds either too scary or too complex.

HSM is neither scary nor complex – it’s just a variant of tiered storage, which is something that any site with 3+ TB of presented primary production data should be at least aware of, if not actively implementing and using. (Indeed, one might argue that HSM is the original form of tiered storage.)

By “presented primary production”, I’m referring to high-speed, high-cost storage that is available to the OS and presented in high-performance LUN configurations. At this scale, storage costs are high enough that tiered storage solutions start to make sense. (Bear in mind that 3+ TB of presented storage in such configurations may represent between 6 and 10 TB of raw high-speed, high-cost storage. Thus, while it may not sound all that expensive initially, the disk-to-data ratio increases the cost substantially.) It should be noted that whether that tiering is done with a combination of different disk speeds and RAID levels, with disk versus tape, or with some combination of the two, is largely irrelevant to the notion of HSM.
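To make the disk-to-data ratio concrete, here is a small worked calculation. It is only a sketch: the usable fractions are hypothetical assumptions chosen to reproduce the 6 to 10 TB range quoted above (RAID 10 mirroring alone halves usable capacity; the second layout additionally assumes only about 60% of each disk is used, for performance), and `raw_capacity_tb` is an illustrative helper, not part of any product.

```python
def raw_capacity_tb(presented_tb: float, usable_fraction: float) -> float:
    """Raw disk capacity needed to present `presented_tb` of storage, where
    `usable_fraction` is the share of raw capacity that ends up presented
    after RAID protection and any other layout overheads."""
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return presented_tb / usable_fraction


# Hypothetical layouts; the fractions are illustrative assumptions only.
layouts = {
    "RAID 10": 0.5,                        # mirroring: half the raw capacity holds copies
    "RAID 10, short-stroked": 0.5 * 0.6,   # assumed: only ~60% of each disk is used
}

for name, fraction in layouts.items():
    raw = raw_capacity_tb(3.0, fraction)
    print(f"{name}: {raw:.1f} TB raw for 3 TB presented")
```

This prints 6.0 TB and 10.0 TB respectively: the same 3 TB of presented data costs two to more than three times as much raw disk.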

HSM is easy to understand and nothing to fear, and the difference between HSM and archive is just as easy to understand. It can even be explained with diagrams.

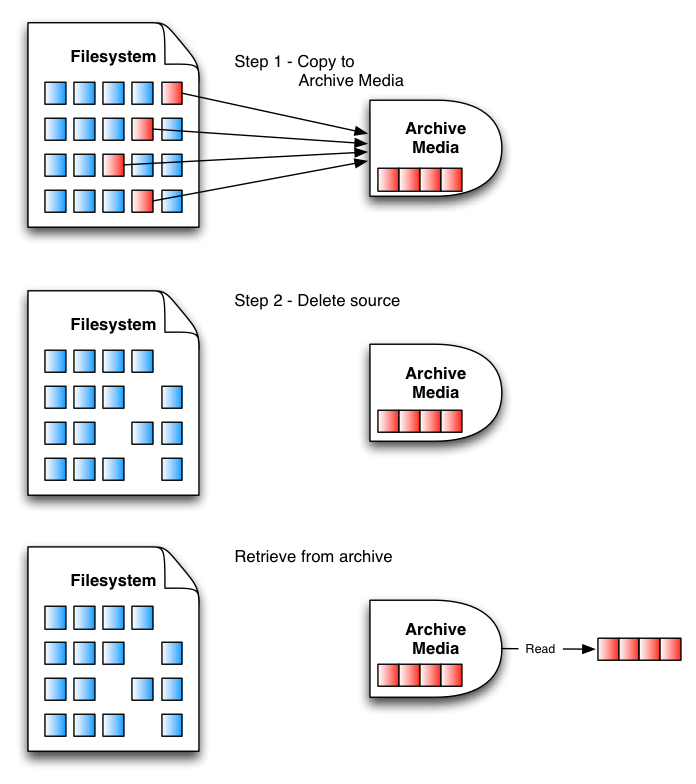

Here’s what archive looks like:

So, when we archive files, we first copy them out to archive media, then delete them from the source. Thus, if we need to access the archived data, we must read it back directly from the archive media. There is no reference left to the archived data on the filesystem, and data access must be managed independently from previous access methods.
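As a rough illustration of those two steps, the following Python sketch treats an ordinary directory as a stand-in for archive media (in practice this would be tape or another archive target). The function names `archive_file` and `read_archived` are hypothetical; the point is only that the source file is gone afterwards and retrieval has to go to the archive location directly.

```python
import shutil
import tempfile
from pathlib import Path


def archive_file(source: Path, archive_dir: Path) -> Path:
    """Archive as described above: copy to archive media, then delete the source.
    No stub or other reference is left on the original filesystem."""
    archive_dir.mkdir(parents=True, exist_ok=True)
    destination = archive_dir / source.name
    shutil.copy2(source, destination)  # step 1: copy out to archive media
    source.unlink()                    # step 2: delete from the source
    return destination


def read_archived(name: str, archive_dir: Path) -> bytes:
    """Retrieval goes directly to the archive; the original path is gone."""
    return (archive_dir / name).read_bytes()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "report.txt"
        src.write_text("quarterly figures")
        archive_file(src, Path(tmp) / "archive")
        print(src.exists())  # False: nothing remains at the original location
        print(read_archived("report.txt", Path(tmp) / "archive"))
```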

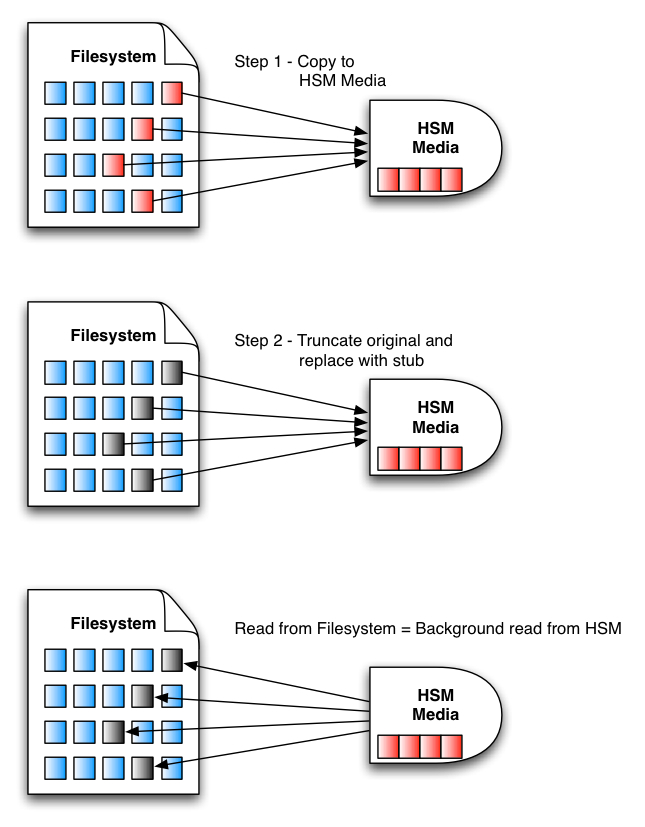

On the other hand, here’s what the HSM process looks like:

So when we use HSM on files, we first copy them out to HSM media, then delete (or truncate) the original file but put in its place a stub file. This stub file has the same file name as the original file, and should a user attempt to access the stub, the HSM system silently and invisibly retrieves the original file from the HSM media, providing it back to the end user. If the user saves the file back to the same source, the stub is replaced with the original+updated data; if the user doesn’t save the file, the stub is left in place.
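The stub mechanism can be sketched the same way. This is a simplified model, not how any particular HSM product works: real HSM systems typically intercept file access at the filesystem or operating system level, whereas here the interception is simulated by an explicit `read()` call, and the JSON stub format and `STUB_MARKER` value are assumptions made up for the example.

```python
import json
import shutil
import tempfile
from pathlib import Path

STUB_MARKER = "HSM-STUB-v1"  # hypothetical marker identifying a stub file


def migrate(path: Path, hsm_store: Path) -> None:
    """Copy the file out to HSM media, then replace the original's contents
    with a small stub of the same name recording where the data went."""
    hsm_store.mkdir(parents=True, exist_ok=True)
    target = hsm_store / path.name
    shutil.copy2(path, target)  # step 1: copy out to HSM media
    stub = {"marker": STUB_MARKER, "location": str(target)}
    path.write_text(json.dumps(stub))  # step 2: truncate and leave a stub


def is_stub(path: Path) -> bool:
    try:
        data = json.loads(path.read_text())
    except ValueError:  # not JSON or not text (UnicodeDecodeError is a ValueError)
        return False
    return isinstance(data, dict) and data.get("marker") == STUB_MARKER


def read(path: Path) -> bytes:
    """Reading a stub transparently recalls the data from HSM media.
    The stub itself is left in place."""
    if is_stub(path):
        location = json.loads(path.read_text())["location"]
        return Path(location).read_bytes()
    return path.read_bytes()


def save(path: Path, data: bytes) -> None:
    """Saving back to the same source replaces the stub with real data."""
    path.write_bytes(data)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        doc = Path(tmp) / "plan.doc"
        doc.write_bytes(b"original")
        migrate(doc, Path(tmp) / "hsm")
        print(is_stub(doc), read(doc))  # stub on disk, original data returned
        save(doc, read(doc) + b"+updated")
        print(is_stub(doc), read(doc))  # real data back in place
```

Note that `read()` leaves the stub in place, matching the behaviour described above: only `save()` replaces the stub with real data.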

Or if you’re looking for an even simpler distinction: archive deletes, HSM leaves a stub. If a vendor talks to you about archive, but their product leaves a stub, you can be sure that they actually mean HSM.

Honestly, these two concepts aren’t difficult, and they aren’t the same. In the never-ending quest to save user bytes, you’d think vendors would appreciate that it’s cheaper to refer to HSM as HSM rather than Archive. After all, that’s a 4-byte saving every time the correct term is used!

[Edit – 2009-09-23]

OK, so Scott Waterhouse has pointed out that the official SNIA definition of archive doesn’t mention having to delete the source files, so I’ll concede that I was being stubbornly NetWorker-centric in this blog article. I’ll accept that I’m wrong and (grudgingly, yes) be prepared to refer to HSM as archive. But I won’t like it. Is that a fair compromise? 🙂

I won’t give up on ILP though!

Well, this is a very “NetWorker” view of the problem of “archives”, since this is the way NW handles them.

But in my experience, the people using archives tend to take “archive” to mean the “final resting place” for the data.

And this is where HSM really shines. It becomes a really good data protection solution for archives (at least if we set aside the NW-centric view of what constitutes an archive).

Usually you get at least two copies of the data (good to have on tape), the ability to move the data around (to ensure it stays readable) and, in some cases, checksums as a safeguard against “data rot”.
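The checksum safeguard mentioned above might look something like the following sketch, which assumes a SHA-256 digest was recorded when the data was ingested. `sha256_of` and `verify_copies` are hypothetical helper names; real HSM products implement their own fixity checks.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large archived objects need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_copies(expected: str, copies: list[Path]) -> dict[Path, bool]:
    """Check each stored copy against the checksum recorded at ingest.
    A False entry flags a copy affected by 'data rot'; any copy that still
    matches could be used to repair it."""
    return {copy: sha256_of(copy) == expected for copy in copies}
```

Having two copies is what makes the check useful: a mismatch on one copy is only recoverable if another copy still verifies.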

But HSM alone does not maketh an archive. At a minimum you need some metadata handling; archives without metadata are worth next to nothing. Some sort of front-end is needed to turn an HSM system into a full-fledged archive solution.
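As a minimal sketch of the kind of metadata handling such a front-end might provide, the following keeps a catalog in SQLite alongside the HSM layer. The schema (owner, retention date, description) and the function names are assumptions for illustration only; a real archive front-end would carry far richer metadata.

```python
import sqlite3


def open_catalog(db_path: str = ":memory:") -> sqlite3.Connection:
    """Create a simple metadata catalog sitting alongside the HSM layer."""
    conn = sqlite3.connect(db_path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS objects (
               object_id    TEXT PRIMARY KEY,
               hsm_location TEXT NOT NULL,  -- where the HSM layer holds the data
               owner        TEXT,
               retain_until TEXT,           -- ISO 8601 date, e.g. '2015-12-31'
               description  TEXT
           )"""
    )
    return conn


def register(conn: sqlite3.Connection, object_id: str, hsm_location: str,
             owner: str, retain_until: str, description: str) -> None:
    with conn:  # commits the insert on success
        conn.execute(
            "INSERT INTO objects VALUES (?, ?, ?, ?, ?)",
            (object_id, hsm_location, owner, retain_until, description),
        )


def find_by_owner(conn: sqlite3.Connection, owner: str) -> list[str]:
    rows = conn.execute(
        "SELECT object_id FROM objects WHERE owner = ? ORDER BY object_id", (owner,)
    )
    return [row[0] for row in rows]


def retention_expired(conn: sqlite3.Connection, as_of: str) -> list[str]:
    # ISO 8601 dates compare correctly as plain strings.
    rows = conn.execute(
        "SELECT object_id FROM objects WHERE retain_until < ? ORDER BY object_id",
        (as_of,),
    )
    return [row[0] for row in rows]
```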

I think I’m entitled to some NetWorker-centric views given that this is “the NetWorker blog”; that being said, I do suggest that to a lot of people archive does mean complete removal from the original system. Therefore I’m not prepared to refer to HSM as archive, or to archive as HSM, for the sake of the discussion…

Still, I agree completely that HSM needs more than this to be fully usable for an organisation. Vendors have so far been concentrating on information discovery: full content indexing of the HSM region. If we look at EMC, for instance, their recent acquisition of Kazeon should continue to assist eDiscovery and content search against their HSM products (SourceOne, etc.). However, as HSM solutions continue to grow in size (indeed, as all data storage continues to grow, whether primary, secondary, tertiary or near-line), greater attention also needs to be paid to data visualisation.
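Full content indexing ultimately rests on an inverted index that maps words to the documents containing them. The following is a minimal, hypothetical sketch of that idea only; it says nothing about how Kazeon or SourceOne actually work, and `ContentIndex` is an illustrative name.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class ContentIndex:
    """Minimal inverted index: each term maps to the documents containing it."""

    def __init__(self) -> None:
        self._postings: dict[str, set[str]] = defaultdict(set)

    def add(self, doc_id: str, text: str) -> None:
        for term in tokenize(text):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> set[str]:
        """Return the documents containing every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return set()
        return set.intersection(*(self._postings.get(t, set()) for t in terms))
```

Because the index holds only terms and document IDs, searches can be answered without recalling stubbed data from lower tiers.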

Of course you are; it was not at all intended as criticism. I smiled as I visualized the archive commands before me 🙂

One thing I have encountered twice in the last week is the question “How do we make the HSM slow enough if we upgrade the hardware?” The problem these customers face is preventing “abuse” of the system. HSM systems may work very well, but as soon as one gets “too good”, end users start to use it as a cheap file server with an “unlimited” amount of space.

Like many data systems, HSM has the potential to be abused. Backup is similar: you get “smart” users who decide they can delete entire realms of data whenever they temporarily need some free space, knowing that the backup system will bring that data back. For HSM, though, my approach is that users shouldn’t be able to push something into lower-tier storage; migration needs to be managed by policy.
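A policy-driven approach might select migration candidates along these lines, so that the system rather than the user decides what moves to a lower tier. The thresholds (180 days since last access, 1 MiB minimum size) and the `FileInfo`/`MigrationPolicy` names are hypothetical; real HSM products expose their own policy engines.

```python
from dataclasses import dataclass

SECONDS_PER_DAY = 86_400


@dataclass
class FileInfo:
    path: str
    size_bytes: int
    last_access: float  # seconds since the epoch


@dataclass
class MigrationPolicy:
    """Administrator-defined rule for what moves to a lower tier.
    The default thresholds are illustrative assumptions only."""
    min_idle_days: float = 180
    min_size_bytes: int = 1 << 20  # leave files under 1 MiB on primary storage

    def candidates(self, files: list[FileInfo], now: float) -> list[str]:
        """Paths eligible for migration: idle long enough and large enough."""
        cutoff = now - self.min_idle_days * SECONDS_PER_DAY
        return [
            f.path
            for f in files
            if f.last_access < cutoff and f.size_bytes >= self.min_size_bytes
        ]
```

There is deliberately no user-facing “migrate this file now” call here: eligibility is decided entirely by the administrator’s thresholds.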

I will certainly agree, though, that HSM storage does have the potential to introduce other slow-downs into the system; in particular, I still dislike the filesystem density it can introduce into systems where large amounts of data have been stubbed and more data has then been added. (One reason for HSM’ing from a NAS, I guess: they do handle dense filesystems more easily than regular filesystems…)

Preston,

From the SNIA website:

Archive:

1. [Data Management] A collection of data objects, perhaps with associated metadata, in a storage system whose primary purpose is the long-term preservation and retention of that data.

2. [Data Management] Synonym for data ingestion.

So, according to the source, archive does not (necessarily) entail deletion. It does not do so for TSM users, nor for Symantec eVault users, nor for NW users. I think you are swimming against the current here. 🙂

Well, it’s worked for salmon for untold years, so swimming against the stream isn’t necessarily a bad thing 🙂