In many environments with storage nodes, a common requirement is to share backup devices between the server and/or storage nodes (regardless of whether the storage nodes are dedicated or full). The primary goal is to reduce the number of devices or tape libraries required, minimising cost while still maximising the flexibility of the environment.

There are two mechanisms available for device sharing. These are:

- Library sharing – free from any licensing, this is the cheapest but least flexible

- Dynamic drive sharing – requiring additional licenses, this is more flexible but comes at a higher cost in terms of maintenance, debugging and complexity.

It’s easiest to gain an understanding of how these two options work with some diagrams.

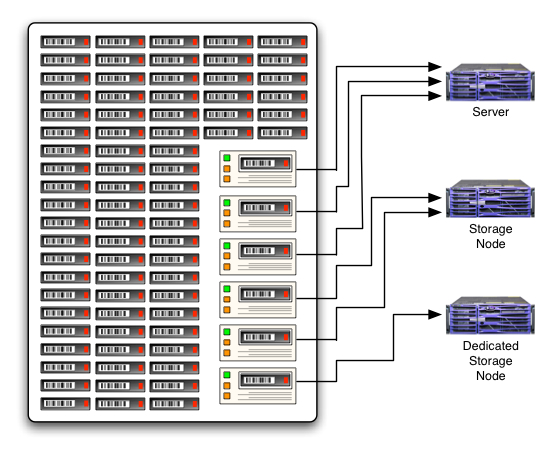

First, let’s consider library sharing:

(Not shown: connection to tape robot head – i.e., control port connection.)

In this configuration, more than one host connects to specific devices in the tape library. These are hard or permanent connections; that is, once a device has been allocated to one server/storage node, it stays allocated to that host until the library is reconfigured.

This is a static allocation of resources: the backup administrator assigns a specific number of devices to each server/storage node based on the expected requirements of the environment. For instance, in the example above, the server has permanent mappings to 3 of the 6 tape drives in the library, the full storage node has permanent mappings to 2 of the drives, and the dedicated storage node has a permanent mapping to the one remaining drive.
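To make the static allocation concrete, here is a minimal Python sketch that models the example as a fixed host-to-drive map and checks that each drive belongs to at most one host. The host and drive names (`server`, `stnode`, `dedstnode`, `drive1` to `drive6`) are hypothetical labels for illustration, not NetWorker resource names, and the check is not something NetWorker itself exposes.

```python
from collections import Counter

# Hypothetical labels for the 6 drives in the example library.
LIBRARY_DRIVES = [f"drive{n}" for n in range(1, 7)]

# Static (library sharing) allocation from the example:
# server gets 3 drives, the full storage node 2, the dedicated storage node 1.
STATIC_MAP = {
    "server": ["drive1", "drive2", "drive3"],
    "stnode": ["drive4", "drive5"],
    "dedstnode": ["drive6"],
}


def validate_static_map(static_map, library_drives):
    """Return per-host drive counts; raise if a drive is double-mapped or unknown."""
    owners = Counter(d for drives in static_map.values() for d in drives)
    doubly_assigned = sorted(d for d, n in owners.items() if n > 1)
    if doubly_assigned:
        raise ValueError(f"drives mapped to more than one host: {doubly_assigned}")
    unknown = sorted(set(owners) - set(library_drives))
    if unknown:
        raise ValueError(f"drives not in library: {unknown}")
    return {host: len(drives) for host, drives in static_map.items()}


counts = validate_static_map(STATIC_MAP, LIBRARY_DRIVES)
print(counts)               # {'server': 3, 'stnode': 2, 'dedstnode': 1}
print(sum(counts.values()))  # 6
```

Rejecting a drive that appears under two hosts is the whole point of the model: in library sharing, each drive has exactly one permanent owner until the library is reconfigured.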

The key advantages of this allocation method are:

- Zero licensing cost,

- Guaranteed device availability,

- Per-host/device isolation, preventing faults on one system from cascading to another.

The disadvantages of this allocation method are:

- No dynamic reallocation of resources in the event of requirement spikes that were not anticipated

- Can’t be reconfigured “on the fly”

- If a backup device fails and a host only has access to one device, it won’t be able to back up or recover without configuration changes.
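The last disadvantage can be checked mechanically. The Python sketch below uses hypothetical host and drive names mirroring the 3 + 2 + 1 example. It takes a static host-to-drive map and a set of failed drives, and reports which hosts are left with no usable device.

```python
# Hypothetical static map, mirroring the example (3 + 2 + 1 drives).
STATIC_MAP = {
    "server": {"drive1", "drive2", "drive3"},
    "stnode": {"drive4", "drive5"},
    "dedstnode": {"drive6"},
}


def hosts_without_drives(static_map, failed):
    """Hosts left with no working drive once the drives in `failed` are down.

    With static mapping a host cannot borrow another host's drive, so any
    host whose mapped drives have all failed can neither back up nor recover
    until the library is reconfigured.
    """
    failed = set(failed)
    return sorted(host for host, drives in static_map.items() if not drives - failed)


print(hosts_without_drives(STATIC_MAP, ["drive6"]))  # ['dedstnode']
print(hosts_without_drives(STATIC_MAP, ["drive1"]))  # []
```

A single failed drive is enough to strand the dedicated storage node, while the server, with three mapped drives, degrades gracefully.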

Where you would typically use this allocation method:

- In VTLs – since NetWorker licenses VTLs by capacity*, you can allocate as many virtual drives as you want. Every host with more than a certain amount of data in the datazone can be given one or more virtual drives of its own, significantly reducing the LAN impact of backups.

- In PTLs where backup/recovery load is shared reasonably equally by two or more storage nodes (counting the server as a storage node in this context) and having only one library is desirable.

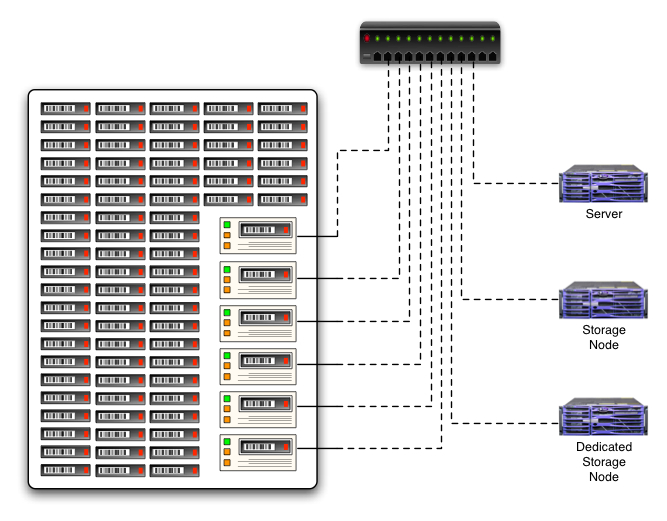

Moving on to dynamic drive sharing, this model resembles the following:

In this model, licenses are purchased, on a per-drive basis, for dynamic sharing. (So if you have 6 tape drives, such as in the above example, you would need up to 6 dynamic drive sharing licenses – you don’t have to share every device in the library – some could remain statically mapped if desired.)

When a library with dynamically shared drives is set up within NetWorker, the correct path to the device, on a per-host basis, will be established for that device within the configuration. For example, the device “/dev/nst0” on the backup server might be known in the configuration by all three of the following names:

- `/dev/nst0`

- `rd=stnode:/dev/rmt/0cbn`

- `rd=dedstnode:\\.\Tape0`
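The naming pattern in the list above can be sketched in Python: a device local to the server is named by its bare path, and the same drive seen from a storage node is named `rd=<host>:<path>`. The dictionary and function here are hypothetical helpers for illustration only. They show the naming convention, not how NetWorker builds its device resources internally.

```python
# Hypothetical model of one dynamically shared drive: the same physical
# drive is reached through a different OS path on each host.
SHARED_DRIVE_PATHS = {
    "server": "/dev/nst0",      # Linux non-rewinding path on the backup server
    "stnode": "/dev/rmt/0cbn",  # Solaris-style path on the full storage node
    "dedstnode": r"\\.\Tape0",  # Windows path on the dedicated storage node
}


def device_names(paths_by_host, server):
    """Bare path for the server's own device; rd=host:path for remote hosts."""
    names = []
    for host, path in paths_by_host.items():
        if host == server:
            names.append(path)
        else:
            names.append(f"rd={host}:{path}")
    return names


for name in device_names(SHARED_DRIVE_PATHS, server="server"):
    print(name)
# /dev/nst0
# rd=stnode:/dev/rmt/0cbn
# rd=dedstnode:\\.\Tape0
```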

When a host with dynamic access to drives needs a device (for either backup or recovery), the NetWorker server (or whichever host has control over the actual tape robot) will load a tape into a free, mappable drive, then notify the storage node which device it should use to access media. The storage node will then use the media until it no longer needs to, with the host that controls the robot handling any post-use unmounts or media changes.
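The sequence just described can be modelled as a small Python class. This is a hypothetical sketch, not NetWorker code. It assumes the robot-controlling host keeps a table of which drives each host can reach and which are busy. On a request it "loads" the volume into the first free drive that is mappable to the requester and hands back that host's own device name. On release it "unmounts" the volume and frees the drive.

```python
class RobotController:
    """Hypothetical model of the host that controls the tape robot."""

    def __init__(self, drive_paths):
        # drive_paths: {drive_id: {host: device name as seen by that host}}
        self.drive_paths = drive_paths
        self.in_use = {}  # drive_id -> (host, volume)

    def request(self, host, volume):
        """Load `volume` into a free drive `host` can reach; return (drive, path)."""
        for drive, paths in self.drive_paths.items():
            if drive not in self.in_use and host in paths:
                self.in_use[drive] = (host, volume)  # robot loads the volume
                return drive, paths[host]            # host-specific device name
        return None  # nothing free and mappable: the request has to wait

    def release(self, host, drive):
        """Host is done with the drive: the controller unmounts the volume."""
        owner, volume = self.in_use[drive]
        if owner != host:
            raise ValueError(f"{drive} is not in use by {host}")
        del self.in_use[drive]
        return volume


# Hypothetical two-drive library shared by the server and one storage node.
ctl = RobotController({
    "drive1": {"server": "/dev/nst0", "stnode": "rd=stnode:/dev/rmt/0cbn"},
    "drive2": {"server": "/dev/nst1", "stnode": "rd=stnode:/dev/rmt/1cbn"},
})
print(ctl.request("stnode", "VOL001"))  # ('drive1', 'rd=stnode:/dev/rmt/0cbn')
print(ctl.request("server", "VOL002"))  # ('drive2', '/dev/nst1')
print(ctl.request("server", "VOL003"))  # None
print(ctl.release("stnode", "drive1"))  # VOL001
print(ctl.request("server", "VOL001"))  # ('drive1', '/dev/nst0')
```

In the last two calls, VOL001 is released (unmounted) before the server can use drive1, even though the server wants the same volume. This mirrors the nsrmmd limitation listed among the disadvantages of dynamic drive sharing.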

The advantages of dynamic drive sharing are:

- With maximal device sharing enabled, resource spikes can be handled by dynamically allocating a useful number of drives to host(s) that need them at any given time,

- Fewer drives are typically required than with a library sharing (statically mapped) allocation method.
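The reason fewer drives are needed comes down to arithmetic. With static mapping, each host must be sized for its own peak. A dynamically shared pool only has to cover the peak of the combined demand. The minimal Python sketch below illustrates this with made-up hourly demand figures, which are not measurements.

```python
# Hypothetical concurrent drive demand per host, sampled hourly over one night.
DEMAND = {
    "server":    [3, 1, 0, 0, 1],
    "stnode":    [0, 2, 2, 0, 0],
    "dedstnode": [0, 0, 1, 3, 0],
}


def drives_static(demand):
    """Static mapping: every host needs enough drives for its own peak."""
    return sum(max(series) for series in demand.values())


def drives_dynamic(demand):
    """Dynamic sharing: the pool only needs the peak of the combined load."""
    return max(sum(slot) for slot in zip(*demand.values()))


print(drives_static(DEMAND))   # 8  (3 + 2 + 3)
print(drives_dynamic(DEMAND))  # 3  (combined load per hour: 3, 3, 3, 3, 1)
```

This idealised calculation ignores tape load/unload time, the unmount-before-transfer overhead, and overlaps hidden inside each hourly sample. Real plans need headroom above the combined peak, which is exactly where the under-provisioning risk described below comes from.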

The disadvantages of dynamic drive sharing are:

- With multiple hosts able to see the same devices, isolating those devices from SCSI resets and other SAN events generated by hosts that aren’t currently accessing them requires constant vigilance. HBAs and SAN settings must be configured appropriately, and these settings must be migrated/checked every time drivers change, systems are updated, etc.

- It is relatively easy to misconfigure dynamic drive sharing by planning for fewer physical tape drives than are really necessary. (I.e., it’s not that cheap from a hardware perspective either.)

- Each drive that is dynamically shared requires a license.

- Unlike products such as, say, NetBackup, NetWorker requires volumes to be unmounted before devices are transferred from one dynamic drive sharing host to another, even if both hosts will be using the same volume. This is due to the way nsrmmd processes work and don’t share nicely with each other. (This falls into the “lame” feature category.)

You would typically use dynamic drive sharing in situations such as:

- Where a small, select number of hosts with significant volumes of data require LAN-free backups,

- For small/isolated storage nodes that are still SAN-connected (e.g., DMZ storage nodes).

Many of the architectural reasons for which dynamic drive sharing was originally developed have, in some senses, gone away with the greater penetration of VTLs into the backup arena. Given that it’s a straightforward proposition to configure a large number of virtual tape drives, rather than messing about with dynamic drive sharing one can simply use library sharing in VTL environments to achieve the best of both worlds.

—

* Currently, non-EMC VTLs, while still licensed by capacity, typically also receive unlimited autochanger licenses. Even so, such licenses are not limited by the number of virtual tape drives.

Thank you, very interesting post.

Is there any documentation about possible SCSI resets? A list of software that causes them?

Thanks

The documentation is maintained almost on an HBA-by-HBA and driver-by-driver basis. Different operating systems require different levels of intervention as well. For instance, on Windows systems a key requirement seems to be ensuring that the driver doesn’t send a ResetTPRLO.

Some pages that may get you started include:

This QLogic Configuration File

This HP Discussion

This old Legato Tech Bulletin

To work out what you need to do, I’d suggest running some Google searches on the keywords “tape mask SCSI reset SAN”, but also include the OS and HBA(s) that you are using.

Sometimes NetWorker tries to load tapes into some drives but reports “device busy”, even though that drive is not writing.

I have an Emulex HBA and am looking for the tapereset parameter, which I cannot find.

Since my server is connected to some CLARiiON storage, I installed the Emulex driver from the EMC tab. Maybe I have to install the IBM one.

What do you think ?

Thanks a lot

I can’t really say for sure – it’s been a while since I’ve used Emulex HBAs … normally, though, if the HBAs were supplied by EMC, I tended to stick to the EMC drivers.

On Windows systems at least, the parameters to mask/hide SCSI resets were usually found in the configuration utility for the card. Unfortunately, again, since it’s been a while since I’ve worked with Emulex cards, I can’t point you at where those settings are…