In a previous article, I discussed how deduplication is one of those technologies that still straddles the gap between bleeding edge and leading edge, and thus needs to be classified as bleeding edge.

Putting aside the bleeding edge/leading edge argument for the moment (though my view there remains the same), a growing concern I have for deduplication is that it’s popping up everywhere in little islands rather than as a fully integrated option.

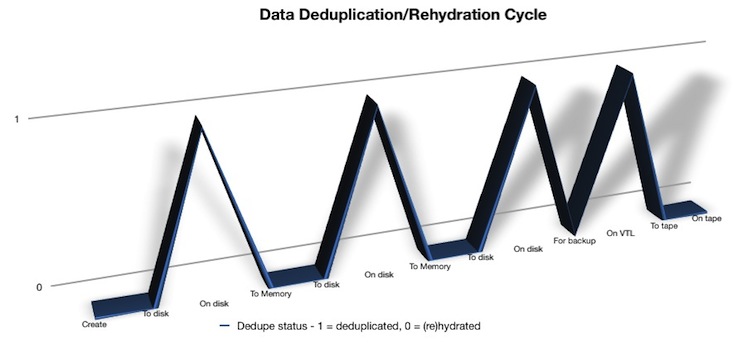

The net result? Dedupe on primary storage. Rehydrate to access. Modify, then dedupe to save again. Rehydrate for next access. Dedupe for saved changes. Rehydrate to backup. Dedupe the backup. Rehydrate for recovery.

All this dedupe is making me thirsty. Worse, it’s starting to look like a roller-coaster ride, and I always have the same reaction to them – horror, then an urge to throw up a little. The cycle doesn’t even look nice.

So, what’s the solution?

There’s certainly no easy solution – and currently no integrated one. There won’t be without some serious consideration of standards. Let’s accept, for the moment, that there’s no real option to keep in-OS/RAM data deduplicated. (I.e., at the per-operating system level – maybe there would be at a cross-OS virtualisation level within the hypervisor, but we’re not really there yet.)

One obvious thought is that the first, best step towards some normalisation would be a technique for transferring deduped primary storage, in its deduped format, to deduped backup storage. There are already techniques for synchronising deduplicated data (e.g., when replicating between, say, two Data Domain hosts). Why rehydrate when the very next step is going to be a new dedupe algorithm being applied?
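To make the difference concrete, here is a minimal Python sketch of the two paths. Everything in it is hypothetical and purely illustrative: `DedupeStore`, `backup_with_rehydration` and `backup_deduped` are names I've made up, the chunking is naive fixed-size chunking, and it assumes both ends agree on chunk size and hash function, which is exactly the sort of agreement that doesn't exist between products today. It is not how Data Domain, Avamar or any other real product works internally.

```python
import hashlib

CHUNK_SIZE = 4  # tiny fixed-size chunks, purely for illustration


class DedupeStore:
    """Toy content-addressed store: unique chunks keyed by SHA-256 digest."""

    def __init__(self):
        self.chunks = {}  # digest -> chunk bytes
        self.files = {}   # file name -> ordered list of digests (the "recipe")

    def write(self, name, data):
        """Dedupe: split into chunks and keep only those not yet stored."""
        recipe = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)
            recipe.append(digest)
        self.files[name] = recipe

    def read(self, name):
        """Rehydrate: rebuild the full byte stream from the recipe."""
        return b"".join(self.chunks[d] for d in self.files[name])


def backup_with_rehydration(src, dst, name):
    """The roller-coaster: rehydrate on the source, dedupe again on the target."""
    data = src.read(name)
    dst.write(name, data)
    return len(data)  # payload bytes that crossed the wire


def backup_deduped(src, dst, name):
    """Send the recipe plus only the chunks the target does not already hold."""
    recipe = src.files[name]
    sent = 0
    for digest in set(recipe):
        if digest not in dst.chunks:
            dst.chunks[digest] = src.chunks[digest]
            sent += len(src.chunks[digest])
    dst.files[name] = list(recipe)
    return sent  # payload bytes only; recipe metadata is ignored here


primary = DedupeStore()
primary.write("report.doc", b"ABCDABCDABCDEFGH")  # 16 bytes, 2 unique chunks

backup_a, backup_b = DedupeStore(), DedupeStore()
rehydrated_bytes = backup_with_rehydration(primary, backup_a, "report.doc")
deduped_bytes = backup_deduped(primary, backup_b, "report.doc")
```

In this toy case the rehydrating path moves all 16 bytes while the deduped path moves only the 8 bytes of unique chunks, and both backup stores restore the same file. The catch is the assumption buried in `backup_deduped`: the target has to understand the source's chunks and digests as-is.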

If we look at NetWorker, there are a number of places where dedupe can happen, either as part of the backup cycle, or a larger strategy:

- Primary storage deduplication via, say, a Data Domain storage box or something along those lines.

- Archive/single instance deduplication for less frequently accessed files (say, Centera).

- Source based dedupe backup (via an Avamar node).

- Dedupe VTL (Data Domain or the DL4000 with a deduplication add-on).

(No, I won’t put dedupe backup to disk there. Not until ADV_FILE starts working better.)

Within the EMC product kit, there’s plenty of scope for interoperability of deduplicated data without the need to rehydrate. If anything, EMC is one of the few vendors out there (HP and IBM are the only others that spring to mind) that offer reasonably complete verticals on storage, running from the base array to the backup solution.

Based on EMC’s strong focus on deduplication with the acquisition of both Avamar and Data Domain, it seems a distinct possibility that this is at least a part of their planning. Shifting deduplicated data between disparate products without needing to rehydrate does have the potential to be a game changer in terms of how we work with data, but I’ll promise you this: you won’t see this level of integration this year, and possibly not for the next few years. That level of integration is not going to be easy, it’s not going to come quickly, and it’s going to require extreme levels of testing to make sure that it actually works when it is implemented.

So for the time being, we’ll have to continue to put up with deduplication being done in little islands within our IT environments, and continue to ride the deduplication/rehydration roller-coaster. Let’s hope we don’t all get sick before solutions start to appear.