Introduction

I was lucky enough to get to work on the beta testing programme for NetWorker 7.6 SP1. While there are a bunch of new features in NW 7.6 SP1 (with the one most discussed by EMC being the Data Domain integration), I want to talk about three new features that I think are quite important, long term, in core functionality within the product for the average Joe.

These are:

- Scheduled Cloning

- AFTD enhancements

- Checkpoint restarts

Each of these on its own represents a significant benefit to the average NetWorker site, and I’d like to spend some time discussing the functionality they bring.

[Edit/Aside: You can find the documentation for NetWorker 7.6 SP1 available in the updated Documentation area on nsrd.info]

Scheduled Cloning

In some senses, cloning has been the bane of the NetWorker administrator’s life. Up until NW 7.6 SP1, NetWorker has had two forms of cloning:

- Per-group cloning, immediately following completion of backups;

- Scripted cloning.

A lot of sites use scripted cloning, simply due to the device/media contention frequently caused in per-group cloning. I know this well; since starting working with NetWorker in 1996, I’ve written countless numbers of NetWorker cloning scripts, and currently am the primary programmer for IDATA Tools, which includes what I can only describe as a cloning utility on steroids (‘sslocate’).

Will those scripts and utilities go away with scheduled cloning? Well, I don’t think they’re always going to go away – but I do think that they’ll be treated more as utilities rather than core code for the average site, since scheduled cloning will be able to achieve much of the cloning requirements for companies.

I had heard that scheduled cloning was on the way long before the 7.6 SP1 beta, thanks mainly to one day getting a cryptic email along the lines of “if we were to do scheduled cloning, what would you like to see in it…” – so it was pleasing, when it arrived, to see that much of my wish list had made it in there. As a first-round implementation of the process, it’s fabulous.

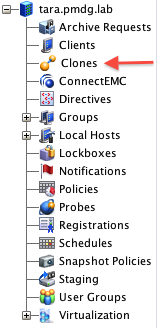

So, let’s look at how we configure scheduled clones. First off, in NMC, you’ll notice a new menu item in the configuration section:

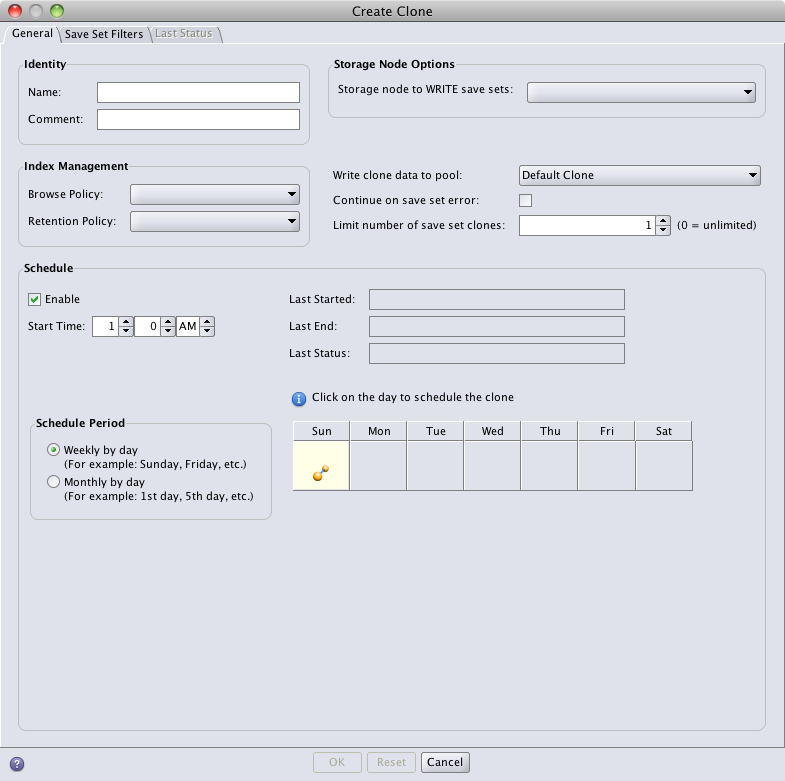

This will undoubtedly bring joy to the heart of many a NetWorker administrator. If we then choose to create a new scheduled clone resource, we can create a highly refined schedule:

Let’s look at those options first before moving onto the second tab:

- Name and comment is pretty self explanatory – nothing to see there.

- Browse and retention – define, for the clone schedule, both the browse and retention time of the savesets that will be cloned.

- Start time – Specify exactly what time the cloning is to start.

- Schedule period – Weekly allows you to specify which days of the week the cloning is to run. Monthly allows you to specify which dates of the month the cloning will run.

- Storage node – Allows you to specify which storage node the clone will write to. Great for situations where you have, say, an off-site storage node and you want the data streamed directly across to it.

- Clone pool – Which pool you want to write the clones to – fairly expected.

- Continue on save set error – This is a big help. Standard scripting of cloning will fail if one of the savesets selected to clone has an error (regardless of whether that’s a read error, or it disappears (e.g., is staged off) before it gets cloned, etc.) and you haven’t used the ‘-F’ option. Click this check box and the cloning will at least continue and finish all savesets it can hit in one session.

- Limit number of save set clones – By default this is 1, meaning NetWorker won’t create more than one copy of the saveset in the target pool. This can be increased to a higher number if you want multiple clones, or it can be set to zero (for unlimited), which I wouldn’t see many sites having a need for.
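To make the options above a little more concrete, here is a minimal Python sketch of how a scheduled clone resource could be modelled. It is purely illustrative: the class and field names are my own assumptions rather than NetWorker's actual resource attributes, and the helper only mimics the described behaviour of the clone limit (1 by default, 0 meaning unlimited).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ScheduledClone:
    """Hypothetical model of a scheduled clone resource (not a NetWorker API)."""
    name: str
    clone_pool: str
    start_time: str = "22:00"      # HH:MM, 24-hour clock
    period: str = "weekly"         # "weekly" or "monthly"
    days: List[str] = field(default_factory=lambda: ["sat"])
    storage_node: str = ""         # empty means no specific storage node chosen
    continue_on_error: bool = True
    max_clones: int = 1            # 0 means unlimited

    def may_clone(self, existing_clones_in_pool: int) -> bool:
        """Return True if another copy may be written to the clone pool."""
        if self.max_clones == 0:
            return True
        return existing_clones_in_pool < self.max_clones


weekend = ScheduledClone(name="weekend-offsite", clone_pool="Offsite Clone")
print(weekend.may_clone(0))  # no copy in the pool yet, so a clone is allowed
print(weekend.may_clone(1))  # the default limit of 1 has already been reached
```

With the default limit, a saveset that already has one copy in the target pool is skipped; setting `max_clones=0` in this sketch removes the cap entirely.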

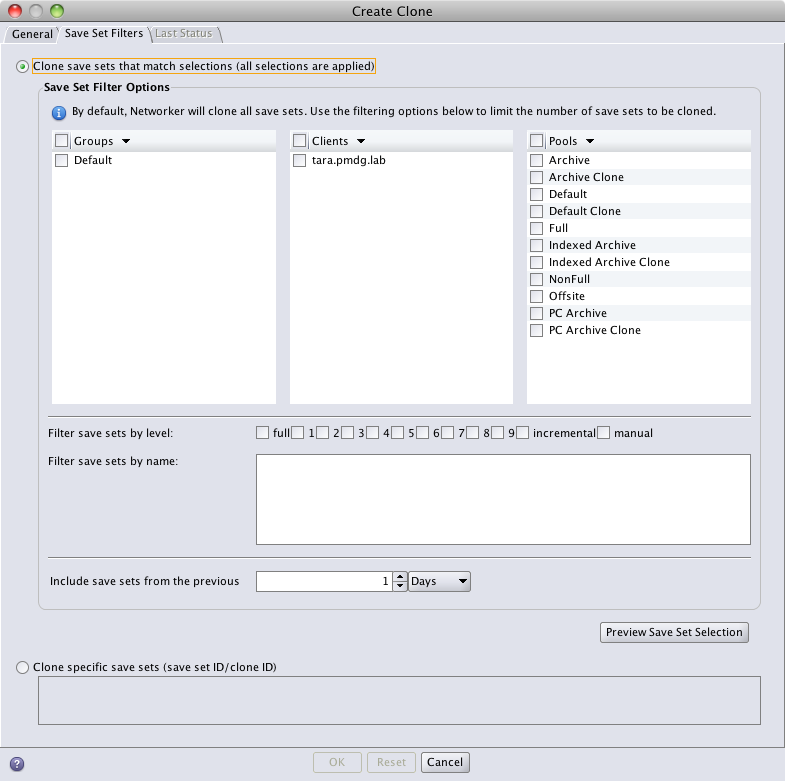

Once you’ve got the basics of how often and when the scheduled clone runs, etc., you can move on to selecting what you want cloned:

I’ve only just refreshed my lab server, so you can see that a bit of imagination is required in the above screen shot to flesh out what this may look like at a normal site. But you can choose savesets to clone based on:

- Group

- Client

- Source Pool

- Level

- Name

or

- Specific saveset ID/clone IDs

When specifying savesets based on group/client/level/etc., you can also specify how far back NetWorker is to look for savesets to clone. This avoids a situation whereby you might say, enable scheduled cloning and suddenly have media from 3 years ago requested.
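The effect of combining selection criteria with a look-back window can be sketched in a few lines of Python. This is only a model of the idea, not how NetWorker implements it: the `SaveSet` record, its field names and the `select_for_clone` helper are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class SaveSet:
    """Hypothetical saveset record; field names are illustrative only."""
    ssid: str
    group: str
    client: str
    pool: str
    level: str
    name: str
    saved_at: datetime


def select_for_clone(savesets: List[SaveSet], now: datetime, lookback_days: int,
                     **criteria: Optional[str]) -> List[SaveSet]:
    """Keep savesets inside the look-back window that match every criterion given."""
    cutoff = now - timedelta(days=lookback_days)
    selected = []
    for ss in savesets:
        if ss.saved_at < cutoff:
            continue  # too old: never request media from years ago
        if all(getattr(ss, key) == value for key, value in criteria.items()):
            selected.append(ss)
    return selected


now = datetime(2010, 10, 2, 22, 0)
catalogue = [
    SaveSet("1001", "Servers", "fs01", "Default", "full", "/home", datetime(2010, 10, 1, 23, 0)),
    SaveSet("1002", "Servers", "fs01", "Default", "incr", "/home", datetime(2010, 9, 30, 23, 0)),
    SaveSet("0042", "Servers", "fs01", "Default", "full", "/home", datetime(2007, 10, 1, 23, 0)),
]
picked = select_for_clone(catalogue, now, lookback_days=7, group="Servers", level="full")
print([ss.ssid for ss in picked])  # ['1001']
```

The three-year-old full backup matches on group and level but falls outside the seven-day window, so it is never requested.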

You might wonder about the practicality of being able to schedule a clone for specific SSID/CloneID combos. I can imagine this would be particularly useful if you need to do ad-hoc cloning of a particularly large saveset. E.g., if you’ve got a saveset that’s say, 10TB, you might want to configure a schedule that would start specifically cloning this at 1am Saturday morning, with your intent being to then delete the scheduled clone after it’s finished. In other words, it’s to replace having to do a scheduled cron or at job just for a single clone.

Once configured, and enabled, scheduled cloning runs like a dream. In fact, it was one of the first things I tackled while doing beta testing, and almost every subsequent day found myself thinking at 3pm “why is my lab server suddenly cloning? – ah yes, that’s why…”

AFTD Enhancements

There’s not a huge amount to cover in terms of AFTD enhancements – they’re effectively exactly the same enhancements that have been rolled into NetWorker 7.5 SP3, which I’ve previously covered here. So, that means there are better volume selection criteria for AFTD backups, but we don’t yet have backups being able to continue from one AFTD device to another. (That’s still in the pipeline and being actively worked on, so it will come.)

Even this one key change – the way in which volumes are picked in AFTDs for new backups – will be a big boon for a lot of NetWorker sites. It will allow administrators to not focus so much on the “AFTD data shuffle”, as I like to consider it, and instead focus on higher level administration of the backup environment.

(These changes are effectively “under the hood”, so there’s not much I can show in the way of screen-shots.)

Checkpoint Restarting

When I first learned NetBackup, I immediately saw the usefulness of checkpoint restarting, and have been eager to see it appear in NetWorker since that point. I’m glad to say it’s appeared in (what I consider to be) a much more usable form. So what is checkpoint restarting? If you’re not familiar with the term, it’s where the backup product has regular points at which it can restart from, rather than having to restart an entire backup. Previously NetWorker has only done this at the saveset level, but that’s not really what the average administrator would think of when ‘checkpoints’ are discussed. NetBackup, last I looked at it, does this at periodic intervals – e.g., every 15 minutes or so.

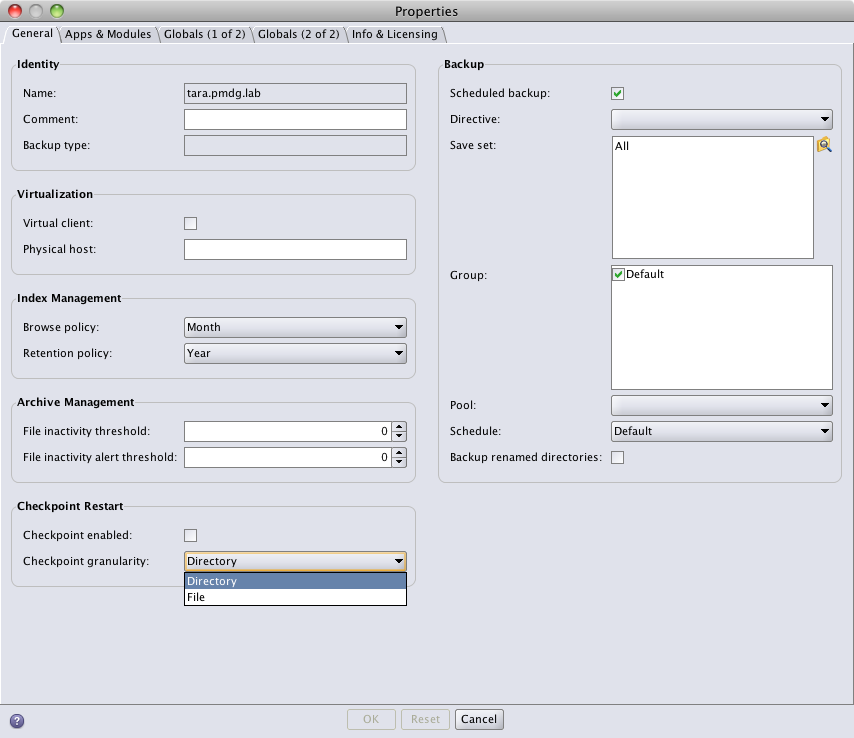

Like almost everything in NetWorker, we get more than one way to run a checkpoint:

Within any single client instance you can choose to enable checkpoint restarting, with the restart options being:

- Directory – If a backup failure occurs, restart from the last directory that NetWorker had started processing.

- File – If a backup failure occurs, restart from the last file NetWorker had started processing.
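The difference between the two restart options can be illustrated with a small Python sketch. It assumes a backup that walks files in a fixed order with each directory's files stored contiguously. The function name and the list-of-paths model are my own simplification, not anything taken from NetWorker itself.

```python
import posixpath
from typing import List


def restart_index(files: List[str], failed_at: int, granularity: str) -> int:
    """Return the index in `files` at which a restarted backup would resume.

    `failed_at` is the index of the file being processed when the failure hit.
    """
    if granularity == "file":
        # Resume at the file that was in flight when the backup failed.
        return failed_at
    if granularity == "directory":
        # Rewind to the first file of the directory that was in flight.
        current_dir = posixpath.dirname(files[failed_at])
        i = failed_at
        while i > 0 and posixpath.dirname(files[i - 1]) == current_dir:
            i -= 1
        return i
    raise ValueError("granularity must be 'file' or 'directory'")


walk = [
    "/home/a/1.doc",
    "/home/a/2.doc",
    "/home/b/1.doc",
    "/home/b/2.doc",
    "/home/b/3.doc",
]
print(restart_index(walk, 4, "file"))       # 4: only /home/b/3.doc is redone
print(restart_index(walk, 4, "directory"))  # 2: all of /home/b is redone
```

In this model directory-level restarts repeat more work after a failure, while file-level restarts need a checkpoint per file, which is the trade-off behind choosing one or the other per client instance.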

Now, the documentation warns that with checkpoint enabled, you’ll get a slight performance hit on the backup process. However, that performance hit is nothing compared to the performance and potentially media hit you’d take if you’re 9.8TB through a 10TB saveset and the client is accidentally rebooted!

Furthermore, in my testing (which admittedly focused on savesets smaller than 10GB), I inevitably found that with either file or directory level checkpointing enabled, the backup actually ran faster than the normal backup. So maybe it’s also based on the hardware you’re running on, or maybe that performance hit doesn’t come in until you’re backing up millions of files, but either way, I’m not prepared to say it’s going to be a huge performance hit for anyone yet.

Note what I said earlier though – this can be configured on a single client instance. This lets you configure checkpoint restarting even on the individual client level to suit the data structure. For example, let’s consider a fileserver that offers both departmental/home directories, and research areas:

- The departmental/home directories will have thousands and thousands of directories – have a client instance for this area, set for directory level checkpointing.

- The research area might feature files that are hundreds of gigabytes each – use file level checkpointing here.

When I’d previously done a blog entry wishing for checkpoint restarts (“Sub saveset checkpointing would be good”), I’d envisaged the checkpointing being done via the continuation savesets – e.g., “C:”, “<1>C:”, “<2>C:”, etc. It hasn’t been implemented this way; instead, each time the saveset is restarted, a new saveset is generated of the same level, catering to whatever got backed up during that saveset. On reflection, I’m not the slightest bit hung up over how it’s been implemented, I’m just overjoyed to see that it has been implemented.
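As a rough mental model of that behaviour, the Python sketch below splits one logical backup into the partial savesets that each run would leave behind. It assumes file-level restarts and assumes that the file in flight at the moment of failure is not counted in the interrupted saveset. Neither detail is confirmed by the documentation I have seen, and the function is hypothetical.

```python
from typing import List


def run_with_restarts(files: List[str], failures: List[int]) -> List[List[str]]:
    """Split one logical backup into the partial savesets each run would produce.

    `failures` holds, in ascending order, the index of the file that was being
    processed at each interruption (file-level restart is assumed).
    """
    savesets = []
    start = 0
    for failed_at in failures:
        savesets.append(files[start:failed_at])  # files completed before the failure
        start = failed_at                        # the restart resumes at the in-flight file
    savesets.append(files[start:])               # final run completes the backup
    return savesets


parts = run_with_restarts(["a", "b", "c", "d", "e"], failures=[2, 4])
print(parts)  # [['a', 'b'], ['c', 'd'], ['e']]

# A recovery needs every partial saveset; together they cover the full file list.
recovered = [name for part in parts for name in part]
print(recovered == ["a", "b", "c", "d", "e"])  # True
```

The point of the sketch is simply that no single saveset holds the whole backup after a restart; the complete picture comes from the set of same-level savesets taken together.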

Now you’re probably wondering – does the checkpointing work? Does it create any headaches for recoveries? Yes, and no. As in, yes it works, and no, throughout all my testing, I wasn’t able to create any headaches in the recovery process. I would feel very safe about having checkpoint restarts enabled on production filesystems right now.

Bonus: Mac OS X 10.6 (“Snow Leopard”) Support

For some time, NetWorker has had some issues supporting Mac OS X 10.6, and it’s certainly caused some problems for various customers as this operating system continues to get market share in the enterprise. I was particularly pleased during a beta refresh to see an updated Mac OS X client for NetWorker. This works excellently for backup, recovery, installation, uninstallation, etc. I’d suggest on the basis of the testing I did that any site with OS X should immediately upgrade those clients at least to 7.6 SP1.

In Summary

The only major glaring question for me, looking at NetWorker 7.6 SP1 is the obvious: this has so many updates, and so many new features, way more than we’d see in a normal service pack – why the heck isn’t it NetWorker v8?

Preston,

Thanks for the write up. 7.6.1 was a big release for us and as you say, it has a lot of new features. I just want to add that scheduled cloning was never on a requirements list when we started the program. Thanks to customers like you and a willingness by product management we were able to drive it into the product. This is only the beginning however, so keep the feedback coming and help us drive more innovation and features in the future. We are listening. Thank you again.

Cheers,

Skip

NetWorker Usability

I was hoping to see support for vStorage APIs in that specific release… I do have many customers who are waiting for this feature, which is available in many backup products already…

I’m looking forward to playing with the scheduled cloning and checkpoint features though…

Eric

Preston –

I’d been told that 7.6.1 would allow VCB proxy servers to change transport mode from one client to another. Did you see anything about this?

thanks for the preview

Thanks for providing the useful analysis of these features.

I have two Networker servers running in a university setting, and I’ve been going back and forth about updating to 7.6.1 sooner or waiting a while. One of our Networker servers (running on RHEL4) is at 7.5.1, and whenever I update to 7.5.2 or 7.5.3 (any build, it seems), I inexplicably get kernel “Out of memory” errors, and nsrjobd processes die as well. This happens at various moments when multiple savegroups are running.

I want to upgrade to something past 7.5.1 in order to get better support for Windows 2008 and Windows 7 clients among other things, but this out of memory problem – EMC support has not yet given useful suggestions on resolving it – is keeping me at 7.5.1.

Now, I’m looking at the list of fixes for 7.6.1 and wondering if there’s an outside chance upgrading to 7.6.1 may fix this problem. The other thing I wonder is if it is just as easy to roll back from 7.6.x to 7.5.1 if the error doesn’t resolve and/or new issues arise. Any thoughts?

joe

Hi Joe,

Moving from 7.6.x to 7.5.1 should be possible – I’m not aware of any major changes to the media database format, and if I recall correctly, I was advised recently it was possible to drop down from 7.6.0.x to 7.5.1 without issue.

Out of curiosity, do you have any disparity in RHEL4 between the 32/64-bitness of the OS and of NetWorker?

I also have some recollection of there being some compatibility/library issues with RHEL4 that were resolved with RHEL5 – have you got any way of testing a deployment on RHEL5?

Cheers,

Preston.

Mike, sorry, I’m not too across the VCB components in 7.6 SP1, so I can’t say one way or the other.

Eric,

Look for vStorage API support in 7.6.2 due out in the December time frame.

Cheers,

Skip Hanson

NetWorker Usability

networker_usability@emc.com

Hi Skip.

Will NetWorker 7.6.2 include support for Changed Block Tracking in vStorage API ?

And will 7.6.2 include support for DR restore of Windows Server 2008 ?

Johannes

Wish I could say I’m having the same success with Scheduled Clones as you had. I run 5 separate groups, and wanted to do the clones once they were all done. After installing I ran through the setup of a scheduled clone, and asked it to show me all the backup sets from “1” day, and when I ran the preview, it showed me practically nothing. I’m still experimenting, but something just doesn’t seem to be working right.

FYI – I just upgraded to 7.6.1 and the alternate VCB transport mode works. You just put VCB_TRANSPORT_MODE=nbd in the client’s application information. I’m very pleased!

I have NetWorker versions 7.6 on down to 6.3.

The latest JAVA breaks NMC’s ability to control older NetWorker servers, the interface locks up.

Trying to get around this I loaded NMC from the latest 7.6.1.1.

This version won’t even connect to the 6.3 servers.

I rolled java back, no help, looks like I have to roll back NMC too.

Too bad, it has nicer graphics in the reporting.

Blair,

The Preview option doesn’t seem to be working for me either. But the scheduled clone jobs are running fine.

Is there a way to stop the scheduled clones once they’re running? We have set this up with a few different jobs, but now they’ve taken all of the tape drives and we can’t run restores

In NMC->Monitoring, it should be possible to jump to the “Clones” tab (see the top window pane – it has tabs of “Groups” and “Clones”), then right-click on the group you wish to stop and choose Stop from the menu.

you’d think so, I can see the Stop option, but it is greyed out once the clone has started. I have the option to start ones that aren’t running, but not stop the ones that are

I am logged in with the default Administrator account in Networker, so it shouldn’t be a matter of permissions either

Hmm, I have used that functionality before, with success. Have you disabled the clone sessions? I don’t have a lab machine I can test on right now, so I’m just supposing – maybe if it’s disabled you can do a manual start but it won’t let you do a manual stop.

The other possibility is that nsrjobd is somehow not tracking it, which would likely need an actual restart of NetWorker then to stop it.

Also – what version of NetWorker are you using? I.e., obviously 7.6 SP1, but are you using that by itself, or cumulative release 1, cumulative release 2 or cumulative release 3 by any chance?

I have the same problem with my running clones, I cannot Stop or Restart or select anything besides Show Details. Everything is greyed out. Currently running 7.6.1.Build.397.

Are you using one of the cumulative patch releases for 7.6 SP1? I’d be interested to know if this problem still exists against 7.6.1.3, for instance.

I have been running 7.6.1.3 for a few weeks now and I am able to manually start clone jobs. It also looks like schedule-started jobs can be stopped. Go to NMC -> Monitoring -> Clones, then right-click the schedule item to start it. To stop, you also right-click and select “Stop Manual Clone Operation”. I know it says manual clone, but it looks to also stop auto-started jobs.

The problem I’m having is that scheduled jobs on a given day will start just after midnight on the first day they are scheduled. I run full backups starting Friday night, and cloning is then scheduled to start Saturday night. But what I keep observing is that the schedules for Saturday all start just after midnight Friday, so it appears the start time is ignored and only the start date is followed.

Hi Anthony,

So you’re actually seeing the options to stop the manual jobs not as an inactive menu item, but one you can select on?

(FWIW, check here for the safe way of stopping a cloning job from the command line.)

Re: the scheduled cloning jobs starting straight after midnight – what’s the schedule you’ve got established?

Cheers,

Preston.

Preston – yes it is an active menu item I can select. I have tried and it does appear to have stopped the clone job.

I have 30+ clone jobs that run over the weekend. As an example, I have a job that is set to run Saturday, Sunday and Monday at 10 pm, repeating every 12 hours. So I figured the first time it would start would have been 10 pm Saturday night. When I checked NMC Saturday afternoon (before 10 pm), the history showed the job as having started at 10 am. And yes, I’m sure it was scheduled for PM and not AM, since the time shows as 22:00 and not 10:00.

I would like to know how checkpoints work, and here are my queries:

If we enable checkpointing and choose the checkpoint granularity “directory”, where is the information about a backup that has stopped for some reason stored?

If we resume the backup later and it succeeds, will that backup be completely restorable?

Do we need to make any other changes to get checkpoint restart working? Have any tests been done with other customers, and were they satisfied?

Also, when the temporary backup information is stored in a path (on the backup server), how much space will it take? For example, if the mount point where the temporary backup info is stored does not have enough space, I assume the server might crash?

I’m not quite sure how the checkpoint metadata is stored, so I can’t answer whether it’s stored on the server or client.

I’ve used both directory and file level checkpointing on many sites without issue when it comes to restoration.

Checkpoint data is preserved across client reboots, so that’s not an issue for you.

Other than enabling checkpoints for filesystem type backups only, and ensuring any client for which checkpoints are enabled is on NetWorker 7.6 SP1 or higher, there’s no additional work to be done.

Hello

Is there any difference between starting the failed group from Monitoring and from the Groups list in the left pane? Which would resume from the last checkpoint if checkpointing is enabled? Thanks…

Hi Thierry,

As long as you choose ‘restart’ instead of ‘start’, there should be no difference in behaviour based on where you issue the command from.

I’m not sure, though, how well the checkpoint restart works in this situation in general. It’s more for a situation where the backup fails mid-way through the execution – I’ve not done any testing to see whether it works after the actual backup has been completely stopped by NetWorker.

Cheers,

Preston.