Introduction

Those of us who have been using NetWorker for many years know it’s been a long time between major version numbers. That’s not to say that NetWorker has sat still; after a period of slower development earlier in the 7.x tree, we saw in NetWorker 7.6 that EMC had well and truly re-committed to the product. At the time of its release, many of us wondered why NetWorker 7.6 hadn’t been numbered NetWorker 8. The number of changes from 7.5 to 7.6 was quite large, and the service packs for 7.6 also turned out to be big incremental improvements in their own right.

Now though, NetWorker 8 has arrived, and one thing is abundantly clear: it most certainly is a big enough collection of improvements and changes to warrant a major version number increase. More importantly, and beyond the number of changes, it warrants the v8 moniker on the basis of the type of changes: this is not really NetWorker v7+1 under the hood, but a significantly newer beast.

I’ve gone back and forth on whether I should try to do a single blog post outlining NetWorker 8, or a series of posts. The solution? Both. This piece will be a brief overview; I’ll then do some deeper dives into specific areas of changes within the product.

If you’re interested in the EMC official press release for NetWorker 8, by the way, you’ll find it here. EMC have also released a video about the new version, available here.

Upgrading

The first, most important thing I’ll say about NetWorker 8 is that you must take time to read both the release notes and the upgrade guide before you go about updating your system to the new version. I cannot stress this enough: if you are not prepared to carefully, thoroughly read the release notes and the upgrade guide, do not upgrade at all.

(To find those documents, mosey over to PowerLink – I expect it will be updated within the next 12-24 hours with the official documents and downloads.)

An upgrade technique which I’ve always advocated becomes mandatory as of NetWorker 8: you must upgrade all your storage nodes prior to upgrading the NetWorker server. I.e., after preliminary index and bootstrap backups, your upgrade sequence should look like the following:

- Stop NetWorker on server and storage nodes.

- Remove the nsr/tmp directory on the server and the storage nodes.

- Upgrade NetWorker on all storage nodes.

- Start NetWorker on storage nodes.

- Upgrade NetWorker on server.

- Start NetWorker on the server.

- Perform post-upgrade tests.

- Schedule client upgrades.

Any storage node not upgraded to NetWorker 8 will not be able to communicate with the NetWorker v8 server for device access. This has obvious consequences if your environment is using storage nodes that are locked to a 7.x release for reasons of client compatibility, release compatibility or embedded functionality. E.g., customers using the EDL embedded storage node will not be able to upgrade to NetWorker 8 unless EMC comes through with an 8.0 EDL storage node update. (I genuinely do not know if this will happen, for what it’s worth.)

If you have a lot of storage nodes, and upgrading both the server and the storage nodes in the same day is not an option, then you can rest assured that a NetWorker 8 storage node is backwards compatible with a NetWorker 7.6.x server. That is, you can take however many days you need to upgrade your storage nodes to v8 before upgrading your NetWorker server. I’d still suggest though that for best operations you should upgrade the storage node(s) and server all on the same day.

The upgrade guide also states that you can’t jump from a NetWorker 7.5.x (or lower, presumably) release to NetWorker 8. You must first upgrade the NetWorker server to 7.6.x, before subsequently upgrading to NetWorker 8. (In this case, the upgrade will at bare minimum involve upgrading the server to 7.6, starting it and allowing the daemons to fully stabilise, then shutting it down and continuing the upgrade process.)
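The two ordering rules above (storage nodes before the server, and no direct jump from 7.5.x or lower) can be sketched as a small planning helper. This is only an illustration: `plan_upgrade` and the `(major, minor)` version tuples are hypothetical names of mine, and the code does not talk to NetWorker in any way.

```python
from typing import List, Tuple

Version = Tuple[int, int]  # (major, minor), e.g. (7, 6)


def plan_upgrade(server: Version, storage_nodes: List[Version]) -> List[str]:
    """Return an ordered list of upgrade steps for a move to NetWorker 8.

    Encodes only the two rules described in the post; hypothetical helper.
    """
    steps: List[str] = []
    if server >= (8, 0):
        return steps  # server is already on v8, nothing to plan
    if server < (7, 6):
        # No direct jump from 7.5.x or lower: go via 7.6.x first.
        steps.append("upgrade server to 7.6.x, start it and let the daemons stabilise")
    pending = sum(1 for version in storage_nodes if version < (8, 0))
    if pending:
        # Storage nodes must be on v8 before the server is.
        steps.append(f"upgrade {pending} storage node(s) to 8.0")
    steps.append("upgrade server to 8.0")
    return steps


for step in plan_upgrade((7, 5), [(7, 5), (7, 6)]):
    print(step)
```

The real sequence still includes the stop, nsr/tmp clean-up, start and post-upgrade test steps from the list above; the sketch only captures the version ordering.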

The enhancements

Working through the release notes, I’m categorising the NetWorker updates into 5 core categories:

- Architectural – Deep functionality and/or design changes to how NetWorker operates. This will likely also result in some changes of the types listed below, but the core detail of the change should be considered architectural.

- Interface – Changes to how you can interact with NetWorker. Usually this means changes to NMC.

- Operational – Changes which primarily affect backup and recovery operations, or may represent a reduction in functionality between this and the previous version.

- Reporting – Changes to how NetWorker reports information, either in logs, the GUI, or via the command line.

- Security – Changes that directly affect or relate to system security.

For the most part, documentation updates have been limited to updates based on the changes in the software, so I’m disinclined to treat that as a separate category of updates in and of itself.

Architectural Changes

As you may imagine with a major version change, this area saw, in my opinion, the most and the biggest changes to NetWorker for quite some time.

When NetWorker 7.0 came out, the implementation of AFTD was a huge change compared to the old file-type devices. We finally had disk backup that behaved like disk, and many long-term NetWorker users, myself included, were excited about the potential it offered.

Yet, as the years went on, the limitations of the AFTD architecture caused just as much angst as the improvements had solved. While the read-write standard volume and read-only shadow volume implementation were useful, they were in actual fact just trickery to get around read-write concurrency. There were also several other issues, most notably the inability to have savesets move from one disk backup unit to another if an AFTD volume filled, and the perennial problem of not being able to simultaneously clone (or stage) from a volume whilst recovering from it.

Now, if you’re looking through the release notes and hoping to see reference to savesets being able to fill one disk backup unit and continue seamlessly onto another disk backup unit, you’re in for a shock: it’s not there. If like me, you’ve been waiting quite a long time for that feature to arrive, you’re probably as disappointed as I was when I participated in the Alpha for NetWorker 8. However, all is not lost! The reason it’s not there is that it’s no longer required at all.

Consider why we wanted savesets that fill one AFTD volume to fail over to another. Typically it was because:

- Due to inability to have concurrent clone/read operations, data couldn’t be staged from them fast enough;

- AFTDs did not play well with each other when more than one device shared the same underlying disk;

- Backup performance was insufficient when writing a large number of savesets to a single very large disk backup unit.

In essence, if you had 10TB of disk space (formatted) to present to NetWorker for disk backup, a “best practice” configuration would have typically seen this presented as 5 x 2TB filesystems, and one AFTD created per filesystem. So, we were all clamouring for saveset continuation options because of the underlying design.

With version 8, the underlying design has been changed. It’s likely going to necessitate a phased transition of your disk backup capacity if you’re currently using AFTDs, but the benefits are very likely to outweigh that inconvenience. The new design allows for multiple nsrmmd processes to concurrently access (read and write) an AFTD. Revisiting that sample 10TB disk backup allocation, you’d go from 5 x 2TB filesystems to a single 10TB filesystem instead, while being able to simultaneously backup, clone, recover, stage, and do it more efficiently.
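To see why the old layout had everyone asking for saveset continuation, here is a toy model of the same 10TB carved up both ways. The fill level and saveset size are made-up numbers, and `can_place` is a hypothetical helper that models only the "a saveset can't move to another volume once its AFTD fills" constraint.

```python
from typing import List


def can_place(saveset_tb: float, free_per_volume_tb: List[float]) -> bool:
    """True if a single volume has room for the whole saveset.

    Models the pre-v8 constraint that a saveset cannot continue onto
    another AFTD volume once the one it is writing to fills.
    """
    return any(free >= saveset_tb for free in free_per_volume_tb)


# Same 10TB of disk, 75% full, carved up two different ways (illustrative numbers).
five_by_two = [0.5] * 5   # 5 x 2TB filesystems, 0.5TB free on each
one_by_ten = [2.5]        # 1 x 10TB filesystem, 2.5TB free in total

saveset = 1.0  # TB
print(can_place(saveset, five_by_two))  # False
print(can_place(saveset, one_by_ten))   # True
```

In both cases there is 2.5TB free overall; only the single large filesystem can actually take the 1TB saveset.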

The changes here are big enough that I’ll post a deep dive article specifically on it within the next 24 hours. For now, I’ll move on.

Something I didn’t pick up during the alpha and beta testing relates to client read performance – previous versions of NetWorker always read chunks of data from the client at a fixed, 64KB size. (Note, this isn’t related to the device write block size.) You can imagine that a decade or so ago, this would have been a good middle ground in terms of efficiency. However, these days clients in an enterprise backup environment are just as likely to be powerhouse machines attached to high speed networking and high speed storage. So now, a NetWorker client will dynamically alter the block size it uses to read data, based on an ongoing NetWorker measurement of its performance.

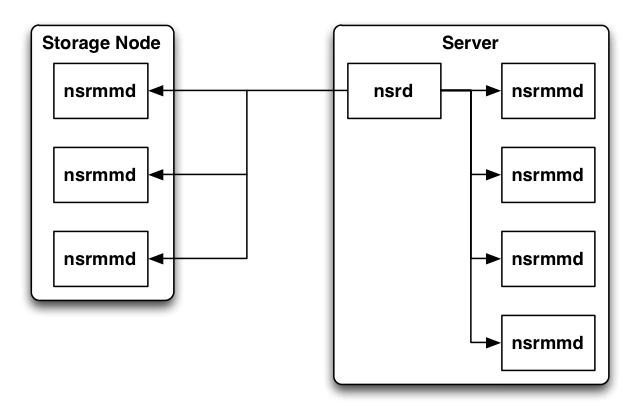

NetWorker interprocess communications have been improved where devices are concerned. For version 7.x and lower, the NetWorker server daemon (nsrd) has always been directly responsible for communicating with and controlling individual nsrmmd processes, either locally or on storage nodes. As the number of devices increases within an environment, this has seen a considerable load placed on the backup server – particularly during highly busy times. In simple terms, the communication has looked like this:

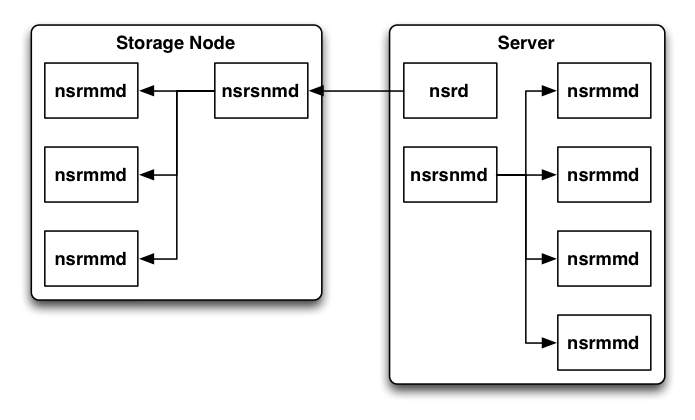

While no doubt simpler to manage, this has resulted in nsrd having to service a lot more interrupts, reducing its efficiency, and thereby reducing the efficiency of the overall backup environment. So, as of v8, a new daemon has been introduced – nsrsnmd, which is a storage node media multiplexor daemon manager. The communications path now sees nsrmmd processes on each storage node managed by a local nsrsnmd, which in turn receives overall instruction from the NetWorker server. The benefit of this is that nsrd now has far fewer interruptions, particularly in larger environments, to deal with:

Undoubtedly, larger datazones will see benefit from this change.
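A rough way to picture the difference is to count how many processes nsrd has to deal with directly under each model. The storage node names and nsrmmd counts below are invented for the example, and `nsrd_control_channels` is a hypothetical function, not anything NetWorker ships.

```python
from typing import Dict


def nsrd_control_channels(mmds_per_node: Dict[str, int], use_nsrsnmd: bool) -> int:
    """Count the processes nsrd must talk to directly for device control."""
    if use_nsrsnmd:
        # v8 model: one nsrsnmd per storage node fronts that node's nsrmmd processes.
        return len(mmds_per_node)
    # 7.x model: nsrd manages every nsrmmd itself.
    return sum(mmds_per_node.values())


# Hypothetical datazone: three storage nodes with a mix of device counts.
datazone = {"sn1": 16, "sn2": 16, "sn3": 8}

print(nsrd_control_channels(datazone, use_nsrsnmd=False))  # 40
print(nsrd_control_channels(datazone, use_nsrsnmd=True))   # 3
```

The count under v8 grows with the number of storage nodes rather than the number of devices, which is why the gain is biggest in large environments.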

The client direct feature has been expanded considerably in NetWorker 8. You can nominate a client to do client-direct backups, update the path details for an AFTD to include the client pathname to the device, and the client can subsequently write directly to the AFTD itself. This, too, I’ll cover more in the deep-dive on AFTD changes. By the way, that isn’t just for AFTDs – if you’ve got a Data Domain Boost system available, the Client Direct attribute equally works there, too. Previously this had been pushed out to NetWorker storage nodes – now it extends to the end clients.

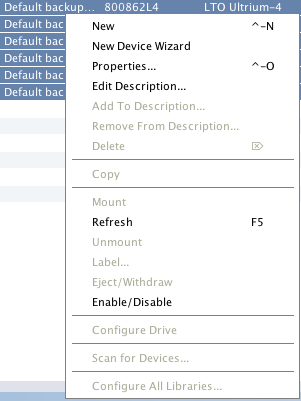

There’s some useful changes for storage nodes now – you can control at the storage node level whether the system is enabled or disabled: much easier than say, enabling or disabling all the devices attached to the storage node. Further, you can now specify, at the storage node level, what are the clone storage nodes for backups on that storage node. In short: configuration and maintenance relating to storage nodes has become less fiddly.

The previous consolidated backup level has been thrown away and rewritten as synthetic fulls. A bit like source based deduplication this can hide real issues – i.e., while it makes it faster to achieve a full backup, it doesn’t necessarily improve recovery performance (aside from reducing the number of backups required to achieve a recovery.) I’ll do a deep dive on synthetic fulls, too – though in a couple of weeks.

An architectural change I disagree with: while not many of us used ConnectEMC, it appears to be going away (though is still technically present in NetWorker), and is now replaced by a “Report Home” functionality. That, I don’t mind. What I do mind is that it is enabled by default. Yes, your server will automatically email a third party company (i.e., EMC) when a particular fault occurs in NetWorker under v8 and higher. Companies that have rigorous security requirements will undoubtedly be less than pleased over this. There is however, a way to disable it, though not as I’ve noticed as yet, from within NMC. From within nsradmin, you’ll find a resource type called “NSR report home”; change the email address for this from NetWorkerProfile@emc.com to an internal email address for your own company, if you have concerns. (E.g., Sunday morning, just past, at 8am: I just had an entire copy of my lab server’s configuration database emailed to me, having changed the email address. I would argue that this feature, being turned on by default, is a potential security issue.)

A long-term nice to have change is the introduction of a daemon environment file. Previously it was necessary to establish system-wide environment variables, which were easy to forget about – or, if on Unix, it was tempting to insert NetWorker specific environment variables into the NetWorker startup script (/etc/init.d/networker). However, if they were inserted there, they’d equally be lost when you upgraded NetWorker and forgot to save a copy of that file. That’s been changed now with a nsr/nsrrc file, which is read on daemon startup.

Home Base has been removed in NetWorker 8 – and why wouldn’t it? EMC has dropped the product. Personally I think this isn’t necessarily a great decision, and EMC will end up being marketed against for having made it. I think the smarter choice here would have been to either open source Home Base, or make it a free component of NetWorker and Avamar getting only minimal upgrades to remain compatible with new OS releases. While BMR is no longer as big a thing as it used to be before x86 virtualisation, Home Base supported more than just the x86 platform, and it seems like a short-sighted decision.

Something I’m sure will be popular in environments with a great many tape drives (physical or virtual) is that preference is now given in recovery situations towards loading volumes for recovery into read-only devices if they’re available. It’s always been a good idea when you have a strong presence of tape in an environment to keep at least one drive marked as read-only so it’s free for recoveries, but NetWorker would just as likely load a recovery volume into a read-write enabled device. No big deal, unless it then disrupts backups. That should happen less often now.

There’s also a few minor changes too – the SQL Anywhere release included with NMC has been upgraded, as has Apache, and in what will be a boon to companies that have NetWorker running for very long periods of time without a shutdown: the daemon.raw log can now be rolled in real-time, rather than on daemon restart. Finally on the architectural front, Linux gets a boost in terms of support for persistent named paths to devices: there can now be more than 1024 of them. I don’t think this will have much impact for most companies, but clearly there’s at least one big customer of EMC who needed it…

Interface Changes

Work has commenced to allow NMC to have more granular control over backups. You can now right-click on a save session to stop it, regardless of whether it originates from a group that has been started from the command line or automatically. However, you still can’t (as yet) stop a manual save, nor can you stop a cloning session. More work needs to be done here – but it is a start. Given the discrepancies between what the release notes say you should be able to stop, and what NMC actually lets you stop, I expect we’ll see further changes on this in a patch or service pack for NetWorker 8.

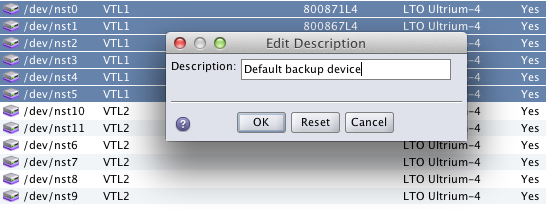

Multiple resource editing comes into NMC. You can select a bunch of resources, right-click on the column that you wish to change to the same value for each of them, and make the change to all at once:

While this may not seem like a big change, I’m sure there’s a lot of administrators who work chiefly in NMC that’ll be happy to see the above.

While I’ve not personally used the NetWorker Module for Microsoft Applications (NMM) much, everyone who does use it regularly says that it doesn’t necessarily become easier to use with time. Hopefully now, with NMM configuration options added to the New Client Wizard in NMC, NMM will become easier to deal with.

Operational Changes

There’s been a variety of changes around bare metal recovery. The most noticeable, as I mentioned under architecture, was the removal of EMC Home Base. However, EMC have introduced custom recovery ISOs for the x86 and x86_64 Windows platforms (Windows 7, Windows 2008 R2 onwards). Equally though, Windows XP/2003 ASR based recovery is no longer supported for NetWorker clients running NetWorker v8. However, given the age of these platforms I’d suggest it was time to start phasing out functionality anyway. While we’re talking about reduction in functionality, be advised that NMM 2.3 will not work with NetWorker 8 – you need to make sure that you upgrade your NMM installs first (or concurrently) to v2.4, which has also recently become available.

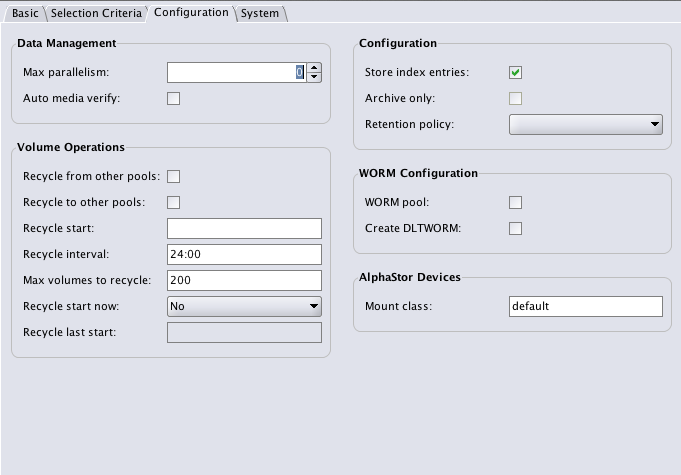

While NetWorker has always recycled volumes automatically (despite ongoing misconceptions about the purpose of auto media management), it does it on an as-needs basis, during operations that require writable media. This may not always be desirable for some organisations, who would like to see media freshly labeled and waiting for NetWorker when it comes time to backup or clone. This can now be scheduled as part of the pool settings:

Of course, as with anything to do with NetWorker pools, you can’t adjust the bootstrap pools, so if you want to use this functionality you should be using your own custom pools. (Or to be more specific: you should be using your own custom pools, no matter what.) When recycling occurs, standard volume label deletion/labelling details are logged, and a log message is produced at the start of the process along the lines of:

tara.pmdg.lab nsrd RAP notice 4 volumes will be recycled for pool `Tape' in jukebox `VTL1'.

NDMP doesn’t get left out – so long as you’ve entered the NDMP password details into a client resource, you can now select savesets by browsing the NDMP system, which will be a handy feature for some administrators. If you’re using NetApp filers, you get another NDMP benefit: checkpoint restarts are now supported on that platform.

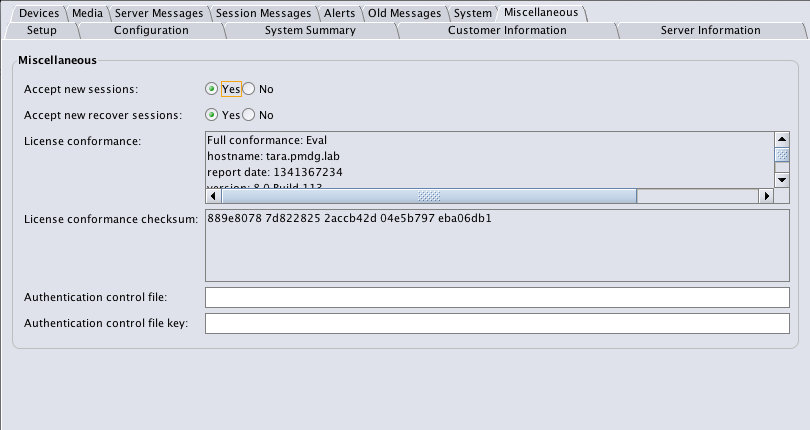

NetWorker now gets a maintenance mode – you can either tell NetWorker to stop accepting new backup sessions, new recovery sessions, or both. This is configured in the NetWorker server resource:

Even recently I’d suggested on the NetWorker mailing list that this wasn’t a hugely necessary feature, but plenty of other people disagreed, and they’ve convinced me of its relevance – particularly in larger NetWorker configurations.

For those doing NetWorker client side compression, you can all shout “Huzzah!”, for EMC have introduced new client side compression directives: gzip and bzip2, with the ability to specify the compression level as well. Never fear though – the old compressasm is still there, and the default. While using these new compression options can result in a longer backup at higher CPU utilisation, it can have a significant decrease in the amount of data to be sent across the wire, which will be of great benefit to anyone backing up through problematic firewalls or across WANs. I’ll have a follow-up article which outlines the options and results in the next day or so.
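If you want a feel for the CPU-versus-size trade-off before touching any directives, a quick experiment outside NetWorker will do. The sketch below uses Python’s standard `zlib` and `bz2` modules as stand-ins for the gzip and bzip2 algorithms; the sample data is invented, and nothing here reflects how the NetWorker directives themselves are written or how NetWorker calls the compressors.

```python
import bz2
import time
import zlib


def measure(name, compress, data):
    """Compress data once and return (label, compressed size, seconds taken)."""
    start = time.perf_counter()
    packed = compress(data)
    elapsed = time.perf_counter() - start
    return name, len(packed), elapsed


# Repetitive sample data standing in for a compressible file (an assumption).
data = b"2012-07-05 backup of /Volumes/Secure Store completed\n" * 20000

results = [
    measure("zlib level 1", lambda d: zlib.compress(d, 1), data),
    measure("zlib level 9", lambda d: zlib.compress(d, 9), data),
    measure("bz2 level 9", lambda d: bz2.compress(d, 9), data),
]
for name, size, elapsed in results:
    print(f"{name}: {len(data)} -> {size} bytes in {elapsed:.4f}s")
```

Real backup data will compress far less neatly than this, so treat the numbers as a way to compare algorithms and levels against each other rather than as a prediction.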

Reporting Changes

Probably the biggest potential reporting change in NetWorker 8 is the introduction of nsrscm_filter, an EMC provided savegroup filtering system. I’m yet to get my head around this – the documentation for it is minimal to the point where I wonder why it’s been included at all, and it looks like it’s been written by the developer for developers, rather than administrators. Sorry EMC, but for a tool to be fundamentally useful, it should be well documented. To be fair, I’m going through a period of high intensity work and not always of a mood to trawl through documentation when I’m done for the day, so maybe it’s not as difficult as it looks – but it shouldn’t look that difficult, either.

On the flip-side, checking (from the command line) what went on during a backup now is a lot simpler thanks to a new utility, nsrsgrpcomp. If you’ve ever spent time winnowing through /nsr/tmp/sg to dig out specific details for a savegroup, I think you’ll fall in love with this new utility:

    [root@nox ~]# nsrsgrpcomp
    Usage: nsrsgrpcomp [ -s server ] groupname
           nsrsgrpcomp [ -s server ] -L [ groupname ]
           nsrsgrpcomp [ -s server ] [ -HNaior ] [ -b num_bytes | -l num_lines ] [ -c clientname ] [ -n jobname ] [ -t start_time ] groupname
           nsrsgrpcomp [ -s server ] -R jobid

    [root@nox ~]# nsrsgrpcomp belle
    belle, Probe, "succeeded:Probe"
    * belle:Probe + PATH=/usr/gnu/bin:/usr/local/bin:/bin:/usr/bin:.:/bin:/sbin:/usr/sbin:/usr/bin
    * belle:Probe + CHKDIR=/nsr/bckchk
    * belle:Probe + README=
    <snip>
    * belle:/Volumes/Secure Store 90543:save: The save operation will be slower because the directory entry cache has been discarded.
    * belle:/Volumes/Secure Store belle: /Volumes/Secure Store level=incr, 16 MB 00:00:09 45 files
    * belle:/Volumes/Secure Store completed savetime=1341446557
    belle, index, "succeeded:9:index"
    * belle:index 86705:save: Successfully established DFA session with adv_file device for save-set ID '3388266923' (nox:index:aeb3cb67-00000004-4fdfcfec-4fdfcfeb-00011a00-3d2a4f4b).
    * belle:index nox: index:belle level=9, 29 MB 00:00:02 27 files
    * belle:index completed savetime=1341446571
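Because the output is plain text, it’s easy to post-process. The sketch below pulls the client, saveset and status out of the summary lines in the sample above. It assumes one record per line, with summary lines shaped like `client, saveset, "status:..."` and detail lines starting with `*`; that layout is inferred from my sample output rather than from any documented format, and `summarise` is a hypothetical helper.

```python
import re
from typing import Dict, List

# Abbreviated copy of the sample output shown above.
SAMPLE = '''belle, Probe, "succeeded:Probe"
* belle:Probe + CHKDIR=/nsr/bckchk
* belle:/Volumes/Secure Store belle: /Volumes/Secure Store level=incr, 16 MB 00:00:09 45 files
* belle:/Volumes/Secure Store completed savetime=1341446557
belle, index, "succeeded:9:index"
* belle:index nox: index:belle level=9, 29 MB 00:00:02 27 files
* belle:index completed savetime=1341446571
'''

# Summary lines look like: client, saveset, "status:..."
HEADER = re.compile(r'^(?P<client>[^,*]+), (?P<saveset>.+), "(?P<status>[^:"]+):')


def summarise(output: str) -> List[Dict[str, str]]:
    """Pull client, saveset and status from each summary line; skip detail lines."""
    rows = []
    for line in output.splitlines():
        match = HEADER.match(line)
        if match:
            rows.append(match.groupdict())
    return rows


for row in summarise(SAMPLE):
    print(row["client"], row["saveset"], row["status"])
```

In practice you would feed it the captured output of `nsrsgrpcomp groupname` instead of the embedded sample.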

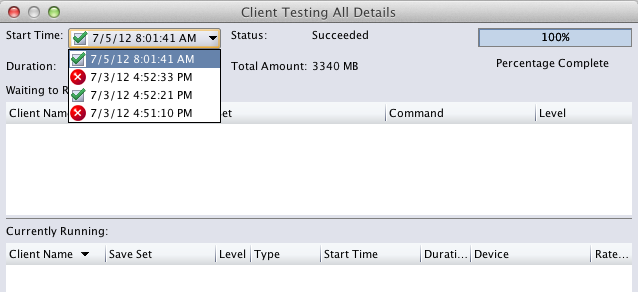

Similarly, within NMC, you can now view the notification for a group not only for the most recent group execution, but a previous one – very useful if you need to do a quick visual comparison between executions:

There’s a new notification for when a backup fails to start at the scheduled time – I haven’t played around with this yet, but it’s certainly something I know a lot of admins have been asking to see for quite a while.

I’ve not really played around with NLM license administration in NMC. I have to admit, I’m old-school here, and “grew up” using LLM from the command line with all its somewhat interesting command line options. So I can’t say what NMC used to offer, off-hand, but the release notes tell us that NMC now reports NLM license usage, which it certainly does.

Security Changes

Multi-tenancy has come to NetWorker v8. With it, you can have restricted datazones that give specified users access to a part of NetWorker, but not the overall setup. Unsurprisingly, it looks a lot like what I proposed in November 2009 in “Enhancing NetWorker Security: A theoretical architecture”. There’s only so many ways you could get this security model in place, and EMC obviously agreed with me on it. Except on the naming: I suggested a resource type called “NSR admin zone”; instead, it got named “NSR restricted data zone”. The EMC name may be more accurate, but mine was less verbose. (Unlike this blog post.)

I’d suggest while the aim is for multi-tenancy, at the moment the term operational isolation is probably a closer fit. There’s still a chunk of visibility across restricted datazones unless you lock down the user(s) in the restricted data zone to only being able to run an ad-hoc backup or request a recovery. A user in a restricted datazone, as soon as he/she has the ability to monitor the restricted datazone, for instance, can still run nsrwatch against the NetWorker server and see details about clients they should not have visibility on (e.g., “Client X has had NetWorker Y installed” style messages shouldn’t appear, when X is not in the restricted datazone.) I would say this will be resolved in time.

As such, my personal take on the current state of multi-tenancy within NetWorker is that it’s useful for the following situations:

- Where a company has to provide administrative access to subsets of the configuration to specific groups or individuals – e.g., in order for DBAs to agree to having their backups centralised, they may want the ability to edit the configuration for their hosts;

- Where a company intends to provide multi-tenancy backups and allow a customer to only be able to restore their data – no monitoring, no querying, just running the relevant recovery tool.

In the first scenario, it wouldn’t matter if an administrator of a restricted datazone can see details about hosts outside of his/her datazone; in the second instance, a user in a restricted datazone won’t get to see anything outside of their datazone, but it assumes the core administrators/operators of the NetWorker environment will handle all aspects of monitoring, maintaining and checking – and possibly even run more complicated recoveries for the customers themselves.

There’s been a variety of other security enhancements; there’s tighter integration on offer now between NetWorker and LDAP services, with the option to map LDAP user groups to NetWorker user groups. As part of the multi-tenancy design, there’s some additional user groups, too: Security Administrators, Application Administrators, and Database Administrators. The old “Administrators” user group goes away: for this reason you’ll need to make sure that your NMC server is also upgraded to NetWorker v8. On that front, nsrauth (i.e., strong authentication, not the legacy ‘oldauth’) authentication is now mandatory between the backup server and the NMC server when they’re on separate hosts.

Finally, if you’re using Linux in a secure environment, NetWorker now supports SELinux out of the box – while there’s been workarounds available for a while, they’ve not been officially sanctioned ones, so this makes an improvement in terms of support.

In Summary

No major release of software comes out without some quirks, so it would be disingenuous of me to suggest that NetWorker 8.0 doesn’t have any. For instance, I’m having highly variable success in getting NetWorker 8 to function correctly with OS X 10.7/Lion. On one Mac within my test environment, it’s worked flawlessly from the start. On another, after it installed, nsrexecd refused to start, but after 7.6.3.x was reinstalled and then upgraded to v8, it did successfully run for a few days. However, another reboot saw nsrexecd refusing to start, citing errors along the lines of “nsrexecd is already running under PID = <x>. Only one instance at a time can be run”, where the cited PID was a completely different process (ranging from Sophos to PostgreSQL to internal OS X processes). In other words, if you’re running a Mac OS X client, don’t upgrade without first making sure you’ve got the old client software also available: you may need it.

Yet, since every backup vendor ends up with some quirks in a dot-0 release (even those who cheat and give it a dot-5 release number), I don’t think you can hold this against NetWorker, and we have to consider the overall benefits brought to the table.

Overall, NetWorker 8 represents a huge overhaul from the NetWorker 7.x tree: significant AFTD changes, significant performance enhancements, the start of a fully integrated multi-tenancy design, better access to backup results, and changes to daemon architecture to allow the system to scale to even larger environments mean that it will be eagerly accepted by many organisations. My gut feel is that we’ll have a faster adoption of NetWorker 8 than the standard bell-curve introduction – even in areas where features are a little unpolished, the overall enhancements brought to the table will make it a very attractive upgrade proposition.

Over the coming couple of weeks, I’ll publish some deep dives on specific areas of NetWorker, and put some links at the bottom of this article so they’re all centrally accessible.

Should you upgrade? Well, I can’t answer that question: only you can. So, get to the release notes and the upgrade notes now that I’ve whetted your appetite, and you’ll be in a position to make an informed decision on it.

Deep Dives

The first deep dive is available: NetWorker 8 Advanced File Type Devices. (There’ll be a pause of a week or so before the next one, but I’ll have another, briefer article about some NetWorker 8 enhancements in a few days’ time.)

For an overview of the new synthetic full backup options in NetWorker 8, check out the article “NetWorker 8: Synthetic Fulls”.

Shorter Posts

A quick overview of the changes in client side compression.

“While using these new compression options can result in a longer backup at higher CPU utilisation, it can have a significant decrease in the amount of data to be sent across the wire . . . “. True, as will the use of the DDBoost device.

GREAT article; thanks, Preston!

Thanks for the feedback, Terry. I hope I’m providing some useful information on NW8 to readers.

Hi Preston

How did the upgrade go in your case, given that most recommendations are to uninstall and install from fresh?

Is the upgrade reliable in NW8?

Hi Thierry,

My upgrades from v7.6.[2,3] went fairly smoothly; since my servers were all Unix based for testing, yes, I went through an uninstall/reinstall – I can’t comment on Windows, I’m sorry.

Cheers.