As mentioned in my main NetWorker 8 introduction article, one of the biggest architectural enhancements in NetWorker v8 is a complete overhaul of the backup to disk architecture. This isn’t an update to AFTDs, it’s a complete restart – the architecture that was is no longer, and it’s been replaced by something newer, fresher, and more scalable.

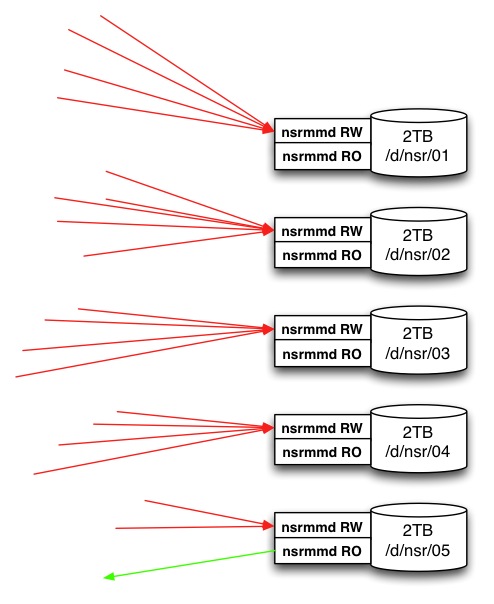

In order to understand what’s involved in the changes, we first need to step back and consider how the AFTD architecture works in NetWorker v7.x. For the purposes of my examples, I’m going to consider a theoretical 10TB of disk backup capacity available to a NetWorker server. Now, under v7.x, you’d typically end up with filesystems and AFTDs that look like the following:

(In the above diagram, and those to follow, a red line/arrow indicates a write session coming into the AFTD, and a green line/arrow indicates a read session coming out of the AFTD.)

That is, you’d slice and dice that theoretical 10TB of disk capacity into a bunch of smaller sized filesystems, with typically one AFTD per filesystem. In 7.x, there are two nsrmmd processes per AFTD – one to handle write operations (the main/real path to the AFTD), and one to handle read operations from the AFTD device – the shadow or _AF_readonly path on the volume.

So AFTDs under this scenario delivered simultaneous backup and recovery operations by a bit of sleight-of-hand; in some ways, NetWorker was tricked into thinking it was dealing with two different volumes. In fact, that’s what led to there being two instances of a saveset in the media database for any saveset created on AFTD – one for the read/write volume, and one for the read-only volume, with a clone ID of 1 less than the instance on the read/write volume. This didn’t double the storage; the “trick” was largely maintained in the media database, with just a few meta files maintained in the _AF_readonly path of the device.

Despite the improved backup options offered by v7.x AFTDs, there were several challenges introduced that somewhat limited the applicability of AFTDs in larger backup scenarios. Much as I’ve never particularly liked virtual tape libraries (seeing them as a solution to a problem that shouldn’t exist), I found myself typically recommending a VTL for disk backup in NetWorker ahead of AFTDs. The challenges in particular, as I saw them, were:

- Limits on concurrency between staging, cloning and recovery from AFTDs meant that businesses often struggled to clone and reclaim space non-disruptively. Despite the inherent risks, this led to many decisions not to clone data first, meaning only one copy was ever kept;

- Because of those limits, disk backup units would need to be sliced into smaller allotments – such as the 5 x 2TB devices cited in the above diagram, so that space reclamation would be for smaller, more discrete chunks of data, but spread across more devices simultaneously;

- A saveset could never exceed the amount of free space on an AFTD – NetWorker doesn’t support continuing a saveset from one full AFTD to another;

- Larger savesets would be manually sliced up by the administrator to fit on an AFTD, introducing human error, or would be sent direct to tape, potentially introducing shoe-shining back into the backup configuration.

As a result of this, a lot of AFTD layouts saw them being used more as glorified staging units than as a source of significant near-line recoverability.

Another, more subtle problem from this architecture was that nsrmmd itself is not a process geared towards a high amount of concurrency; while based on its name (media multiplexor daemon) we know that it’s always been designed to deal with a certain number of concurrent streams for tape based multiplexing, there are limits to how many savesets an nsrmmd process can handle simultaneously before it starts to dip in efficiency. This was never so much an issue with physical tape – as most administrators would agree, using multiplexing above 4 for any physical tape will continue to work, but may result in backups which are much slower to recover from if a full filesystem/saveset recovery is required, rather than a small random chunk of data.

NetWorker administrators who have been using AFTD for a while will equally agree that pointing a large number of savesets at an AFTD doesn’t guarantee high performance – while disk doesn’t shoe-shine, the drop-off in nsrmmd efficiency per saveset would start to be quite noticeable at a saveset concurrency of around 8 if client and network performance were not the bottleneck in the environment.

This further encouraged slicing and dicing disk backup capacity – why allocate 10TB of capacity to a single AFTD if you’d get better concurrency out of 5 x 2TB AFTDs? To minimise the risk of any individual AFTD filling while others still had capacity, you’d configure the AFTDs to each have target sessions of 1 – effectively round-robining the starting of savesets across all the units.

I think that provides a good enough overview of AFTDs under v7.x that I can now talk about AFTDs in NetWorker v8.

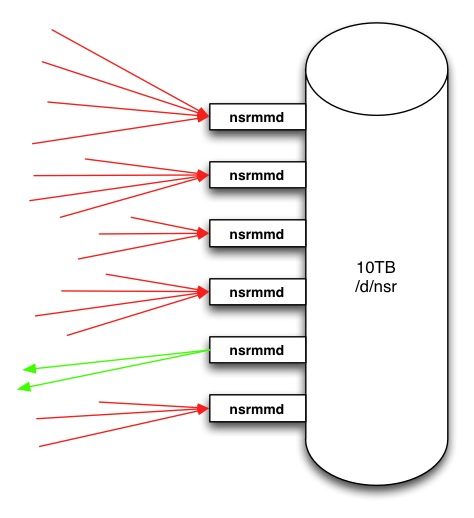

Keeping that 10TB of disk backup capacity in play, under NetWorker v8, you’d optimally end up with the following configuration:

You’re seeing that right – multiple nsrmmd processes for a single AFTD – and I don’t mean a read/write nsrmmd and a shadow-volume read-only nsrmmd as per v7.x. In fact, the entire concept and implementation of the shadow volume goes away under NetWorker v8. It’s not needed any longer. Huzzah!

Assuming dynamic nsrmmd spawning (yes), and up to certain limits, NetWorker will now spawn one nsrmmd process for an AFTD each time it hits the target sessions setting for the AFTD volume. That raises one immediate change – for a v8.x AFTD configuration, bump up the target sessions for the devices. Otherwise you’ll end up spawning a lot more nsrmmd processes than are appropriate. Based on feedback from EMC, it would seem that the optimum target sessions setting for a consolidated AFTD is 4. Assuming linear growth, this would mean that you’d have the following spawning rate:

- 1 saveset, 1 x nsrmmd

- 2 savesets, 1 x nsrmmd

- 3 savesets, 1 x nsrmmd

- 4 savesets, 1 x nsrmmd

- 5 savesets, 2 x nsrmmd

- 6 savesets, 2 x nsrmmd

- 7 savesets, 2 x nsrmmd

- 8 savesets, 2 x nsrmmd

- 9 savesets, 3 x nsrmmd
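The spawning rate listed above is a ceiling division of concurrent savesets by the target sessions value. Here is a minimal sketch of that arithmetic, assuming strictly linear growth and ignoring both the upper limits NetWorker applies and any nsrmmd processes taken up by cloning, staging or recovery. The function name is purely illustrative and is not a NetWorker interface.

```python
import math


def nsrmmd_count(savesets: int, target_sessions: int = 4) -> int:
    """Estimate nsrmmd processes for one AFTD under a linear-growth assumption."""
    if savesets < 0 or target_sessions < 1:
        raise ValueError("savesets must be >= 0 and target_sessions >= 1")
    # One nsrmmd per full or partial block of `target_sessions` savesets.
    return math.ceil(savesets / target_sessions)


if __name__ == "__main__":
    for n in range(1, 10):
        print(f"{n} savesets, {nsrmmd_count(n)} x nsrmmd")
```

Dropping target sessions back to 1 in the same function shows why the old v7.x habit is a poor fit here: nine savesets would ask for nine nsrmmd processes rather than three.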

Your actual rate may vary of course, depending on cloning, staging and recovery operations also being performed at the same time. Indeed, for the time being at least, NetWorker dedicates a single nsrmmd process to any nsrclone or nsrstage operation that is run (either manually or as a scheduled task), and yes, you can actually simultaneously recover, stage and clone all at the same time. NetWorker handles staging in that equation by blocking capacity reclamation while a process is reading from the AFTD – this prevents a staging operation removing a saveset that is needed for recovery or cloning. In this situation, a staging operation will report as per:

[root@tara usr]# nsrstage -b Default -v -m -S 4244365627

Obtaining media database information on server tara.pmdg.lab

80470:nsrstage: Following volumes are needed for cloning

80471:nsrstage: AFTD-B-1 (Regular)

5874:nsrstage: Automatically copying save sets(s) to other volume(s)

79633:nsrstage:

Starting migration operation for Regular save sets...

6217:nsrstage: ...from storage node: tara.pmdg.lab

81542:nsrstage: Successfully cloned all requested Regular save sets (with new cloneid)

4244365627/1341909730

79629:nsrstage: Clones were written to the following volume(s) for Regular save sets:

800941L4

6359:nsrstage: Deleting the successfully cloned save set 4244365627

Recovering space from volume 15867081 failed with the error 'volume

mounted on ADV_FILE disk AFTD1 is reading'.

Refer to the NetWorker log for details.

89216:nsrd: volume mounted on ADV_FILE disk AFTD1 is reading

When a space reclamation is subsequently run (either as part of overnight reclamation, or an explicit execution of nsrim -X), something along the following lines will get logged:

nsrd NSR info Index Notice: nsrim has finished crosschecking the media db

nsrd NSR info Media Info: No space was recovered from device AFTD2 since there was no saveset eligible for deletion.

nsrd NSR info Media Info: No space was recovered from device AFTD4 since there was no saveset eligible for deletion.

nsrd NSR info Media Info: Deleted 87 MB from save set 4244365627 on volume AFTD-B-1

nsrd NSR info Media Info: Recovered 87 MB by deleting 1 savesets from device AFTD1.

nsrsnmd NSR warning volume (AFTD-B-1) size is now set to 2868 MB

Thus, you shouldn’t need to worry about concurrency any longer.

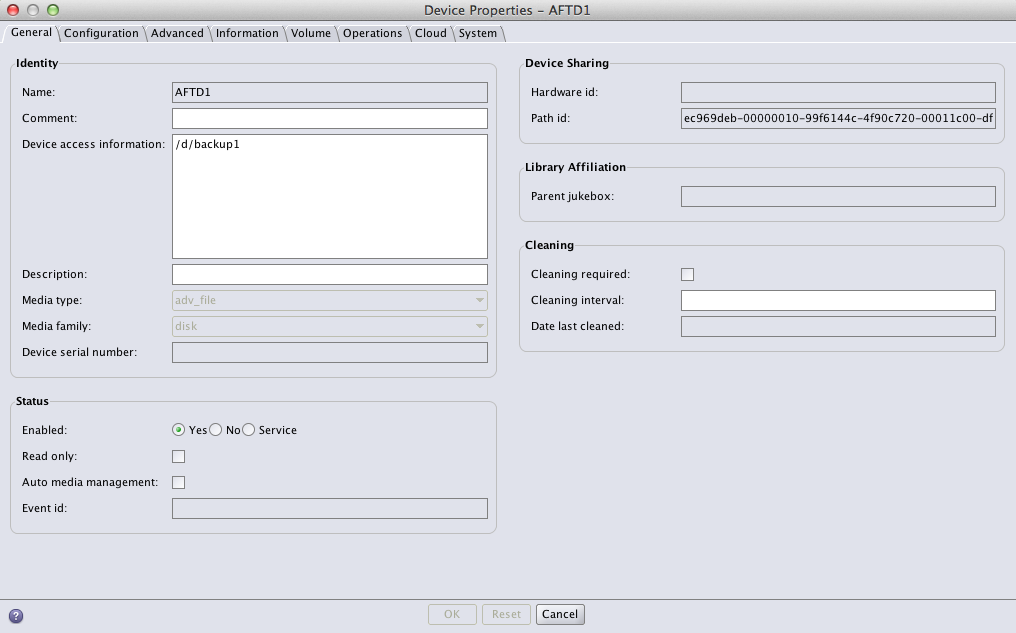

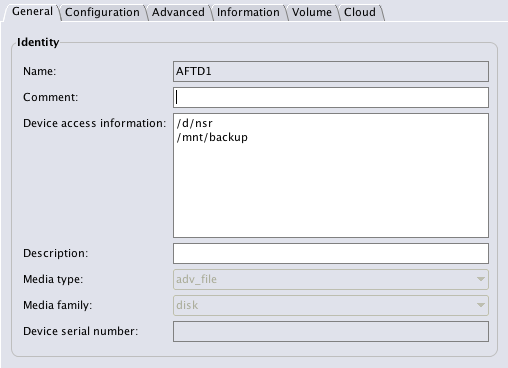

Another change introduced to the AFTD architecture is that the device name is now divorced from the path, which is stored in a separate field. For example:

In the above example, the AFTD device has a device name of AFTD1, and the path to it is /d/backup1 – or to be more accurate, the first path to it is /d/backup1. I’ll get to the real import of that in a moment.

There is a simpler management benefit: previously if you set up an AFTD and needed to later change the path that it was mounted from, you had to do the following:

- Unmount the disk backup unit within NetWorker

- Delete the AFTD definition within NetWorker

- Create a new AFTD definition pointing to the new location

- At the OS, remount the AFTD filesystem at the new location

- Mount the AFTD volume from its new location in NetWorker

This was a tedious process, and the notion of “deleting” an AFTD, even though it had no bearing on the actual data stored on it, did not appeal to a lot of administrators.

However, the first path specified to an AFTD in the “Device Access Information” field refers to the mount point on the owner storage node, so under v8, all you’ve got to do in order to relocate the AFTD is:

- Unmount the disk backup unit within NetWorker.

- Remount the AFTD filesystem in its new location.

- Adjust the first entry in the “Device Access Information” field.

- Remount the AFTD volume in its new location.

This may not seem like a big change, but it’s both useful and more logical. Obviously another benefit of this is that you no longer have to remember device paths when performing manual nsrmm operations against AFTDs – you just specify the device name. So your nsrmm commands would go from:

# nsrmm -u -f /d/backup1

to, in the above example:

# nsrmm -u -f AFTD1

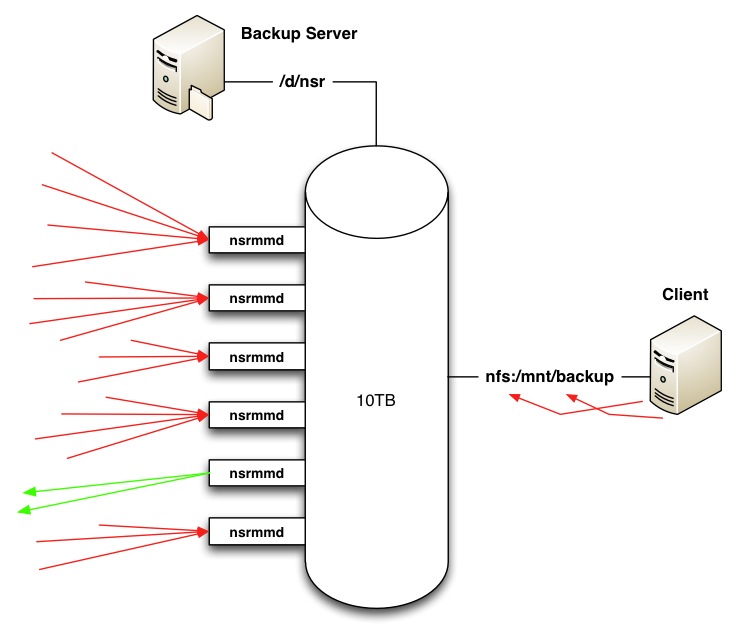

The real benefit of this though is what I’ve been alluding to by talking about the first mount point specified. You can specify alternate mount points for the device. However, these aren’t additional volumes on the server – they’re mount points as seen by clients. This allows a bypass of using nsrmmd to perform a write to a disk backup unit from the client, and instead sees the client write via whatever operating system mount mechanism it uses (CIFS or NFS).

In this configuration, your disk backup environment can start to look like the following:

You may be wondering what advantage this offers, given the backup is still written across the network – well, past an initial negotiation with nsrmmd on the storage node/server for the file to write to, the client then handles the rest of the write itself, not bothering nsrmmd again. In other words – so long as the performance of the underlying filesystem can scale, the number of streams you can write to an AFTD can expand beyond the maximum number of nsrmmd processes an AFTD or storage node can handle.

At this point, the “Device Access Information” is used as a multi-line field, with each subsequent line representing an alternate path the AFTD is visible at:

So, the backup process will work such that if the pool allows the specified AFTD to be used for backup, and the AFTD volume is visible to a client with “Client direct” setting enabled (a new option in the Client resource), on one of the paths specified, then the client will negotiate access to the device through a device-owner nsrmmd process, and go on to write the backup itself.
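The conditions in that paragraph can be laid out as a small decision function. This is only a model of the description above, not NetWorker code: the class names, field names and the /mnt/aftd1 client mount point are all hypothetical, and the real negotiation with the device-owner nsrmmd involves more than a path lookup.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Aftd:
    name: str
    pool: str
    # Models the multi-line "Device Access Information" field: the first entry
    # is the mount point on the owning storage node, later entries are the
    # alternate paths at which clients may see the same filesystem.
    access_paths: list[str] = field(default_factory=list)


@dataclass
class Client:
    name: str
    client_direct: bool
    mounted_paths: set[str] = field(default_factory=set)


def write_path(client: Client, device: Aftd, backup_pool: str) -> str:
    """Return how this model expects the client's backup to reach the device."""
    if device.pool != backup_pool:
        return "not eligible"
    visible = any(path in client.mounted_paths for path in device.access_paths)
    if client.client_direct and visible:
        # The client still negotiates once with a device-owner nsrmmd,
        # then writes the backup itself.
        return "client direct"
    return "via nsrmmd"


if __name__ == "__main__":
    aftd1 = Aftd("AFTD1", "Default", ["/d/backup1", "/mnt/aftd1"])
    nimrod = Client("nimrod", client_direct=True, mounted_paths={"/mnt/aftd1"})
    print(write_path(nimrod, aftd1, "Default"))
```

A client with the option disabled, or with none of the listed paths mounted, falls back to the ordinary nsrmmd write path in this model.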

Note that this isn’t designed for sharing AFTDs between storage nodes – just between a server/storage node and clients.

Also, in case you’re skeptical, if your OS supports gathering statistics from the network-mount mechanism in use, you can fairly readily see whether NetWorker is honouring the direct access option. For instance, in the above configuration, I had a client called ‘nimrod’ mounting the disk backup unit via NFS from the backup server; before the backup started on a new, fresh mount, nfsstat showed no activity. After the backup, nfsstat yielded the following:

[root@nimrod ~]# nfsstat

Client rpc stats:

calls retrans authrefrsh

834 0 0

Client nfs v3:

null getattr setattr lookup access readlink

0 0% 7 0% 3 0% 4 0% 9 1% 0 0%

read write create mkdir symlink mknod

0 0% 790 94% 1 0% 0 0% 0 0% 0 0%

remove rmdir rename link readdir readdirplus

1 0% 0 0% 0 0% 0 0% 0 0% 0 0%

fsstat fsinfo pathconf commit

10 1% 2 0% 0 0% 6 0%
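To make the point of those numbers explicit, the per-operation counts can be totalled to show how much of the NFS traffic from the client was write activity. The sketch below simply re-keys the counters from the nfsstat output above into a dictionary; it does not run or parse nfsstat itself.

```python
# Per-operation NFS v3 client counters, copied from the nfsstat output above.
nfs_ops = {
    "null": 0, "getattr": 7, "setattr": 3, "lookup": 4, "access": 9,
    "readlink": 0, "read": 0, "write": 790, "create": 1, "mkdir": 0,
    "symlink": 0, "mknod": 0, "remove": 1, "rmdir": 0, "rename": 0,
    "link": 0, "readdir": 0, "readdirplus": 0, "fsstat": 10, "fsinfo": 2,
    "pathconf": 0, "commit": 6,
}

total_ops = sum(nfs_ops.values())
write_share = nfs_ops["write"] / total_ops

print(f"write: {nfs_ops['write']} of {total_ops} NFS operations ({write_share:.1%})")
print(f"read:  {nfs_ops['read']} operations")
```

Almost everything the client sent over the fresh mount was a write and nothing was read back, which is what you would expect if the client is writing the backup directly rather than handing it to nsrmmd.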

NetWorker also reports in the savegroup completion report that a client successfully negotiated direct file access (DFA) for a backup, too:

* nimrod:All savefs nimrod: succeeded.

* nimrod:/boot 86705:save: Successfully established DFA session with adv_file device for save-set ID '1694225141' (nimrod:/boot).

V nimrod: /boot level=5, 0 KB 00:00:01 0 files

* nimrod:/boot completed savetime=1341899894

* nimrod:/ 86705:save: Successfully established DFA session with adv_file device for save-set ID '1677447928' (nimrod:/).

V nimrod: / level=5, 25 MB 00:01:16 327 files

* nimrod:/ completed savetime=1341899897

* nimrod:index 86705:save: Successfully established DFA session with adv_file device for save-set ID '1660670793' (nox:index:65768fd6-00000004-4fdfcfef-4fdfd013-00041a00-3d2a4f4b).

nox: index:nimrod level=5, 397 KB 00:00:00 25 files

* nimrod:index completed savetime=1341903689

Finally, logging is done in the server daemon.raw to also indicate that a DFA save has been negotiated:

91787 07/10/2012 05:00:05 PM 1 5 0 1626601168 4832 0 nox nsrmmd NSR notice Save-set ID '1694225141' (nimrod:/boot) is using direct file save with adv_file device 'AFTD1'.
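If you want to audit which savesets actually went via DFA, a log line of that shape is easy to pick apart. This is a minimal sketch that assumes the log has already been rendered to text with messages worded exactly like the one above; the regular expression and function name are my own, and other NetWorker versions may phrase the message differently.

```python
import re

# Matches the "direct file save" notice as it appears in the example line.
DFA_NOTICE = re.compile(
    r"Save-set ID '(?P<ssid>\d+)' \((?P<saveset>[^)]+)\) "
    r"is using direct file save with adv_file device '(?P<device>[^']+)'"
)


def parse_dfa_notice(line: str):
    """Return (ssid, saveset, device) if the line is a DFA notice, else None."""
    match = DFA_NOTICE.search(line)
    if match is None:
        return None
    return match.group("ssid"), match.group("saveset"), match.group("device")


if __name__ == "__main__":
    sample = (
        "91787 07/10/2012 05:00:05 PM 1 5 0 1626601168 4832 0 nox nsrmmd "
        "NSR notice Save-set ID '1694225141' (nimrod:/boot) is using direct "
        "file save with adv_file device 'AFTD1'."
    )
    print(parse_dfa_notice(sample))
```

Lines that are not DFA notices return None, so the function can be run over a whole rendered log and the non-matching lines filtered out.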

The net result of all these architectural changes to AFTDs is a significant improvement over v7.x AFTD handling, performance and efficiency.

(As noted previously though, this doesn’t apply just to AFTDs, for what it’s worth – that client direct functionality also applies to Data Domain Boost devices, which allows a Data Domain system to integrate even more closely into a NetWorker environment. Scaling, scaling, scaling: it’s all about scaling.)

In order to get the full benefit, I believe sites currently using AFTDs will probably go through the most pain; those who have been using VTLs may have a cost involved in the transition, but they’ll be able to transition to an optimal architecture almost immediately. However, sites with multiple smaller AFTDs won’t see the full benefits of the new architecture until they redesign their backup to disk environment, increasing the size of backup volumes. That being said, the change pain will be worth it for the enhancements received.

Great Article. May I suggest you to put all of your NSR 8 articles within a single doc/pdf file ?

Continue going on !

Thierry

Thanks for the suggestion, Thierry; I’ll keep that in mind.

Hello,

I found this in NetWorker v8 admin guide :

AFTD concurrent operations and device formats

The following operations can be performed concurrently on a single storage node with an AFTD:

- Multiple backups and multiple recover operations

- Multiple backups and one manual clone operation

- Multiple backups and one automatic or manual staging operation

so, question is, can we have multiple automatic clone operations on an AFTD ?

Hi Thierry,

I believe this is a documentation error. You can indeed run multiple clones – the concurrency effectively refers to per nsrmmd, and you can have multiple nsrmmd’s per device.

For instance, here’s 4-way cloning from one AFTD:

Cheers,

Preston.

Good article

one question:

“2.Remount the AFTD filesystem in its new location.”

What does this mean? How to do that?

I’m referring to whatever operating system commands or actions you use to unmount a filesystem from one location and mount it in another location.

Hello Preston,

I have a question regarding DDBOOST device sharing with Networker 8.

We are currently sharing the DDBOOST device between our 2 storage node, is it fine to use it like that or not?

(Note: We have 2 Datacenter which has 2 Storage nodes in each DC which is also use as proxy servers for Vadp backups.)

ex: we have say one ddboost device created with name “vmware”, this device is mapped to both the storage node STG1 and STG2. The above storage node is also acting as a proxy for vadp backups.

We are running vmware backups on this pool. Some runs from One storage node and some runs from other.

so whenever backup runs its use same volume for our Vmware backups.( its a part of same pool)

We have a similar setup for other groups (the same volume is shared, or say mapped, to both the storage nodes).

Regards,

Mangesh

I would not share DDBOOST devices between storage nodes – instead, create at least one unique DDBOOST device per storage node.

Hi

How can the staging be spread out to several tape devices now in NW 8, before I used about the same AFTD’s as I had tape devices.

Is it wise to create several ones even now ?

/Lars

NW8 supports reading multiple streams simultaneously from an AFTD, even for cloning and staging streams. Just stage/clone different data (e.g., by group, or client, etc.)