The caution about keeping all of one’s eggs in one basket is a fairly common one.

It’s also a fairly sensible one; after all, eggs are fragile things and putting all of them into a single basket without protection is not necessarily a good thing.

Yet, there’s an area of backup where many smaller companies easily forget the lesson of eggs-in-baskets, and that area is deduplication.

The mistake made is assuming there’s no need for replication. After all, no matter what the deduplication system, there’s RAID protection, right? Looking just at EMC, with either Avamar or Data Domain, you can’t deploy the systems without RAID*.

As we all know, RAID doesn’t protect you from accidental deletion of data. In mirrored terms, a file deletion isn’t even committed until it has completed on both sides of the mirror, so the mirror faithfully removes both copies. It’s the same for every other RAID level.

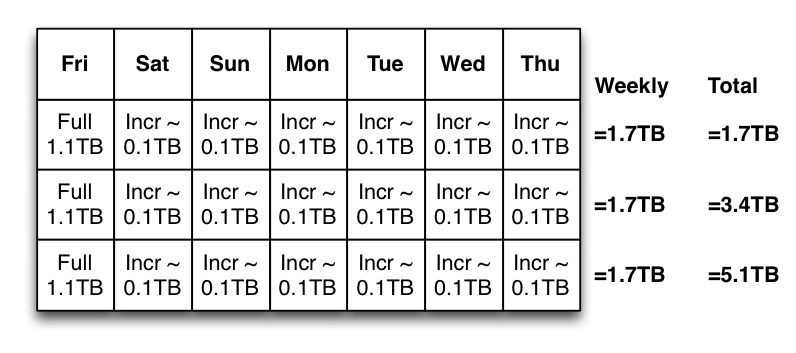

Yet deduplication is potentially very much like putting all one’s eggs in one basket when compared to conventional storage of backups. Consider the following scenario in a non-deduplication environment:

In this scenario, imagine you’re doing a full backup of 1.1TB once a week, and incrementals on all other days, with each incremental averaging around 0.1TB. So at the end of each week you’ll have backed up 1.7TB (one 1.1TB full plus six 0.1TB incrementals). However, you keep multiple backups over the retention period, so those backups add up, week after week, until after just 3 weeks you’re storing 5.1TB of backup.

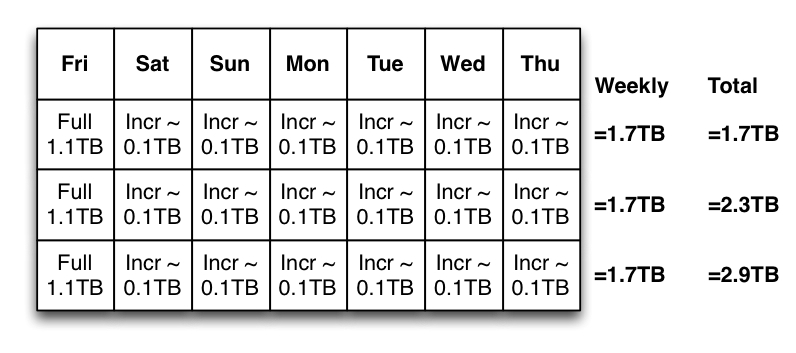

Now, keeping the same model, imagine a similar scenario but with deduplication involved (and not accounting for any deduplication occurring within any individual backup):

Again, I’m keeping things really simple here, and the numbers don’t necessarily correspond to a real-world model. However, while each week may see 1.7TB backed up, cumulatively, week after week, the amount of data stored by the deduplication system will be much lower: 1.7TB at the end of the first week, 2.3TB at the end of the second, and 2.9TB at the end of the third.

Cumulatively, where do those savings come from? By not storing extra copies of data. Deduplication is about eliminating redundancy.
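To make the arithmetic in the two scenarios concrete, here is a minimal sketch in Python. It assumes the simplified model above: one 1.1TB full and six 0.1TB incrementals per week, with every later full deduplicating entirely against data already stored and every incremental consisting of new data. The function names are hypothetical, and sizes are held in whole gigabytes (1TB taken as 1000GB) purely to avoid floating-point rounding.

```python
# Sizes in GB (assumption: 1 TB = 1000 GB) so the sums stay exact integers.
FULL_GB = 1100          # weekly full backup
INCR_GB = 100           # each daily incremental
INCRS_PER_WEEK = 6      # incrementals on the six non-full days


def stored_without_dedup(weeks: int) -> int:
    """Every full and every incremental is kept as its own copy."""
    return weeks * (FULL_GB + INCRS_PER_WEEK * INCR_GB)


def stored_with_dedup(weeks: int) -> int:
    """Simplified model: the full's data is stored once; after that only
    the incrementals contribute new, unique data."""
    return FULL_GB + weeks * INCRS_PER_WEEK * INCR_GB


for week in range(1, 4):
    plain = stored_without_dedup(week) / 1000
    dedup = stored_with_dedup(week) / 1000
    print(f"week {week}: {plain:.1f}TB without dedup, {dedup:.1f}TB with dedup")
```

The gap between the two totals after three weeks is exactly the redundant copies that the conventional approach would have kept and the deduplicated system does not.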

On a single system, deduplication is putting all your eggs in one basket. If you accidentally delete a backup (and it gets scrubbed in a housekeeping operation), or if the entire unit fails, it’s like dropping the basket. It’s not just one backup you lose, but all backups that referred to the specific data lost. It’s something that you’ve got to be much more careful about. Don’t treat RAID as a blank cheque.
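The "dropping the basket" effect can be sketched with a toy content-addressed store. This is an illustration only, not how Avamar, Data Domain or any other product is implemented: the `DedupStore` class, the chunk contents and the backup names are all hypothetical. Each unique chunk is stored once under its SHA-256 digest, and each backup is just a list of digests.

```python
import hashlib


class DedupStore:
    """Toy deduplicating store: one copy per unique chunk."""

    def __init__(self):
        self.chunks = {}    # digest -> chunk bytes, stored once
        self.backups = {}   # backup name -> ordered list of digests

    def write_backup(self, name, chunks):
        refs = []
        for chunk in chunks:
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # skip if already stored
            refs.append(digest)
        self.backups[name] = refs

    def is_recoverable(self, name):
        # A backup can be restored only if every chunk it refers to exists.
        return all(d in self.chunks for d in self.backups[name])


store = DedupStore()
base = [b"os-image", b"database", b"home-dirs"]
store.write_backup("week1-full", base)
store.write_backup("week2-full", base + [b"new-project"])
store.write_backup("week3-full", base + [b"new-project"])

# Simulate losing a single shared chunk (deletion, scrubbing, corruption).
del store.chunks[hashlib.sha256(b"database").hexdigest()]

broken = sorted(n for n in store.backups if not store.is_recoverable(n))
print(broken)
```

In this sketch, three weekly fulls occupy only four chunks, but losing one shared chunk leaves all three backups unrecoverable. A second, replicated store holding the same chunks would be the other basket.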

The solution?

It’s trivially simple, and it’s something every vendor and system integrator worth their salt will tell you: when you’re deduplicating, you must replicate (or clone, in a worst-case scenario), so you’re protected. You’ve got to start storing those twin eggs in another basket.

Cloning is of course important in non-deduplicated backups too, but if you’ve come from a non-deduplicated backup world, you’re used to having at least a patchy safety net. With multiple copies of most data generated, even in an uncloned situation, if a recovery from week 2 fails you might be able to go back to the week 3 backup and recover what you need, or at least enough to save the day.

The message is simple:

Deduplication = Replication

If you’re not replicating or otherwise similarly protecting your deduplication environment, you’re doing it wrong. You’ve put all your eggs in one basket, and forgotten that you can’t unbreak an egg.

—

\* Well, technically, you could probably sneak in an AVE deployment without RAID, but you’d be getting fairly desperate.