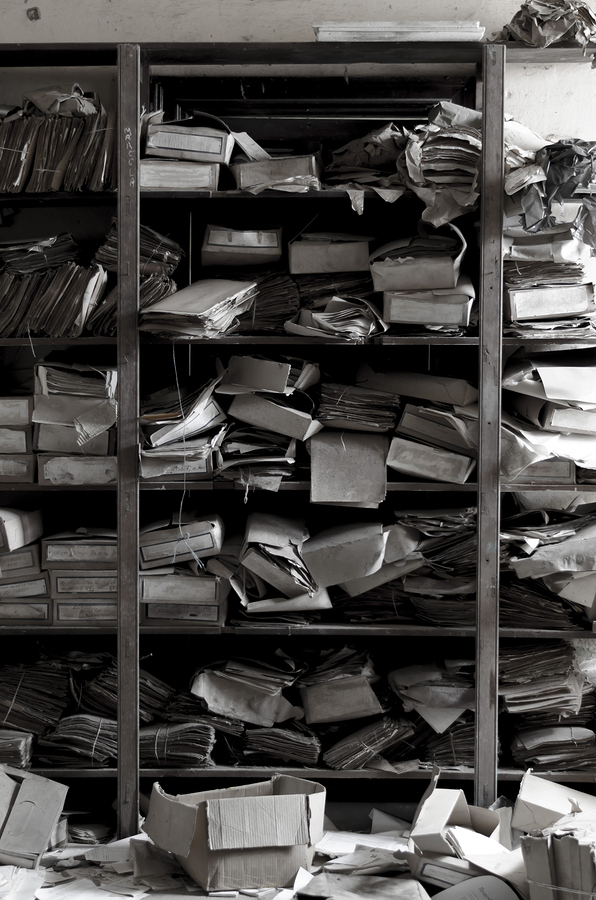

I’ve seen a lot of talk lately of data hoarding. Data can bring us new insights, new business opportunities, and…

Backup, archive and HSM are funny old concepts in technology and business. We’ve been doing backup for literally decades, and…

In Data Protection: Ensuring Data Availability, I talk quite a lot about what you need to understand and plan…

When I first started working with backup and recovery systems in 1996, one of the more frustrating statements I’d hear…

This is the fifth and final part of our four part series “Data Lifecycle Management”. (By slipping in an aside…

This is the third post in the four-part series, “Data lifecycle management”. The series started with “A basic lifecycle”,…

This is an adjunct post to the current series, “Data lifecycle management”, and is intended to provide a little more…

This is part 2 in the series, “Data Lifecycle Management”. Penny-wise data lifecycle management refers to a situation where companies…

I’m going to run a few posts about overall data management, and central to the notion of data management is…

My boss, on his blog, has raised a pertinent question – if it’s so important, according to some vendors, that…