In the various backup/data protection groups I hang around on, I periodically see the “I’m new to backup, what do…

Introduction What goes into making a backup? What are the traits that are essential in order for it to be something…

Years ago, back in the days when TV shows would take months, if not years, to appear in Australia after…

Another year rolls around, and I yet again find myself facing an onslaught of “world backup day” posts. So yet…

In November last year I posted a script and descriptions for use to allow NetWorker to back up RedHat/CentOS KVM images…

I assume you’ve heard of the wheat and the chessboard problem. It often gets presented as part of the history of chess, or as…

Do you want a backup system, or a staging system? This might seem like an odd question, but I’m asking…

Backup, archive and HSM are funny old concepts in technology and business. We’ve been doing backup for literally decades, and…

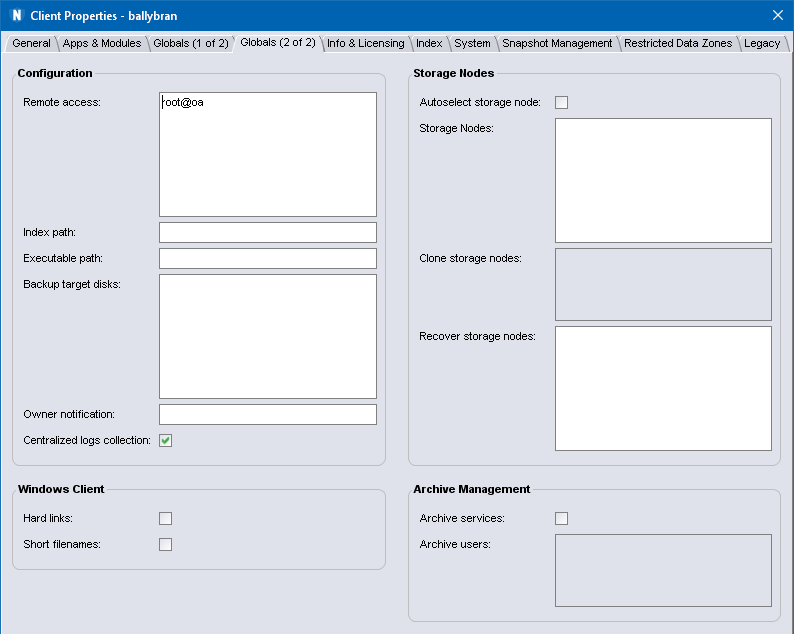

One of the things that I forgot to mention earlier that’s included in the NetWorker 18.1 release is a function…

In late 2016, I wrote a post, Falling in love with the IRS. In that post, I provided a bit…