Introduction: Here’s a question I get asked quite frequently: “If we have a vault, how will the vault team know…

Bad Things Happened at OVH: If you work in infrastructure, you probably saw some reference in the last week to…

“In these strange times…” “In these interesting times…” “In these very different times…” “As we come to grips with the…

Not every business can afford a fully functional disaster recovery location, particularly if it’s a smaller environment with a tighter…

In late 2016, I wrote a post, Falling in love with the IRS. In that post, I provided a bit…

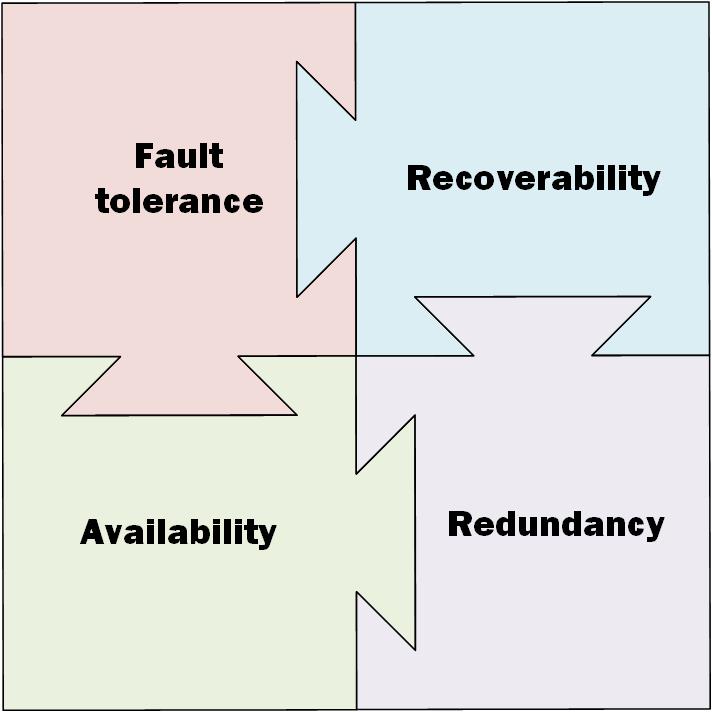

Introduction: In Data Protection: Ensuring Data Availability, I talk quite a lot about what you need to understand and plan…

In the 90s, if you wanted to define a “disaster recovery” policy for your datacentre, part of that policy revolved…

Are your backup administrators people who are naturally paranoid? What about your Data Protection Advocate? What about the members of…

How many datacentres do you have across your organisation? It’s a simple enough question, but the answer is sometimes not…

When I first started working with backup and recovery systems in 1996, one of the more frustrating statements I’d hear…