If you’ve been in IT for a while, you’ll undoubtedly have heard of Data Gravity. To me it’s a…

Introduction In Data Protection: Ensuring Data Availability, I talk quite a lot about what you need to understand and plan…

Data protection lessons from a tomato? Have I gone mad? Bear with me. If you’ve done any ITIL training, the…

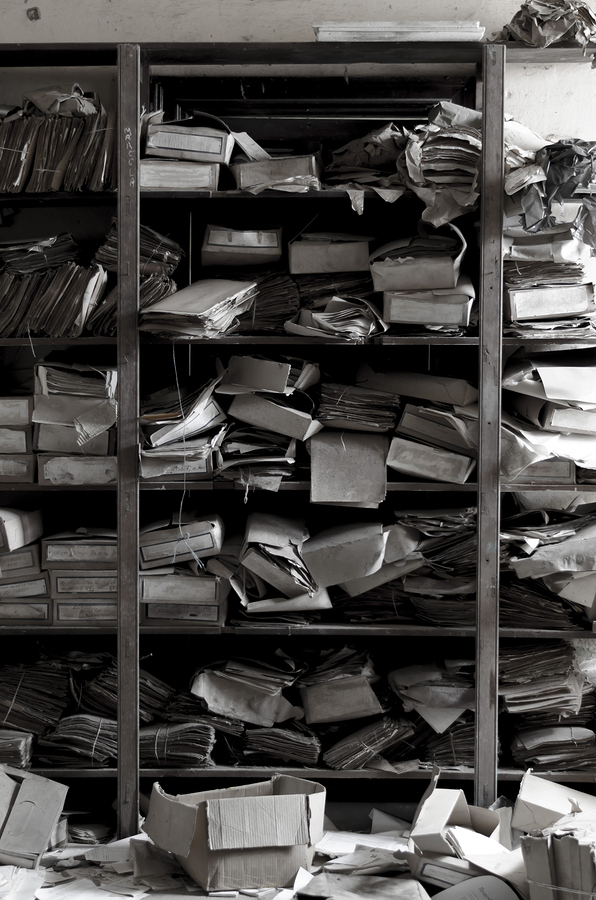

This is the fifth and final part of our four-part series “Data Lifecycle Management”. (By slipping in an aside…

This is the third post in the four-part series, “Data lifecycle management”. The series started with “A basic lifecycle”,…

This is part 2 in the series, “Data Lifecycle Management”. Penny-wise data lifecycle management refers to a situation where companies…

I’m going to run a few posts about overall data management, and central to the notion of data management is…

Within NetWorker, data (savesets) can go through several stages in its lifecycle. Here’s a simple overview of those stages: The…