It’s been almost a month since my last post — sorry about that! I’ve been a bit busy. And I…

“It is a truth universally acknowledged that a computer in possession of good data, must be in want of a…

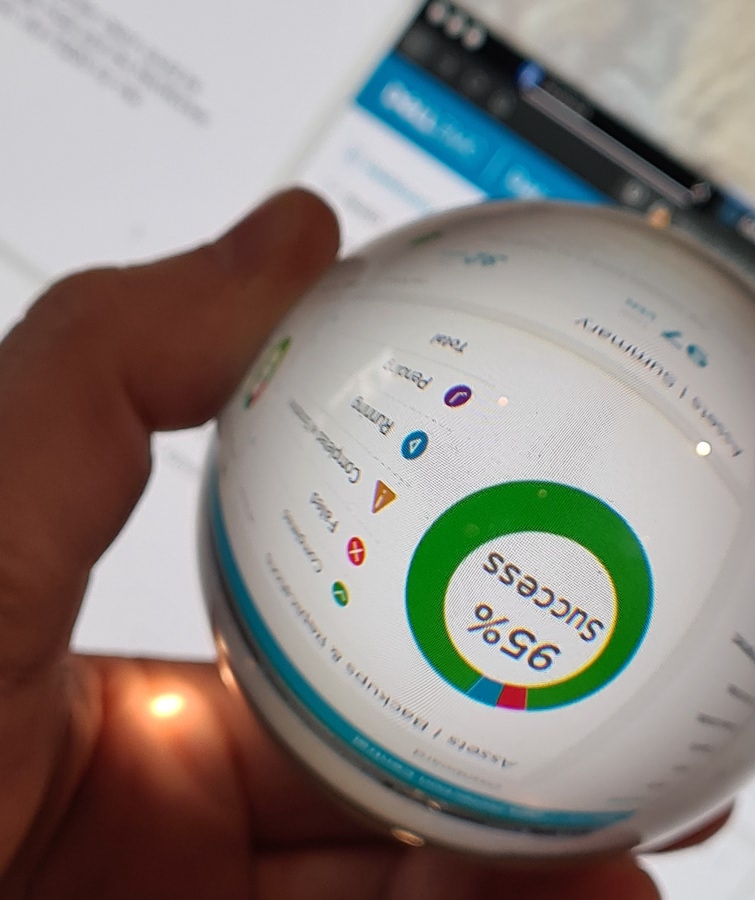

Last week saw the release of version 19.3 of Dell Data Protection Software – Avamar, NetWorker, Data Protection Advisor and…

OK, let’s look at common data protection challenges through the eyes of memes again! I’m a big fan of backup…

It’s that time! Data Protection Software 19.1 has been released. With new versions released simultaneously, that means there are updates available…

Introduction: Earlier this month, Data Protection Suite 18.2 was released. This covers: Avamar, Data Protection Advisor (DPA), Data Protection Central…

In Australia, for many people, Christmas comes twice a year. There’s the official Christmas, December 25, but there’s also Christmas in…

The world is changing, and data protection is changing with it. (OK, that sounds like an ad for Veridian Dynamics,…

I’d like to take a little while to talk to you about licensing. I know it’s not normally considered an exciting subject (usually at…

The cockatrice was a legendary beast that was a two-legged dragon, with the head of a rooster that could, amongst…