This is my blog entry about the SNIA blogfest on 4 November that I attended, but it’s not what I originally intended to be my blog entry. I was intending just to do a slightly more structured dump of all the details I took down during the meetings with the four vendors, but I don’t think this would be fair to my readers.

At the same time, I’m not a storage expert like the other bloggers who were at the event. In particular, Rodney Haywood and Justin Warren have been filling their blogs with a great deal of information on the details discussed by the vendors. Ben Di Qual tweeted a huge amount during the session too, and towards the end of the day he tweeted that maybe it’s time for him to launch a blog. I’m presuming Graeme Elliott will post something as well (maybe here?).

Perhaps the delays I’ve experienced in finding time to blog about the event have been useful, because it’s made me realise I want to summarise my experience in a different way.

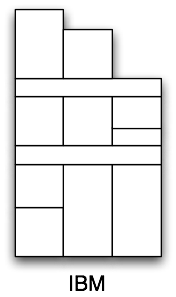

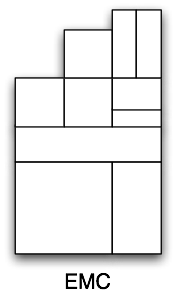

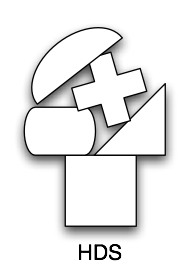

For that, I need an illustration. Having met with the four vendors, and heard their “how we do things” story, I can now visualise each vendor as a tower. And to me, here’s what they look like:

I don’t want to talk about the speeds and feeds of any of the vendors’ products. To me, that’s the boring side of storage – though I still recognise its importance. I don’t really care how many IOPS a storage system can do, for instance, so long as it can do enough for the purposes of the business that’s deploying it. I will say, though, that a common message from vendors was that while companies initially buy storage for capacity, they end up replacing it for performance. (This certainly mirrors what I’ve seen over the years.)

When I got my book published, the first lesson was that it wasn’t, so to speak, a technical book, but an IT management book. Having learnt that lesson, I was subsequently reminded that my passion in IT is about management, processes and holistic strategies. This is partly why backup is my forte, I think; backup is something that touches on everything within an environment, and needs a total business strategy as opposed to an IT policy.

So the message I got out of the vendors was business strategy, not storage options. This is an equally important message though – in fact, it’s arguably a far more in-depth message than a single storage message.

I’m hoping the diagrams help to explain the business strategy message I got from the four vendors.

EMC and IBM, to me, presented the most comprehensive business strategy of the four vendors. This was a mix of what they presented and my prior understanding of their overall product ranges. If I were to rate the actual on-the-day presentations, IBM were certainly the best at communicating their “total business strategy”. IBM also said, though, that they didn’t quite know what information to present, so they just went for a big-picture overview of as much as possible. EMC, whether because as a more active social media company they were treating the event as a literal “storage” event, or just through a lack of (foresight? time taken to prepare?), concentrated just on storage. But I still know much of the EMC story. To be fair to IBM, too, they’ve been doing it for a lot, lot longer than any of the other storage companies – so they’re pretty good communicators.

Neither IBM nor EMC provide a total strategy; they both have gaps, of course – I wouldn’t claim that any vendor out there today provides a total strategy. IBM and EMC though are closer to that full picture than either of the other two vendors we met with on that day.

But what about HDS and NetApp?

I don’t believe HDS have a total business strategy story to tell. They want to, and they try to, but the message comes out all jumbled and patchwork. They contribute disks and some storage systems themselves, try to contribute a unifying management layer, and then add an assortment of diverse and not necessarily compatible products into the mix. To be fair, they’re trying to provide a total business strategy, but if I were to put an “IT Manager” hat on, I’m not convinced I’d be happy with their story. As an IT manager I may very well choose to buy one or two products from them, but their total strategy is so piecemeal that there’s no technology reason to try to source everything from them.

For what it’s worth, their “universal management” interface message is as patchy as their product set. It started with (paraphrasing only!) “we provide a unifying management system” and boiled down to “our goal is to provide a unifying management system, when we can”.

To me, that’s not a unified management system.

I should note at this point that I’m working on the blog entry now on battery power, as all of Bunbury appears to have lost power tonight. (Let me speak another time of the appalling lack of redundancy built into Australian power distribution systems.)

On to NetApp. NetApp try to present a total business strategy story. They speak of unified storage where you don’t need to buy product A for function X, product B for function Y and product C for function Z – instead, you just buy über product A that will handle X, Y and Z.

But what about functions P, Q and R? Coming at the process from an “I’m not into storage” perspective, the first thing that jars me with NetApp is the “old backup is dead [edit: John Martin has rightly corrected me – he said ‘backup is evil’]” story. NetApp focus incredibly hard on trying to point out that you never need to back up again so long as you have the right snapshot configuration.

Like IBM’s “incrementals forever are really cool” story, NetApp’s “with snapshots you never need to back up again” strikes me as being almost at a 90-degree angle to reality.

(Aside: Of course I’m interested in hearing counter arguments from IBM, and I’ve actually requested trial access to their TSM FastBack product, since I am interested in what it may be able to do – but being told that it doesn’t matter how many incremental backups you’ve done because (paraphrasing!) “a relational database ensures that the recovery is really fast by tracking the backups” isn’t something that convinces me.)

NetApp’s “you never need to back up again” story is at times, from my conservative approach to data protection, a bit like The Wizard of Oz: “Pay no attention to that man behind the curtain!” Yet having a company repeatedly insist that X is true because X is true doesn’t make me believe for one moment that X is true. And so I remain unconvinced that “snapshots forever = backups never” is a valid business strategy except in the most extreme of cases.

Or to put it another way: HDS trotted out an example about achieving only a 4% saving by doing dedupe against 350 virtual machines. I’d call this trying to use an extreme example as a generic one: I’m sure they did encounter a situation where they only got a 4% storage saving by applying dedupe against 350 virtual machines; but I’m equally sure that one example can’t be used as proof that you can’t dedupe against virtual machines.

Likewise, I call shenanigans on NetApp’s bid to declare traditional backup dead [edit: evil]. Sure, the biggest of the big companies with the biggest of budgets might be able to do a mix of snapshots and replication and … etc., but very, very few companies can completely eliminate traditional backup. They may of course reduce their need for it in all situations. As soon as you’ve got snapshot-capable storage, particularly in the NAS market, you can let users recover data from snaps and then focus backups on longer-term protection etc. But that’s not eliminating traditional backup altogether.

[Edit: Following on from correction; I’d like to see NetApp’s “how backup fits into our picture” strategy in better detail, and based on John’s comments, I’m sure he’ll assist!]

So in that sense, we have four vendors who each try, in their own way, to provide a total business strategy. IBM and EMC are the ones that get closest to that strategy, and both NetApp and HDS in their own way were unable to convince me they’re able to do that.

That doesn’t mean to say they should be ignored, of course – but they’re clearly the underdogs in terms of complete offerings. They both clearly have a more complete story to tell than, say, one of the niche storage vendors (e.g., Xiotech or Compellent), but their stories are nowhere near as complete as those of the vendors who aim for the total vertical.

It was a fascinating day, and I’d like to thank all those involved: Paul Talbut and Simon Sharwood from SNIA; Clive Gold and Mark Oakey from EMC; John Martin from NetApp; Adrian De Luca from HDS; and Craig McKenna, Anna Wells and Joe Cho from IBM. (There were others from IBM, but I’m sorry to say I’ve forgotten their names.) Of course, big thanks must also go to my fellow storage bloggers/tweeters – Rodney Haywood, Justin Warren, Ben Di Qual and Graeme Elliott. Without any of those people, the day could not have been as useful or interesting as it was.

And there you have it.

Now for the disclosures:

- EMC bought us coffee.

- EMC gave us folios with a pad and paper. I took one. The folio will end up in my cupboard with a bunch of other vendor provided folios from over the years.

- IBM gave us “leftover” neoprene laptop bags from a conference, each with a small pen and pad in it. My boyfriend claimed the neoprene bag.

- IBM bought us coffee.

- IBM provided lunch.

- HDS provided afternoon tea.

- HDS provided drip-filter coffee. I did not however in any way let the drip filter coffee colour my experience on-site. (Remember, I’m a coffee snob.)

- NetApp provided beer and wine. I had another engagement to get to that night, and did not partake in any.

Outstanding!

People who claim backup is “dead” have about as much of a leg to stand on as those who proclaim tape dead, or disk dead in the wake of SSD. I’m still not sold on dedupe being worth the price tag that comes with it.

Nice writeup, though it seems the statements I made around backup put you offside.

I wish I’d had more time to go into more detail on my position on backup and had communicated it more clearly. My problem with backup is specifically “old school” backup, which relies on regular “bulk copy” operations. This architecture stopped scaling ages ago, due mostly to the fact that it’s really hard to pull the data off the spindles in any kind of reasonable timeframe. There are also a number of other problems with the way backup systems are used (e.g. as archiving systems).

As I said at the presentation, every major backup vendor is moving towards using a data protection scheme based around some combination of some or all of the following:

- Snapshots on the primary.

- Replication of changed blocks.

- Dense disk-based storage for the backup repository.

- Optional tape-based backup of the repository.

NetApp SnapVault, EMC’s Avamar, Symantec PureDisk and Microsoft’s DPM are all examples of this, and for good reason: they solve the biggest problem that faces backup and data protection – how do I pull exponentially increasing amounts of data from ever decreasing numbers of physical disks?
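To make the “snapshots plus replication of changed blocks” idea a little more concrete, here is a minimal sketch in Python. It is purely illustrative and is not how SnapVault, Avamar, PureDisk or DPM actually work: the tiny block size, the use of SHA-256 to compare blocks, and the function names `block_hashes`, `changed_blocks` and `apply_delta` are all assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4  # hypothetical; tiny so the example is easy to follow


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list[str]:
    """Split a snapshot image into fixed-size blocks and hash each one."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes,
                   block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return {block_index: block_bytes} for blocks that differ between
    the previous snapshot (old) and the current one (new)."""
    old_hashes = block_hashes(old, block_size)
    new_hashes = block_hashes(new, block_size)
    delta = {}
    for index, digest in enumerate(new_hashes):
        # A block is "changed" if it is new or its hash no longer matches.
        if index >= len(old_hashes) or old_hashes[index] != digest:
            start = index * block_size
            delta[index] = new[start:start + block_size]
    return delta


def apply_delta(replica: bytes, delta: dict[int, bytes], new_length: int,
                block_size: int = BLOCK_SIZE) -> bytes:
    """Apply the changed blocks to the copy held in the backup repository."""
    # Resize the replica to the new length (truncate, or pad with zeros).
    buf = bytearray(replica[:new_length].ljust(new_length, b"\0"))
    for index, block in delta.items():
        start = index * block_size
        buf[start:start + len(block)] = block
    return bytes(buf)
```

With a previous snapshot of `b"AAAABBBBCCCC"` and a current one of `b"AAAAXXXXCCCCDD"`, only the second and fourth blocks would be sent to the repository. The unchanged blocks never leave the primary, which is the contrast with a “bulk copy” backup that rereads everything.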

As I pointed out in response to one of your questions, this is not to say that bulk copy backup methods and tape have no place in the data protection scheme. Tape as an “offline” medium, not in an autoloader, but truly offline in something like a fireproof safe, protects against almost all forms of data loss. This makes it useful for making periodic (e.g. monthly) D/R copies of the disk-based dense repository, which is something I recommend at every briefing I give. However, its role as the primary method of data protection is rapidly coming to an end.

Backup is by no means dead, but the bulk copy architectures designed in the 90s don’t scale effectively and actively work against dynamic rebalancing of datasets and workloads across datacenters.

I’d be happy to debate the finer points here on your blog, or put up a full explanation of my position on my blog by completing the “Backup is Evil” posts I started here http://storagewithoutborders.com/2010/04/01/backup_is_evil_part1/

HDS can’t have a total business strategy if they provide drip-filter coffee… 😉

Backup to disk (with dedupe as an option) over the SAN, and cloning to (offsite) tapes, makes most sense for me. That way you can avoid the shoeshine effect on tapes with slow clients, you have fast random access times for restores, and the savesets are copied to another medium at a different location.

In my opinion, using primary storage space for snapshots and replication can only be an additional safeguard for higher demands (small restore and data loss time windows).

First the disclosures. I work for an organization that architects, implements and resells HDS and NetApp technologies.

IBM and EMC are enormous companies and the breadth of their offerings allows them to address a wide range of customer needs. IBM and EMC in particular have always been strong in sales and marketing. Their ability to share their vision is in large part why they are so successful. This is not a criticism of these organizations; I’m simply stating that they are very good at messaging.

I am not an IT Manager but I frequently interact with individuals that serve in this capacity to develop and implement solutions. I did not attend the SNIA blogfest so I have no idea what was presented but I do have a different perspective on these vendors. I am sure this is surprising given my affiliations!

When I look at EMC I see a company that, primarily through acquisitions, has a long list of products. This allows them to address virtually any data storage or management need you might have, but I am not sure that I equate that with having a great strategy. It certainly gives you a single source for all of your needs and the coveted “one throat to choke”. At the same time, I have not seen where this gives organizations a significant advantage over those that choose to pursue a best of breed strategy.

It seems logical that a single provider, in particular if they own all of the technology, would be able to provide a more compelling solution. The products could be more tightly integrated and the support less fragmented, but evidently it is not that simple. In addition to HDS and NetApp I represent other organizations, some of which have large product lines and frequently acquire companies and technologies to expand or improve their offerings. What I have found is that in many cases, with the exception of the marketing materials, the products are not integrated. I cannot tell you the amount of marchitecture I have had to go through to try and uncover exactly where and how the products are integrated, only to discover that it consists of a “link and launch button”. If you implement the technologies and need to call support, you may find that although it is a single support number, the support groups are completely separate and unfamiliar with anything other than their specific product. This results in the finger pointing that most people were trying to avoid in the first place. The only single throat available for choking is the sales rep, and he’s not always a lot of help.

When I look at organizations such as HDS and NetApp, they rely more on partnerships and less on acquisitions. Some will point to OEM relationships or partnering agreements and say that the products are just bolted together, lacking any real integration. In some cases, in particular with software, this may be true. If you’re developing backup software you want to address the largest possible market, and it may not provide any specific benefits when combined with one storage technology or another. But is that a bad thing? That also means that you can change storage vendors without the need to replace your backup software or lose functionality.

What I have seen is that most organizations that purchase everything from a single source do not do so because they believe that the technologies or vision are superior, but rather because of FUD – Fear, Uncertainty and Doubt. The customer believes that using technologies from multiple vendors will result in chaos and failure. To be clear, if you are not selective in the technologies you choose or conscientious in your approach, this could happen. What I am suggesting is that in many cases you can build superior all-round solutions using best of breed technologies. This is not the same as saying “you can build superior solutions with any technology and feel free to incorporate as many as possible!”

This is where I believe HDS and NetApp fit. They do not have the range of products that EMC and IBM have to offer, but instead rely on partnerships or the broader technology market as a whole. I do not see this as a shortcoming – they are focused on being the best in the areas they address, not being all things to all people.

Disclosure – EMCer here.

The question of backup is a funny one to me. I just can’t believe, intellectually, that NetApp believes their own story. If that WERE true, they never would have bid on Data Domain, and I don’t believe for a second that it was done “to have EMC buy them”.

Au contraire, we both saw a HUGE demand (not at the exclusion of other approaches) at customers for target-based, inline dedupe that was effective, simple and worked.

I just don’t get how it’s possible to now say “ah – that’s rubbish” (or “evil”) when CLEARLY it’s not, and CLEARLY it’s not something that was believed by NetApp themselves fundamentally.

I’m not pooh-poohing their approach. I’m pooh-poohing the idea that there is ever a “one way, always” answer.

Re the question of acquisition. EMC has a ~$2B R&D budget, and as a proportion of our overall revenue, it’s at the high end of the high-tech scale. We have engineers around the globe. I would posit that they are no more, no less intelligent/effective than people at other companies. This has resulted in boatloads of “home grown innovation” (think virtual matrix, think scale-out commodity x86 storage, think automated non disruptive storage reconfig, think modular IO blade, think Atmos, think C4 – it’s a long long list).

The problem for any company is that you CANNOT be limited by your own rate of innovation – innovation and disruption occurs across the industry. Acquisition (of products, people, markets) is an important part of business.

I think that the ability for a company to have a DNA that enables BOTH internal innovation and acquisition (without intrinsic “tissue rejection”) is a good thing.

THAT ALL SAID – we’ve been spending a TON of R&D on solving the problem of “how can we make 1 (internal innovation) + 1 (acquisition) != ‘bunch of boxes with different UIs making things complex’”. Customers often want the benefits and flexibility, but if they DIDN’T have to have different things to answer their problems, that would be goodness, and the best of both worlds.

Stay tuned on that front.

Thanks for your summary Preston. Your diagram summary was refreshing and interesting…yet after reading your article I realized I read something very different into the diagrams (and my perspective on those vendors).

Looking at the diagrams alone, I’d conclude that NetApp has a clearly defined offering, HDS is all over the place, and IBM and EMC are a stack of acquisitions and dev effort to complete the story.

The one-vendor “superstack” you have drawn for IBM and EMC has always bothered me. Unification is critical – imagine a world where you couldn’t transfer data between your home PC and your work PC because Microsoft had different filesystems and protocols for home, SMB and enterprise users?

Backup isn’t dead, tape isn’t even dead – but ‘backup’ is fading into something thought of less in terms of recovery and more in terms of long-term retention.

Disclaimer: I work for an SI with relationships with IBM, NetApp and HP storage.