We are approaching the point where it would be conceivable for someone to build a PB disk system in their home. It wouldn’t be cheap, but it’s a hell of a lot cheaper than at any point in computing history.

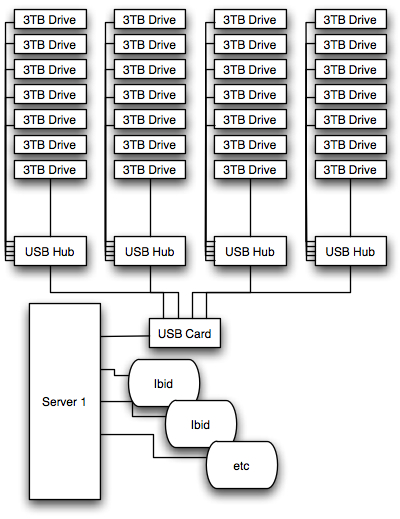

Do you think I’ve gone crazy? Do the math – using 3TB drives, you’d only need 342 drives to get to 1PB. If you wanted to do it really nasty, you could use USB drives, 7-port hubs and a series of PCIe USB cards.

You can get standard motherboards now with 8 PCIe ports. 8 x 4-port USB-2 cards would yield 32 incoming USB channels. Throw a 7-port USB hub onto the end of each of those channels, and you’d get 224 USB connections. Not quite 342, so expand your design a little bit, deploy 2 hosts, split the drives between them and throw in a clustered filesystem across them.
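If you want to check the arithmetic, here's a quick sketch. It assumes 1PB means 1024TB (which is where 342 comes from) and that every hub port gets exactly one drive; the variable names are just mine.

```python
import math

# Figures from the post; 1 PB is taken as 1024 TB (binary units).
DRIVE_TB = 3
TARGET_TB = 1024

PCIE_SLOTS = 8        # PCIe slots on the motherboard
PORTS_PER_CARD = 4    # USB ports per PCIe card
HUB_PORTS = 7         # drives hanging off each 7-port hub

# Round up: you can't buy a third of a drive.
drives_needed = math.ceil(TARGET_TB / DRIVE_TB)

# One hub per incoming USB channel, one drive per hub port.
channels_per_host = PCIE_SLOTS * PORTS_PER_CARD
connections_per_host = channels_per_host * HUB_PORTS

# Round up again to find how many hosts the drives must be split across.
hosts_needed = math.ceil(drives_needed / connections_per_host)

print(f"drives needed:        {drives_needed}")
print(f"channels per host:    {channels_per_host}")
print(f"connections per host: {connections_per_host}")
print(f"hosts needed:         {hosts_needed}")
```

Two hosts give you 448 connections, which leaves room to spare for the 342 drives.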

Voilà! It’ll be a messy pile of cables and you’ll need decent 3-phase power coming into your house, but you’ll have a PB of storage! Here’s the start of the system diagram before I got too bored and realised I’d need a much bigger screen:

And if you haven’t lost your current meal laughing at this yet – I’ll state the brutally obvious. Performance would suck. For very, very large values of suck. Reliability would suck too, for equally large values of suck.
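To put a rough number on "suck", here's a back-of-the-envelope sketch of the best possible time to read the whole PB back. It assumes every one of the 32 incoming channels on each of the 2 hosts runs at the full USB 2.0 signalling rate of 480 Mbit/s, with no protocol overhead, no hub contention and no bottleneck in the hosts themselves. None of that would hold in practice, so treat the result as a floor, not a prediction. The function name is mine.

```python
# Best-case full-read time for the USB monstrosity. All assumptions are
# deliberately generous: real USB 2.0 throughput is well below the
# 480 Mbit/s signalling rate, and 7 drives share each channel.
USB2_BITS_PER_SEC = 480_000_000   # USB 2.0 high-speed signalling rate
HOSTS = 2
CHANNELS_PER_HOST = 32            # 8 cards x 4 ports, as in the post
PB_BYTES = 2**50                  # 1 PB in binary units


def best_case_read_hours(total_bytes, channels, bits_per_sec_per_channel):
    """Hours to read total_bytes if every channel is saturated in parallel."""
    bytes_per_sec = channels * bits_per_sec_per_channel / 8
    return total_bytes / bytes_per_sec / 3600


hours = best_case_read_hours(PB_BYTES, HOSTS * CHANNELS_PER_HOST, USB2_BITS_PER_SEC)
print(f"best case: {hours:.0f} hours ({hours / 24:.1f} days)")
```

Even with those fantasy numbers it works out to a little over three days of flat-out reading, and the real figure would be far worse.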

But you’d have a PB of storage.

That’s what it’s all about, isn’t it? Well, no. And we all know that.

So if we recognise that, when we look at a blatantly absurd example, why is it that we can be sucked into equally absurd configurations?

I remember in the early noughties, when the CLARiiON line got its first ATA line. The sales guys at the company I worked for at the time started selling FC arrays to customers with snapshots going to ATA disk to make snapshots cheap.

The snapshots were indeed cheap.

But as soon as the customers started using their storage with active snapshots, their performance sucked – for large values of suck.

And the customers got angry.

And there was much running around and gnashing of teeth.

And I, a software-only guy, sat there thinking “who would be insane enough to think that this would have been OK?”

It’s the old saying – you can have cheap, good or fast. Pick two.

Sometimes, cheap means you don’t even get to pick a second option.

By now you’re probably thinking that I’m on some meandering ramble that doesn’t have a point. And, if you’re about to give up and close the browser tab, you’d be right.

But, if you went on to this paragraph, you’d get to read my point: if you wouldn’t use a particular server, storage array or configuration in a primary production server, you shouldn’t be considering it for the backup server that has to protect those primary production servers either.

No, not in scenario X, or possibility Y. If you wouldn’t use it for primary production, you don’t use it for support production.

It’s that simple.

And if you want to, feel free to go build that cheap and cheerful 1PB storage array for your production storage. I’m sure your users will love it – about as much as they’d love it if you used that 1PB array to back up your production systems and then had to do a recovery from it. That’s my point; it’s not just about buying something that performs for backup – it’s about having something that has sufficient performance for recovery.

So if someone starts talking to you about deploying X for backup, and X is a bit slower, but it’s a lot cheaper, just consider this: would you use X for your primary production system? If the answer is no, then you’d better have some damn good performance data at hand to show that it’s appropriate for the backup of those primary production systems.