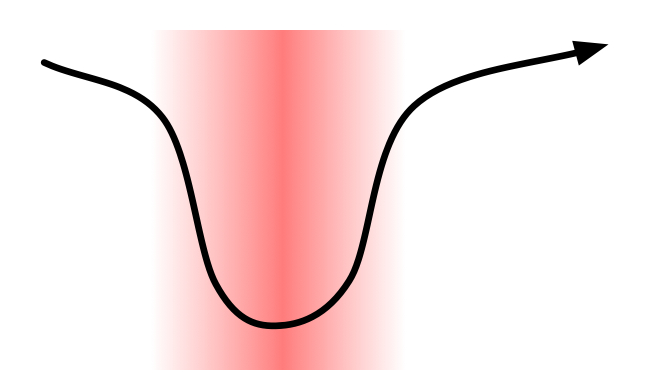

What’s the ravine?

When we talk about data flow rates into a backup environment, it’s easy to focus on the peak speeds – the maximum write performance you can get to a backup device, for instance.

However, sometimes that peak flow rate is almost irrelevant to the overall backup performance.

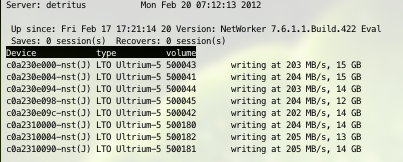

Many hosts will exist within an environment where only a relatively modest percentage of their data can be backed up at peak speed; the vast majority of their data will instead be backed up at suboptimal speeds. For instance, consider the following nsrwatch output:

That’s a write speed averaging 200MB/s per tape drive (peaks were actually 265MB/s in the above tests), for an aggregate write rate of around 1.5-1.6GB/s.

However, unless all your data is highly optimised structured data running on high performance hardware with high performance networking, your real-world experiences will vary considerably on a minute-to-minute basis. As soon as filesystem overheads become a significant factor in the backup activity (i.e., you hit fileservers, the regular operating system and application areas of a host, etc.), your backup performance is generally going to drop by a substantial margin.

This is easy enough to test in real-world scenarios; take a chunk of a filesystem (at least 2x the memory footprint of the host in question), and compare the time to back up:

- The actual files;

- A tar of the files.

You’ll see in that situation that there’s a massive performance difference between the two. If you want to see some real-world examples on this, check out “In-lab review of the impact of dense filesystems”.
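If you'd like to get a feel for the difference before involving the backup product at all, a rough read-only approximation is sketched below. It is not a backup test: it simply times a walk-and-read of a directory tree against a sequential read of an uncompressed tar of the same tree. The function names and the result dictionary are my own for illustration. Note that building the tar reads every file, so unless the sample is well over the host's memory size (the 2x rule above) the walk will be served from cache and look far better than it should.

```python
import os
import tarfile
import time

CHUNK = 1 << 20  # read in 1 MiB chunks


def read_file(path: str) -> int:
    """Read one file sequentially and return the number of bytes read."""
    total = 0
    with open(path, "rb") as fh:
        while chunk := fh.read(CHUNK):
            total += len(chunk)
    return total


def read_tree(root: str) -> int:
    """Walk root and read every file found, returning total bytes read."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            total += read_file(os.path.join(dirpath, name))
    return total


def compare_tree_and_tar(root: str, tar_path: str) -> dict:
    """Time reading the files individually versus reading a tar of them.

    tar_path must live outside root, otherwise the archive would be
    added to itself.
    """
    with tarfile.open(tar_path, "w") as tar:  # "w" = uncompressed tar
        tar.add(root, arcname=".")

    start = time.perf_counter()
    tree_bytes = read_tree(root)
    tree_seconds = time.perf_counter() - start

    start = time.perf_counter()
    tar_bytes = read_file(tar_path)
    tar_seconds = time.perf_counter() - start

    return {
        "tree_bytes": tree_bytes,
        "tree_seconds": tree_seconds,
        "tar_bytes": tar_bytes,
        "tar_seconds": tar_seconds,
    }
```

Dividing each byte count by its elapsed time gives a MB/s figure for the two cases; the real comparison is still the one described above, run through the backup software itself.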

Unless pretty much all of your data environment consists of optimised structured data which is optimally available, you’ll likely need to focus your performance tuning activities on the performance ravine – those periods of time where performance is significantly sub-optimal. Or to consider it another way – if absolute optimum performance is 200MB/s, spending a day increasing that to 205MB/s doesn’t seem productive if you also determine that 70% of the time the backup environment is running at less than 100MB/s. At that point, you’re going to achieve much more if you flatten the ravine.
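To put rough numbers on that comparison, here is a small sketch that computes a time-weighted average throughput. It assumes the environment spends 30% of the backup window at peak and 70% at exactly 100MB/s, which is generous given the ravine is described as running at less than 100MB/s. The 150MB/s figure for a "flattened" ravine is purely illustrative.

```python
def effective_throughput(profile):
    """Time-weighted mean throughput in MB/s.

    profile is a list of (fraction_of_time, mb_per_second) pairs whose
    fractions must add up to 1.
    """
    total_fraction = sum(fraction for fraction, _rate in profile)
    if abs(total_fraction - 1.0) > 1e-9:
        raise ValueError("time fractions must add up to 1")
    return sum(fraction * rate for fraction, rate in profile)


# 30% of the time at peak, 70% of the time in the ravine (assumed at 100MB/s).
baseline = effective_throughput([(0.3, 200), (0.7, 100)])

# A day spent tuning the peak from 200MB/s to 205MB/s.
tuned_peak = effective_throughput([(0.3, 205), (0.7, 100)])

# The same effort spent lifting the ravine to a hypothetical 150MB/s.
flattened = effective_throughput([(0.3, 200), (0.7, 150)])

if __name__ == "__main__":
    print(f"baseline:   {baseline:.1f} MB/s")
    print(f"tuned peak: {tuned_peak:.1f} MB/s")
    print(f"flattened:  {flattened:.1f} MB/s")
```

Under those assumptions the peak tuning moves the average from roughly 130MB/s to roughly 131.5MB/s, while lifting the ravine moves it to roughly 165MB/s.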

Looking for a quick fix

There are various ways that you can aim to do this. If we stick purely within the backup realm, then you might look at factoring in some form of source based deduplication as well. Avamar, for instance, can ameliorate some issues associated with unstructured data. Admittedly though, if you don’t already have Avamar in your environment, adding it can be a fairly big spend, so it’s at the upper range of options that may be considered, and even then won’t necessarily always be appropriate, depending on the nature of that unstructured data.

Traditional approaches have included sending multiple streams per filesystem, and (on some occasions) considering block-level backup of filesystem data (e.g., via SnapImage – though increasing virtualisation is further reducing SnapImage’s number of use-cases), or using NDMP if the data layout is more amenable to better handling by a NAS device.

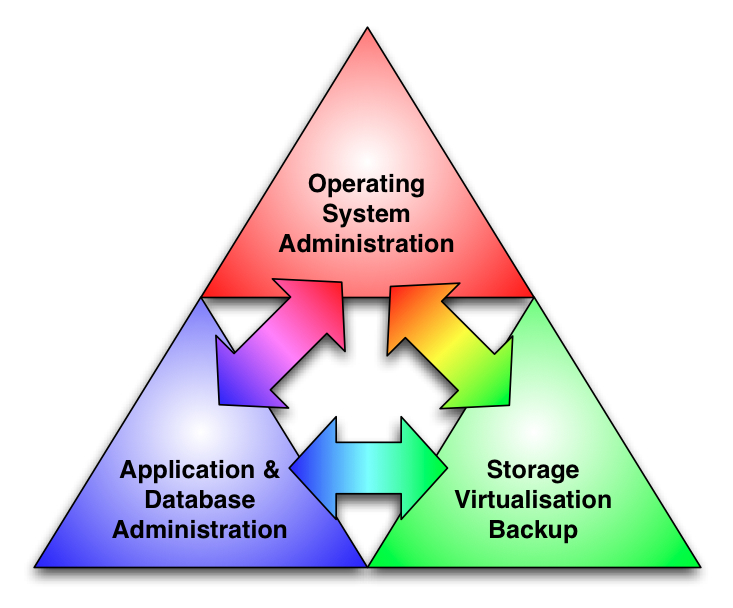

What the performance ravine demonstrates is that backup is not an isolated activity. In many organisations there’s a tendency to have segmentation along the lines of:

- Operating system administration;

- Application/database administration;

- Virtualisation teams;

- Storage teams;

- Backup administration.

Looking for the real fix

In reality, fixing the ravine needs significant levels of communication and cooperation between the groups, and, within most organisations, a merger of the final three teams above, viz:

The reason we need such close communication, and even team merger, is that baseline performance improvement can only come when there’s significant synergy between the groups. For instance, consider the classic dense-filesystem issue. Three core ways to solve it are:

- Ensure the underlying storage supports large numbers of simultaneous IO operations (e.g., a large number of spindles) so that multistream reads can be achieved;

- Shift the data storage across to NAS, which is able to handle processing of dense filesystems better;

- Shift the data storage across to NAS, and do replicated archiving of infrequently accessed data to pull the data out of the backup cycle altogether.

If you were hoping this article might be about quick fixes to the slower part of backups, I have to disappoint you: it’s not so simple, and as suggested by the above diagram, is likely to require some other changes within IT.

If merger in itself is too unwieldy to consider, the next option is the forced breakdown of any communication barriers between those three groups.

A ravine of our own making

In some senses, we were spoilt when gigabit networking was introduced; the solution became fairly common – put the backup server and any storage nodes on a gigabit core, and smooth out those ravines by ensuring that multiple savesets would always be running; therefore even if a single server couldn’t keep running at peak performance, there was a high chance that aggregated performance would be within acceptable levels of peak performance.

Yet unstructured data has grown at a rate which quite frankly has outstripped sequential filesystem access capabilities. It might be argued that operating system vendors and third party filesystem developers won’t make real inroads on this until they can determine adequate ways of encapsulating unstructured filesystems in structured databases, but development efforts down that path haven’t as yet yielded any mainstream available options. (And in actual fact just caused massive delays.)

The solution as environments switch over to 10Gbit networking however won’t be so simple – I’d suggest it’s not unusual for an environment with 10TB of used capacity to have a breakdown of data along the lines of:

- 4 TB filesystem

- 2 TB database (prod)

- 3 TB database (Q/A and development)

- 500 GB mail

- 500 GB application & OS data

Assuming by “mail” we’ve got “Exchange”, then it’s quite likely that 5.5TB of the 10TB space will back up fairly quickly – the structured components. That leaves 4.5TB hanging around like a bad smell though.
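A quick sketch of what that split can mean for the backup window follows. The sizes come from the breakdown above; the two throughput figures are assumptions for illustration only (200MB/s borrowed from the tape drive average mentioned earlier, and a hypothetical 50MB/s for dense unstructured data), and the calculation treats each class as a single serial stream using decimal units.

```python
from dataclasses import dataclass

MB_PER_TB = 1_000_000  # decimal units: 1 TB = 1,000,000 MB


@dataclass(frozen=True)
class DataSet:
    name: str
    size_tb: float
    structured: bool


# The 10TB breakdown from the article.
ENVIRONMENT = [
    DataSet("filesystem", 4.0, structured=False),
    DataSet("database (prod)", 2.0, structured=True),
    DataSet("database (Q/A and development)", 3.0, structured=True),
    DataSet("mail", 0.5, structured=True),
    DataSet("application & OS data", 0.5, structured=False),
]


def total_tb(datasets, structured: bool) -> float:
    """Sum the sizes of the structured (or unstructured) data sets."""
    return sum(d.size_tb for d in datasets if d.structured == structured)


def hours_to_back_up(size_tb: float, rate_mb_s: float) -> float:
    """Hours needed to move size_tb at a sustained rate_mb_s."""
    return size_tb * MB_PER_TB / rate_mb_s / 3600


STRUCTURED_RATE = 200    # MB/s, assumed
UNSTRUCTURED_RATE = 50   # MB/s, hypothetical ravine speed

structured_hours = hours_to_back_up(total_tb(ENVIRONMENT, True), STRUCTURED_RATE)
unstructured_hours = hours_to_back_up(total_tb(ENVIRONMENT, False), UNSTRUCTURED_RATE)
```

With those assumed rates the 5.5TB of structured data needs a little under 8 hours, while the 4.5TB of unstructured data needs 25 hours, so the smaller share of the data dominates the window.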

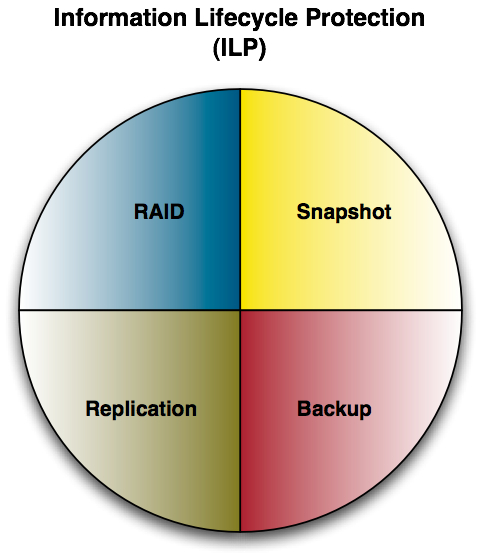

Unstructured data though actually proves a fundamental point I’ve always maintained – that Information Lifecycle Management (ILM) and Information Lifecycle Protection (ILP) are two reasonably independent activities. If they were the same activity, then the resulting synergy would ensure the data were laid out and managed in such a way that data protection would be a doddle. Remember that ILP resembles the following:

One place where the ravine can be tackled more readily is in the deployment of new systems, which is where that merger of storage, backup and virtualisation comes in, not to mention the close working relationship between OS, Application/DB Admin and the backup/storage/virtualisation groups. Most forms and documents used by organisations when it comes to commissioning new servers will have at most one or two fields for storage – capacity and level of protection. Yet, anyone who works in storage, and equally anyone who works in backup will know that such simplistic questions are the tip of the iceberg for determining performance levels, not only for production access, but also for backup functionality.

The obvious solution to this is service catalogues that cover key factors such as:

- Capacity;

- RAID level;

- Snapshot capabilities;

- Performance (IOPS) for production activities;

- Performance (MB/s) for backup/recovery activities (what would normally be quantified under Service Level Agreements, also including recovery time objectives);

- Recovery point objectives;

- etc.
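One way to picture such a catalogue entry is as a simple record with a sanity check attached. The sketch below is only that: the field names, units and the `meets_rto` check are my own invention rather than any product's schema, and the check assumes a full restore runs at the same sustained rate quoted for backup/recovery.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageServiceEntry:
    """Hypothetical service catalogue entry for a new system's storage."""
    capacity_gb: float
    raid_level: str
    snapshots_supported: bool
    production_iops: int
    backup_mb_per_s: float   # sustained backup/recovery throughput
    rto_hours: float         # recovery time objective
    rpo_hours: float         # recovery point objective

    def full_restore_hours(self) -> float:
        """Hours to restore the full capacity at the quoted throughput."""
        return self.capacity_gb * 1000 / self.backup_mb_per_s / 3600

    def meets_rto(self) -> bool:
        """True if a full restore fits inside the recovery time objective."""
        return self.full_restore_hours() <= self.rto_hours


# Example values are made up for illustration.
fileserver = StorageServiceEntry(
    capacity_gb=4000,
    raid_level="RAID 6",
    snapshots_supported=True,
    production_iops=5000,
    backup_mb_per_s=50,
    rto_hours=8,
    rpo_hours=24,
)
```

Here a 4TB filesystem that can only be streamed at 50MB/s takes over 22 hours to restore, so the entry fails its own 8 hour objective at commissioning time rather than during a recovery.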

But what has all this got to do with the ravine?

I said much earlier in the piece that if you’re looking for a quick solution to the poor-performance ravine within an environment, you’ll be disappointed. In most organisations, once the ravine appears, there’ll need to be at least technical and process changes in order to adequately tackle it – and quite possibly business structural changes too.

Take (as always seems to be the bad smell in the room) unstructured data. Once it’s built up in a standard configuration beyond a certain size, there’s no “easy” fix because it becomes inherently challenging to manage. If you’ve got a 4TB filesystem serving end users across a large department or even an entire company, it’s easy enough to think of a solution to the problem, but thinking about a problem and solving it are two entirely different things, particularly when you’re discussing production data.

It’s here where team merger seems most appropriate; if you take storage in isolation, a storage team will have a very specific approach to configuring a large filesystem for unstructured data access – the focus there is going to be on maximising the number of concurrent IOs and ensuring that standard data protection is in place. That’s not, however, always going to correlate to a configuration that lends itself to traditional backup and recovery operations.

Looking at ILP as a whole though – factoring in snapshot, backup and replication, you can build an entirely different holistic data protection mechanism. Hourly snapshots for 24-48 hours allow for near instantaneous recovery – often user initiated, too. Keeping one of those snapshots per day for say, 30 days, extends this considerably to cover the vast number of recovery requests a traditional filesystem would get. Replication between two sites (including the replication of the snapshots) allows for a form of more traditional backup without yet going to a traditional backup package. For monthly ‘snapshots’ of the filesystem though, regular backup may be used to allow for longer term retention. Suddenly when the ravine only has to be dealt with once a month rather than daily, it’s no longer much of an issue.
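The snapshot schedule described there can be expressed as a small retention rule. This is a minimal sketch, assuming hourly snapshots, a 48 hour hourly window, and that the daily keeper is whichever snapshot was taken in the midnight hour; the constant and function names are hypothetical, and the monthly backup is left to the backup product.

```python
from datetime import datetime, timedelta

HOURLY_WINDOW = timedelta(hours=48)  # keep every hourly snapshot this long
DAILY_WINDOW = timedelta(days=30)    # keep one snapshot per day this long
DAILY_KEEPER_HOUR = 0                # assumption: the midnight snapshot is kept


def should_keep(taken_at: datetime, now: datetime) -> bool:
    """Decide whether a snapshot taken at taken_at is still retained at now."""
    age = now - taken_at
    if age < timedelta(0):
        raise ValueError("snapshot is dated in the future")
    if age <= HOURLY_WINDOW:
        return True
    if age <= DAILY_WINDOW:
        return taken_at.hour == DAILY_KEEPER_HOUR
    return False  # older than 30 days: covered by the monthly backup


def expired(snapshots, now):
    """Return the snapshot timestamps that can be deleted."""
    return [taken_at for taken_at in snapshots if not should_keep(taken_at, now)]
```

Running `expired` over a list of snapshot timestamps once an hour would leave the hourly set for the last two days plus one snapshot per day for the last month.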

Yet, that’s not the only way the problem might be dealt with – what if 80% of that data being backed up is stagnant data that hasn’t been looked at in 6 months? Shouldn’t that then require deleting and archiving? (Remember, first delete, then archive.)
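Whether a filesystem really is 80% stagnant is something you can estimate before arguing about it. The sketch below walks a tree and reports the fraction of bytes in files that have been neither read nor modified within a cutoff. It assumes access times are actually being recorded (filesystems mounted with options such as `noatime` will make everything look stale), and the 182 day cutoff is just my approximation of six months.

```python
import os
import stat
import time

SIX_MONTHS_SECONDS = 182 * 24 * 3600  # rough approximation of six months


def stagnant_fraction(root, now=None, max_idle=SIX_MONTHS_SECONDS):
    """Fraction of bytes under root not accessed or modified within max_idle."""
    now = time.time() if now is None else now
    total_bytes = 0
    stale_bytes = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                info = os.stat(os.path.join(dirpath, name), follow_symlinks=False)
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if not stat.S_ISREG(info.st_mode):
                continue  # ignore symlinks, sockets, devices
            total_bytes += info.st_size
            last_touched = max(info.st_atime, info.st_mtime)
            if now - last_touched > max_idle:
                stale_bytes += info.st_size
    return stale_bytes / total_bytes if total_bytes else 0.0
```

A result near 0.8 on that 4TB filesystem would suggest most of the nightly ravine is being spent protecting data nobody has touched in half a year.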

I’d suggest that a common sequence of problems when dealing with backup performance runs as follows:

- Failure to notice: Incrementally increasing backup runtimes over a period of weeks or months often go unnoticed until they’ve already gone from a manageable problem to a serious problem.

- Lack of ownership: Is a filesystem backing up slowly the responsibility of the backup administrators or the operating system administrators, or the storage administrators? If they are independent teams, there will very likely be a period where the issue is passed back and forth for evaluation before a cooperative approach is decided upon (if one is decided upon at all).

- Focus on the technical: The current technical architecture is what got you into the mess – in and of itself, it’s not necessarily going to get you out of the mess. Sometimes organisations focus so strongly on looking for a technical solution that it’s like someone who runs out of fuel on the freeway running to the boot of their car, grabbing a jerry can, then jumping back in the driver’s seat expecting to be able to drive to the fuel station. (Or, as I like to put it: “Loop, infinite: See Infinite Loop; Infinite Loop: See Loop, Infinite”.)

- Mistaking backup for recovery: In many cases the problem ends up being solved, but only for the purposes of backup, without attention to the potential impact that may have on either actual recoverability or recovery performance.

The first issue is caused by a lack of centralised monitoring. The second, by a lack of centralised management. The third, by a lack of centralised architecture, and the fourth, by a lack of IT/business alignment.
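On the monitoring point, even a crude trend check over recorded runtimes would catch the slow creep described under "failure to notice". The following is a minimal sketch using an ordinary least-squares slope over one client's backup runtimes in minutes. Where those numbers come from is left open, and the one minute per run threshold is an arbitrary assumption.

```python
def runtime_slope(runtimes_minutes):
    """Least-squares slope of runtime against run number (minutes per run)."""
    n = len(runtimes_minutes)
    if n < 2:
        raise ValueError("need at least two runs to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(runtimes_minutes) / n
    numerator = sum(
        (i - mean_x) * (y - mean_y) for i, y in enumerate(runtimes_minutes)
    )
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def is_creeping(runtimes_minutes, threshold_minutes_per_run=1.0):
    """Flag a client whose backups grow faster than the threshold per run."""
    return runtime_slope(runtimes_minutes) > threshold_minutes_per_run
```

Fed a month of nightly runtimes per client, `is_creeping` gives a simple list of systems to look at while the problem is still manageable.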

If you can seriously look at all four of those core issues and say replacing LTO-4 tape drives with LTO-5 tape drives will 100% solve a backup-ravine problem every time, you’re a very, very brave person.

If we consider that backup-performance ravine to be a real, physical one, the only way you’re going to get over it is to build a bridge, and that requires a strong cooperative approach rather than a piecemeal approach that pays scant regard for anything other than the technical.

I’ve got a ravine, what do I do?

If you’re aware you’ve got a backup-performance ravine problem plaguing your backup environment, the first thing you’ve got to do is to pull back from the abyss and stop staring into it. Sure, in some cases, a tweak here or a tweak there may appear to solve the problem, but likely it’s actually just addressing a symptom, instead. One symptom.

Backup-performance ravines should in actual fact be viewed as an opportunity within a business to re-evaluate the broader environment:

- Is it time to consider a new technical architecture?

- Is it time to consider retrofitting an architecture to the existing environment?

- Is it time to evaluate achieving better IT administration group synergy?

- Is it time to evaluate better IT/business alignment through SLAs, etc.?

While the problem behind a backup-performance ravine may not be as readily solvable as we’d like, it’s hardly insurmountable – particularly when businesses are keen to look at broader efficiency improvements.
