One of the great features in NetWorker 8.1 was Parallel Save Streams (PSS). It allows a single High Density File System (HDFS) to be split into multiple concurrent savesets, speeding up the filesystem walk and therefore the overall backup.

In NetWorker 8.2 this was expanded to also support Windows filesystems.
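The idea behind the split is easy to illustrate outside NetWorker. The following toy Python sketch is *not* how NetWorker implements PSS. It splits one directory tree by its top-level subdirectories and walks them with a small thread pool. The function names `walk_subtree` and `parallel_walk`, and the split-by-subdirectory scheme, are assumptions for illustration only.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def walk_subtree(root: str) -> tuple[int, int]:
    """Serially walk one directory tree; return (file_count, total_bytes)."""
    files = size = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            try:
                size += os.lstat(os.path.join(dirpath, name)).st_size
                files += 1
            except OSError:
                # Entry vanished or is unreadable: skip it and keep walking.
                continue
    return files, size


def parallel_walk(top: str, streams: int = 4) -> tuple[int, int]:
    """Split `top` by its immediate subdirectories and walk them concurrently.

    Hypothetical partitioning scheme for illustration only: each subdirectory
    becomes one unit of work, and at most `streams` of them run at once.
    """
    entries = [os.path.join(top, name) for name in os.listdir(top)]
    # Real (non-symlinked) subdirectories go to the worker pool; symlinks to
    # directories are neither counted nor followed, matching os.walk's default.
    subdirs = [p for p in entries if os.path.isdir(p) and not os.path.islink(p)]
    # Everything else at the top level is counted in the calling thread.
    top_files = [p for p in entries if not os.path.isdir(p)]
    files = len(top_files)
    size = sum(os.lstat(p).st_size for p in top_files)
    with ThreadPoolExecutor(max_workers=streams) as pool:
        for sub_files, sub_size in pool.map(walk_subtree, subdirs):
            files += sub_files
            size += sub_size
    return files, size
```

Threads are sufficient here because a filesystem walk, especially over NFS, spends most of its time waiting on metadata calls, and Python releases the GIL during those system calls. The naive split is also the sketch's weakness: if one subdirectory holds most of the files, a single stream still ends up doing most of the work.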

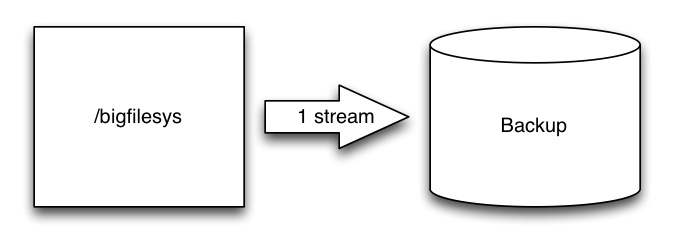

Traditionally, of course, a single filesystem or single saveset, if left to NetWorker, will be backed up as a single save operation:

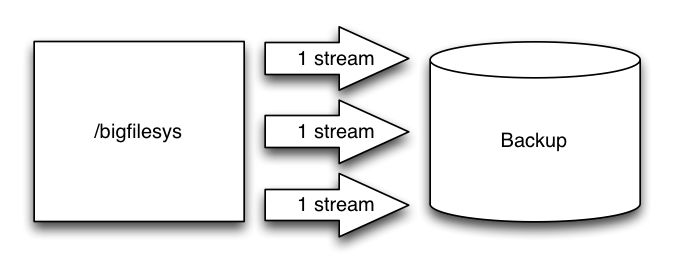

With PSS enabled, what would otherwise be a single saveset is split automatically by NetWorker and ends up looking like:

I’ve previously mentioned parallel save streams, but it occurred to me that the periodic test backups I run on my home lab server against a Synology filesystem might be the perfect way to see the difference PSS can make.

Now, we all know how fun Synology storage is, and I have a 1513+ with 5 x Hitachi 3TB HDS723030ALA640 drives in a RAID-5 configuration, which is my home NAS server*. It’s connected to my backbone gigabit network via a TP-Link SG2216 16-port managed switch, as is my main lab server, an HP MicroServer N40L with a dual-core 1.5GHz AMD Turion processor and 4GB of RAM. Hardly a powerhouse, and certainly not even a recommended NetWorker server configuration.

Synology, of course (curse them), don’t support NDMP, so the Synology filesystem is mounted read-only on the backup server via NFS and backed up through the mount point.

In a previous backup attempt using a standard single save stream, the backup device was an AFTD consisting of RAID-0 SATA drives plugged directly into the server. Here were the backup results:

`orilla.turbamentis.int: /synology/homeshare level=full, 310 GB 48:47:48 44179 files`

48 hours, 47 minutes. With saveset compression turned on.

It occurred to me recently to see whether I’d get a performance gain by switching such a backup to parallel save streams. Keeping saveset compression turned on, this was the result:

`orilla.turbamentis.int:/synology/homeshare parallel save streams summary`

`orilla.turbamentis.int: /synology/homeshare level=full, 371 GB 04:00:14 40990 files`

About 3,200 fewer files to be sure (though about 61 GB more data), but a drop in backup time from 48 hours 47 minutes down to 4 hours and 14 seconds.
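The headline figures can be checked directly from the two summary lines. This small Python sketch converts the HH:MM:SS elapsed times to seconds and works out the speedup and average throughput. It assumes NetWorker's "GB" means 1024 MB, which the summary line does not state; the helper names are my own.

```python
def to_seconds(hms: str) -> int:
    """Convert an HH:MM:SS elapsed time (hours may exceed 24) to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def throughput_mb_s(size_gb: float, hms: str) -> float:
    """Average throughput in MB/s, assuming 1 GB = 1024 MB."""
    return size_gb * 1024 / to_seconds(hms)


single = ("48:47:48", 310)  # single save stream run: elapsed, GB
pss = ("04:00:14", 371)     # parallel save streams run: elapsed, GB

speedup = to_seconds(single[0]) / to_seconds(pss[0])
print(f"elapsed speedup: {speedup:.1f}x")                                    # elapsed speedup: 12.2x
print(f"single stream: {throughput_mb_s(single[1], single[0]):.1f} MB/s")  # single stream: 1.8 MB/s
print(f"PSS: {throughput_mb_s(pss[1], pss[0]):.1f} MB/s")                  # PSS: 26.4 MB/s
```

Because the PSS run also moved more data, its average throughput improved by even more than the roughly 12x cut in elapsed time.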

If you need to do traditional backups of high density filesystems, you really should evaluate parallel save streams.

—

\* Yes, I gave in and bought a home NAS server.

Very good data.

When the source disk is less of a bottleneck during reads, more streams with NetWorker Parallel Save Streams do bring a performance improvement. The improvement here is a 12x gain, which is very positive news indeed. In our setup we have seen around a 2-3x gain with parallel save streams for a large 2TB data set.

A couple of questions about the above results:

-> Which filesystem type is being used on the source (read) side?

-> Was the filesystem cache cleared between runs (in case caching made any difference)?

Source is formatted EXT4 (the standard Synology filesystem, I believe).

Destination FWIW is formatted XFS.

Processing was happening over an NFS v3 1Gbit mount point, and there was about a month and a couple of reboots in between tests.

Hi Preston,

Thanks for your input and your ongoing efforts to help NetWorker users understand the product. I really appreciate that.

With respect to PSS, I also got similarly good results. I love this feature in general. However, there is one big issue which scares me:

The resulting save sets are treated totally independently: they have different SSIDs.

That can be really tricky because you cannot easily find out the total save set size.

But it can become very dangerous, because dependencies are not yet checked. One can easily delete any one of these save sets, leaving a corrupted residual save set behind. I have opened an RFE to get this checked, but of course you never know when, or if, it will be implemented.
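Until such a check exists, the bookkeeping can be done outside NetWorker. Below is a minimal Python sketch that assumes you have already exported each PSS stream's SSID and size (for example from an `mminfo` report) and can tag every stream with a key identifying which logical backup it belongs to. How to derive that key from the media database is not shown and is an assumption. The `SaveSet` type, its `group_key` field and both functions are hypothetical helpers, not part of NetWorker: `totals_by_backup` addresses the total-size problem, and `unsafe_deletions` flags a deletion that would leave a partial backup behind.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SaveSet:
    ssid: int
    group_key: str   # hypothetical: whatever ties the PSS streams of one backup together
    size_bytes: int


def totals_by_backup(savesets: list[SaveSet]) -> dict[str, int]:
    """Sum the sizes of all streams belonging to each logical backup."""
    totals: dict[str, int] = defaultdict(int)
    for ss in savesets:
        totals[ss.group_key] += ss.size_bytes
    return dict(totals)


def unsafe_deletions(savesets: list[SaveSet], to_delete: set[int]) -> dict[str, set[int]]:
    """Per logical backup, return the sibling SSIDs that would be left behind
    if only some of its streams were deleted. An empty result means the
    proposed deletion removes whole backups only."""
    members: dict[str, set[int]] = defaultdict(set)
    for ss in savesets:
        members[ss.group_key].add(ss.ssid)
    orphans: dict[str, set[int]] = {}
    for key, ssids in members.items():
        doomed = ssids & to_delete
        if doomed and doomed != ssids:
            orphans[key] = ssids - doomed
    return orphans
```

For instance, if a backup's four streams share the same `group_key`, passing just one of their SSIDs to `unsafe_deletions` returns the other three as would-be orphans.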

So be a bit careful.