When it comes to extending to object storage for long-term retention, you really can’t beat Data Domain. Cloud Tiering is built into the operating system, and smart backup applications such as NetWorker and Avamar can work seamlessly with Cloud Tier for movement and recall operations.

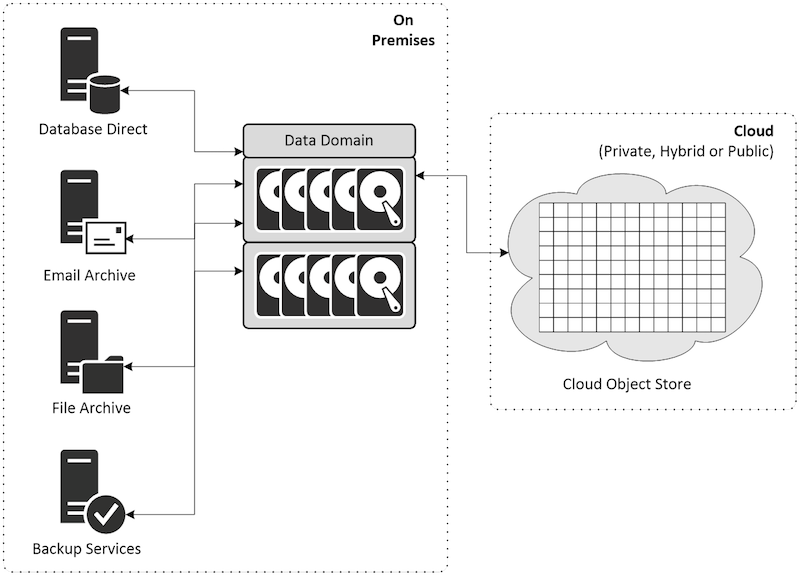

I’ve talked about Cloud Tiering in the past, and the diagram above shows the high-level conceptual approach. You write to the Data Domain active tier; then, once data is beyond a particular age, you can have it moved out to object storage. That object storage can be public cloud (e.g., AWS, Azure), or it can be something suitable on-premises, such as Dell EMC Elastic Cloud Storage (ECS).

Fully integrated backup applications will handle that data movement for you, but even if you don’t have a fully integrated backup application, Data Domain can have the tiering configured at the Mtree level.
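As a rough illustration of the age-based idea, here’s a minimal Python sketch of how files might be selected for movement. It assumes age is measured from each file’s last-modified time and that a file qualifies only when it is strictly older than the threshold. `BackupFile` and `eligible_for_cloud_tier` are hypothetical names, and this is not how DDOS implements its policy internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass
class BackupFile:
    """Hypothetical record for a file stored in an Mtree."""
    path: str
    modified: datetime  # assumption: age is measured from last-modified time


def eligible_for_cloud_tier(
    files: List[BackupFile], age_threshold_days: int, now: datetime
) -> List[BackupFile]:
    """Return the files strictly older than the age threshold (toy age-based policy)."""
    cutoff = now - timedelta(days=age_threshold_days)
    return [f for f in files if f.modified < cutoff]


# Usage: with a 30-day threshold, only the 45-day-old file qualifies.
now = datetime(2019, 1, 15, tzinfo=timezone.utc)
files = [
    BackupFile("/data/col1/example/backup-a", now - timedelta(days=10)),
    BackupFile("/data/col1/example/backup-b", now - timedelta(days=45)),
]
print([f.path for f in eligible_for_cloud_tier(files, 30, now)])
# ['/data/col1/example/backup-b']
```

Note that selecting eligible files says nothing yet about how much space moving them would free; on a deduplicating system that depends on what else still references their data.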

But how much space will you free up? That’s always the golden question.

Well, with DDOS 6.2, you’ve now got the option of testing it out yourself, and you don’t need Cloud Tier enabled or licensed on your Data Domain to run that test. In fact, it’s so simple that it barely needs a blog article; the main point is knowing that it’s available to you.

From the CLI, Data Domain Cloud Tiering is controlled via the data-movement command. DDOS 6.2 adds a new option, the eligibility check, which works like this:

```
# data-movement start eligibility-check age-threshold nDays mtrees mtreeList
```
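If you script these checks, a small helper can assemble the command string. This is a hypothetical Python sketch: the function name is made up, and joining multiple Mtrees with commas is an assumption, so confirm the list separator against the DDOS command reference before relying on it. The /data/col1/ prefix check simply mirrors the Mtree path used in the lab example.

```python
from typing import Sequence

MTREE_PREFIX = "/data/col1/"  # Mtree paths as they appear in the example session


def eligibility_check_command(age_threshold_days: int, mtrees: Sequence[str]) -> str:
    """Build a data-movement eligibility-check command line (hypothetical helper)."""
    if age_threshold_days <= 0:
        raise ValueError("age threshold must be a positive number of days")
    if not mtrees:
        raise ValueError("at least one Mtree is required")
    for mtree in mtrees:
        if not mtree.startswith(MTREE_PREFIX):
            raise ValueError(f"unexpected Mtree path: {mtree}")
    # Assumption: multiple Mtrees are comma-separated; verify in the DDOS CLI reference.
    mtree_list = ",".join(mtrees)
    return (
        "data-movement start eligibility-check "
        f"age-threshold {age_threshold_days} mtrees {mtree_list}"
    )


print(eligibility_check_command(30, ["/data/col1/orilla"]))
# data-movement start eligibility-check age-threshold 30 mtrees /data/col1/orilla
```

Validating inputs before anything reaches the Data Domain keeps a typo in a script from launching a check against the wrong Mtree.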

Here’s an example of it running on a 0.5TB DDVE in my lab; as you can imagine, there’s not a lot of data to review here:

```
sysadmin@adamantium# data-movement start eligibility-check age-threshold 30 mtrees /data/col1/orilla
Data-movement eligibility-check started
Run data-movement status for the status
```

In this case, I’m checking a single Mtree, the one owned by my NetWorker server, and looking at what would happen if I turned on Cloud Tiering for all data older than 30 days.

Depending on how much data you have, the eligibility check may take a while to run: the system is building up a profile of how much data could be freed from your active tier by applying the age threshold you’ve specified. Here’s the output of me checking the status part-way through, and then the final output:

```
sysadmin@adamantium# data-movement status
Data-movement to cloud tier:
----------------------------
Data-movement eligibility-check:
28% complete; Elapsed time: 0:01:03

sysadmin@adamantium# data-movement status
Data-movement to cloud tier:
----------------------------
Data-movement eligibility-check was started on Jan 15 2019 20:27 and completed on Jan 15 2019 20:31
Cleanable active-tier space (post-comp): 14.50 GiB,
Files inspected: 3286, Files eligible: 893
```
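If you’d rather not re-run the status command by hand, the output is regular enough to parse. The following Python sketch is hypothetical: the regular expressions are written only against the sample output shown here, the MiB/TiB unit handling is an assumption (the sample only shows GiB), and `run_status` stands in for whatever you use to execute data-movement status on the Data Domain, for instance over SSH.

```python
import re
import time
from dataclasses import dataclass
from typing import Callable, Optional

# Assumption: only GiB appears in the sample output; MiB/TiB handling is a guess.
_UNIT_TO_GIB = {"MiB": 1 / 1024, "GiB": 1.0, "TiB": 1024.0}

_PROGRESS = re.compile(r"(\d+)% complete")
_COMPLETED = re.compile(r"eligibility-check was started on .+ and completed on .+")
_CLEANABLE = re.compile(
    r"Cleanable active-tier space \(post-comp\):\s*([\d.]+)\s*(MiB|GiB|TiB)"
)
_FILES = re.compile(r"Files inspected:\s*(\d+),\s*Files eligible:\s*(\d+)")


@dataclass
class EligibilityStatus:
    percent_complete: Optional[int]
    completed: bool
    cleanable_gib: Optional[float]
    files_inspected: Optional[int]
    files_eligible: Optional[int]


def parse_status(text: str) -> EligibilityStatus:
    """Extract progress and results from 'data-movement status' output."""
    progress = _PROGRESS.search(text)
    cleanable = _CLEANABLE.search(text)
    files = _FILES.search(text)
    return EligibilityStatus(
        percent_complete=int(progress.group(1)) if progress else None,
        completed=_COMPLETED.search(text) is not None,
        cleanable_gib=(
            float(cleanable.group(1)) * _UNIT_TO_GIB[cleanable.group(2)]
            if cleanable
            else None
        ),
        files_inspected=int(files.group(1)) if files else None,
        files_eligible=int(files.group(2)) if files else None,
    )


def wait_for_eligibility_check(
    run_status: Callable[[], str],
    poll_seconds: float = 60.0,
    max_polls: int = 1000,
    sleep: Callable[[float], None] = time.sleep,
) -> EligibilityStatus:
    """Poll a caller-supplied status function until the check reports completion."""
    for _ in range(max_polls):
        status = parse_status(run_status())
        if status.completed:
            return status
        sleep(poll_seconds)
    raise TimeoutError("eligibility check did not complete within max_polls")
```

Injecting both the status runner and the sleep function keeps the parsing and polling logic testable without a Data Domain to hand.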

So based on the age profile I’d given, the Data Domain estimated that I’d be able to free up around 14.5GiB from my lab DDVE if I enacted that data movement policy. That doesn’t sound like a lot, but this is a lab server: most data is kept on it for less than a month, and longer-running backups tend to be of very similar data (e.g., VMs whose content barely changes), so moving the older copies frees very little unique data. (For instance, I’m getting 314.8x, or 99.7%, deduplication on that DDVE thanks to repetitive backups.)
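Why does a highly deduplicated system free so little? Older backups share most of their segments with newer ones. The toy Python model below is a hypothetical illustration, not how DDOS calculates its estimate: each file is a set of segment fingerprints, a segment can only be cleaned from the active tier if no remaining file references it, and (as a simplifying assumption) every unique segment in a moved file has to be written to the cloud tier. It also shows why the amount written to object storage can be much larger than the space freed. (Incidentally, a 314.8x ratio corresponds to a reduction of 1 − 1/314.8 ≈ 99.7%.)

```python
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical toy model: each file maps to (segment fingerprints, eligible flag),
# where "eligible" means older than the age threshold. Segments are stored once.
FileMap = Dict[str, Tuple[FrozenSet[str], bool]]


def tiering_estimate(files: FileMap, segment_sizes: Dict[str, int]) -> Tuple[int, int]:
    """Return (bytes freed on the active tier, bytes written to the cloud tier).

    Toy model only: ignores compression, metadata and cleaning behaviour.
    """
    moved: Set[str] = set()  # segments referenced by files that would be moved
    kept: Set[str] = set()   # segments still referenced by files staying behind
    for segments, eligible in files.values():
        (moved if eligible else kept).update(segments)
    # Only segments that no remaining file references can be cleaned.
    freed = moved - kept
    freed_bytes = sum(segment_sizes[s] for s in freed)
    # In this model the cloud tier needs its own copy of every unique moved segment.
    moved_bytes = sum(segment_sizes[s] for s in moved)
    return freed_bytes, moved_bytes


# Usage: two old VM backups overlap heavily with the newest one.
sizes = {s: 4 for s in "abcde"}
files = {
    "vm-backup-old1": (frozenset("abc"), True),
    "vm-backup-old2": (frozenset("abd"), True),
    "vm-backup-new": (frozenset("abce"), False),
}
print(tiering_estimate(files, sizes))
# (4, 16)
```

Only segment "d" is unique to the old backups, so just 4 units are freed, while 16 units would land in object storage.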

If you haven’t upgraded to DDOS 6.2 yet, this is a really good reason to get the upgrade done. As with any upgrade to something as fundamental as your Data Domain operating system, check the compatibility guide first and confirm your backup software is supported on the new version, or plan the bigger change accordingly.

Hey, while you’re here, don’t forget to check out Data Protection: Ensuring Data Availability.

Preston,

Having run the analysis, can I safely assume that my Cloud Tier license and S3 storage requirement will be the same as the amount of active-tier space freed up (14.5GiB in your example above), at least as a starting point?

Regards

Guido

Hi Guido,

The object storage requirements and Cloud Tier licensing will be different from the amount freed up. For an existing system, you’d also assess the workloads on it to understand the full amount of data that will need to go into object storage, and it’s that size that determines the Cloud Tier licensing requirement (as a starting point).

Cheers.