Over the weekend I wrote up a piece about how snapshots are not a valid replacement for enterprise backup. The timing was prompted by NetApp recently abandoning development of their VTL systems, and by the discussions this triggered, but it was something that I’d had waiting in the wings for a while.

It’s fair to say that discussions on snapshots and backups polarise a lot of people; I’ll fully admit that I’m on the “snapshots can’t replace backups” side of the argument.

I want to go into this in a little more detail. First I’ll point out in fairness that there are people willing to argue the other side that don’t work for NetApp, in the same way that I don’t work for EMC. One of those is the other Preston – W. Curtis Preston, and you can read his articulate case here. I’m not going to spend this article going point for point against Curtis – it’s not the primary point of discussion I want to make in this entry.

Moving away from vendors and consultants, another very interesting opinion, from the customer perspective, comes from Martin Glassborow’s Storagebod blog. Martin brings up some valid customer points – chiefly that snapshots and replication represent extreme hardware lock-in. Some would argue that any vendor’s backup product represents vendor lock-in as well, and this is partly right – though remember it’s not so difficult to keep a virtual machine around with the “last state” of the previous backup application available for recovery purposes. Keeping old and potentially obsolete NAS technology running to facilitate older recoveries after a vendor switch can be a little more challenging.

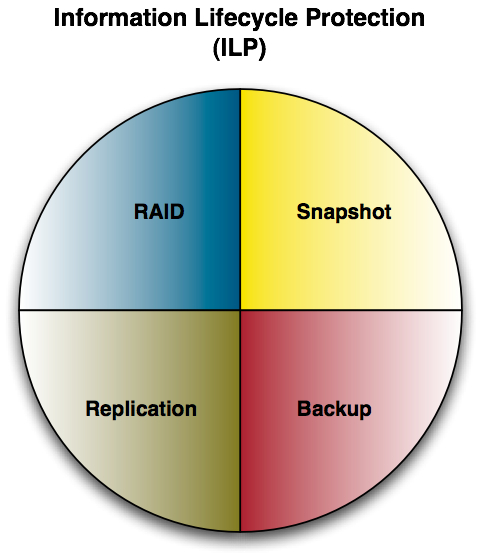

To get onto what I want to raise today, I need to revisit a previous topic as a means of further explaining my position. Let’s look for instance at my previous coverage of Information Lifecycle Management (ILM) and Information Lifecycle Protection (ILP). You can read the entire piece here, but the main point I want to focus on is my ILP ‘diagram’:

One of the first points I want to make from that diagram is that I don’t exclude snapshots (and their subsequent replication) from an overall information lifecycle protection mechanism. Indeed, depending on the SLAs involved, they’re going to be practically mandatory. But, to use the analogy offered by the above diagram, they’re just pieces of the pie rather than the entire pie.

I’m going to extend my argument a little now, and go beyond just snapshots and replication, so I can elucidate the core reasons why I don’t like replicated snapshots as a permanent backup solution. Here are a few other things I don’t like as a permanent backup solution:

- VTLs replicated between a primary and disaster recovery site, with no tape out.

- ADV_FILE (or other products’ disk backup solutions) cloned/duplicated between the primary and disaster recovery site, with no tape out.

- Source based deduplication products with replication between two locations, with no tape out.

My fundamental objection to all of these solutions is the long-term risk of failure created by keeping everything “online”. Maybe I’m a pessimist, but when I’m considering backup/recovery and disaster recovery solutions, I firmly believe that I’m being paid to consider all likely scenarios. I don’t personally believe in luck, and I won’t stake a backup/disaster recovery solution on luck either. The old Clint Eastwood quote comes to mind here:

You’ve got to ask yourself one question: ‘Do I feel lucky?’ Well, do ya, punk?

When it comes to your data, no, no I don’t. I don’t feel lucky, and I don’t encourage you to feel lucky either. Instead I rely on solid, well-protected systems with offline capabilities. Thus, I plan for at least some level of cascading failures.

It’s the offline component that’s most critical. Do I want all my backups for a year online, only online, even with replication? Even more importantly – do I want all your backups online, only online, even with replication? The answer remains a big fat no.

The simple problem with any solution that doesn’t provide for offline storage is that, in my opinion, it brings the risk of cascading failures into play too easily. It’s like putting all storage for your company on a single RAID-5 LUN and not having a hot spare. Sure you’re protected against that first failure, but it’s shortly after the first failure that Murphy will make an appearance in your computer room. (And I’ll qualify here: I don’t believe in luck, but I’ve observed over the years on many occasions that Murphy’s Law rules in computer rooms as well as in other places.) Or to put it another way: you may hope for the best, but you should plan for the worst. Let’s imagine a “worst case scenario”: a fire starts in your primary datacentre 10 minutes after upgrade work on the array that receives replicated snapshots at your disaster recovery site runs into problems with firmware, leaving that array inaccessible until vendor upgrades are complete. Or worse again, it leaves storage corrupted.

Or if that seems too extreme, consider a more basic failure: a contractor near to your primary datacentre digs through the cables linking your production and disaster recovery sites, and it’s going to take 3 days to repair. Suddenly you’ve got snapshots and no replication. Just how lucky does that leave you feeling? Personally, I feel slightly naked and vulnerable when I have a single backup that’s not cloned. If suddenly none of my backups were getting duplicated, and I had no easy access to my clones, I’d feel much, much worse. (And that full body shiver I do from time to time would get very pronounced.)

All this talk of a single-instance failure frequently leads proponents of snapshots+replication-only designs to suggest that a good design will see 3-way replication, so there are always two backup instances. This doubles a lot of costs while merely moving the failure point just a jump to the left. On the other hand, offline backup where there’s the backup from today, the backup from yesterday, the backup from the day before … the backup from last week, the backup from last month, etc., all offline, all likely on different media – now that’s failure mitigation. Even if something happens and I can’t recover the most recent backup, in many recovery scenarios I can go back one day, two days, three days, etc. Oh yes, you can do that with snapshots too, but not if the array is a smoking pile of metal and plastic fused to the floor after a fire. In some senses, it’s similar to the old issue of trying to get away from cloning by backing up from the production site to media on the disaster recovery site. It just doesn’t provide adequate protection. If you’re thinking of using 3-way replication, why not instead have a solution that uses two entirely different types of data protection to guard against extreme levels of failure?

It’s possible I’ll have more to say on this in the coming weeks, as I think it’s important, regardless of your personal viewpoint, to be aware of all of the arguments on both sides of the fence.