The history of NetWorker and synthetic fulls is an odd one. For years, NetWorker had the concept of a ‘consolidated’ backup level, which in theory was a synthetic full. The story goes that this code came to Legato via a significant European partner, but once it came into the fold, it was never fully maintained. Consequently, it was used sparingly at best, and not without some hesitation when it was required.

NetWorker version 8, however, saw the synthetic full code completely rewritten from the ground up – hence its renaming from the old ‘consolidate’ to ‘synthetic full’. Architecturally, this is a far more mature implementation of synthetic fulls than NetWorker previously had. It’s something that can be trusted, and it has been designed with expansion and continued development in mind.

If you’re not familiar with the concept of a synthetic full, it’s fairly easy to explain – it’s the notion of generating a new full backup from a previous full and one or more incremental backups, without actually running a new full backup. The advantage should be clear – if the time required to do regular or semi-regular full backups the traditional way (i.e., walking, reading and transmitting the entire contents of a filesystem) is prohibitive, then synthetic full backups allow you to keep regenerating a new full backup without incurring that cost. The two primary scenarios where this might happen are:

- Where the saveset in question is too large;

- Where the saveset in question is too remote.

In the first case, we’d call it a local-bandwidth problem, in the second, a remote-bandwidth problem. Either way, it comes down to bandwidth.

Synthetic fulls aren’t a universal panacea. For the time being, they’re designed to work with filesystem savesets only; VMware images, databases, etc., aren’t yet compatible with synthetic fulls. (Indeed, the administration manual lists all the “won’t-work-fors” and then ends with “Backup command with save is not used”, which probably sums it up most accurately.)

For the time being, synthetic fulls are also primarily suited to non-deduplicating devices, and to either physical or virtual tape. There’s as yet no intelligence in the process of generating the new full backup when the backups have been written to true-disk style devices (AFTDs or Boost). That being said, there’s nothing preventing you from using synthetic full backups in such situations; you’ll just be doing it in a non-optimal way. Of course, the biggest caveat for using synthetic full backups with physical or virtual tape is that each unit of media only supports one read operation or one write operation at a time, and this lack of concurrency may cause the process to take considerably longer than normal. Therefore, a highly likely way to use synthetic full backups might be reading from advanced file type devices or DD Boost devices, and writing out to physical or virtual tape.

The old ‘consolidate’ level has been completely dropped in NetWorker 8; instead, we now have two new levels introduced into the equation:

- synth_full – Runs a synthetic full backup operation, merging the most recent full and any subsequent backups into a new, full backup.

- incr_synth_full – Runs a new incremental backup, then immediately generates a synthetic full backup as per the above, so that the new full captures the most up-to-date state of the saveset.
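The difference between the two levels can be modelled in a few lines. This is a minimal, hypothetical sketch of the general synthetic full idea, not NetWorker’s implementation. A backup is represented as a mapping of file path to version, and `Backup`, `synth_full` and `incr_synth_full` are illustrative names only. Deletions and renames aren’t modelled.

```python
from dataclasses import dataclass, field
from typing import Dict, List


# Hypothetical model: a backup is a level plus a mapping of path -> version.
# Illustrates the synthetic full idea only; deletions/renames are ignored.
@dataclass
class Backup:
    level: str                                   # "full", "incr" or "synth_full"
    files: Dict[str, str] = field(default_factory=dict)


def synth_full(chain: List[Backup]) -> Backup:
    """Merge the most recent full and every later backup into a new full.

    `chain` is assumed to be in chronological order and to contain at least
    one full (max() raises ValueError otherwise). No client data is read:
    the new full is built purely from existing backups.
    """
    # Index of the most recent full (a previous synthetic full also counts).
    last_full = max(
        i for i, b in enumerate(chain) if b.level in ("full", "synth_full")
    )
    merged: Dict[str, str] = {}
    for backup in chain[last_full:]:
        merged.update(backup.files)  # newer versions overwrite older ones
    return Backup("synth_full", merged)


def incr_synth_full(chain: List[Backup], changed_now: Dict[str, str]) -> Backup:
    """Run a new incremental first, then immediately synthesise a full."""
    chain.append(Backup("incr", dict(changed_now)))  # the new incremental
    return synth_full(chain)
```

For example, a full of `f1` and `f2`, followed by an incremental changing `f2` and adding `f3`, yields a synthetic full containing `f1`, the newer `f2` and `f3`. `incr_synth_full` additionally folds in whatever changed since that incremental.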

This means the generation of a synthetic full can happen in one of two ways – as an operation completely independent of any new backup, or combined with a new backup. There are advantages to this: you can separate the generation of a synthetic full from regular backup operations, moving it to a time outside the normal backup window (e.g., the middle of the day).

Indeed, while we’re on that topic, there are a few recommendations about the operational aspects of synthetic full backups that are worth quickly touching on (these are elaborated upon in more detail in the v8 Administration guide):

- Do not mix Windows and Unix backups in the same group when synthetic fulls are generated.

- Do not run more than 20 synthetic full backup mergers at any one time.

- The generation of a synthetic full backup requires two units of parallelism – be aware of this when determining system load.

- Turn “backup renamed directories” on for any client which will get synthetic full backups.

- If the saveset(s) to receive synthetic fulls are specified manually, ensure they use a consistent case, and that all Windows drive letters are specified in upper-case.

- Don’t mix clients in a group that do synthetic full backups with others that don’t.
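Several of these rules are mechanical enough to check before a group goes live. The following is a hypothetical pre-flight checker, not a NetWorker tool. The `Client` fields are invented for illustration, and the only figures taken from the recommendations are the cap of 20 concurrent mergers and the two units of parallelism per merge. It assumes one merger per synthetic saveset. It also only checks the drive-letter half of the case rule.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Figures from the recommendations above; everything else is hypothetical.
MAX_CONCURRENT_MERGES = 20
PARALLELISM_PER_MERGE = 2


@dataclass
class Client:
    name: str
    os: str                           # "windows" or "unix"
    synthetic: bool                   # does this client receive synthetic fulls?
    backup_renamed_dirs: bool = False
    savesets: List[str] = field(default_factory=list)


def check_group(clients: List[Client]) -> List[str]:
    """Return rule violations for one hypothetical group definition."""
    problems: List[str] = []
    synth = [c for c in clients if c.synthetic]
    if not synth:
        return problems  # the rules only matter when synthetic fulls are generated
    if len(synth) != len(clients):
        problems.append("group mixes synthetic and non-synthetic clients")
    if len({c.os for c in clients}) > 1:
        problems.append("group mixes Windows and Unix clients")
    for c in synth:
        if not c.backup_renamed_dirs:
            problems.append(f"{c.name}: 'backup renamed directories' is off")
        for s in c.savesets:
            # Manually specified Windows savesets should use upper-case drive letters.
            if re.match(r"^[a-z]:", s):
                problems.append(f"{c.name}: lower-case drive letter in {s!r}")
    return problems


def merge_parallelism(concurrent_merges: int) -> int:
    """Parallelism consumed by concurrent merges; rejects more than the cap."""
    if concurrent_merges > MAX_CONCURRENT_MERGES:
        raise ValueError(
            f"{concurrent_merges} merges exceeds {MAX_CONCURRENT_MERGES}"
        )
    return concurrent_merges * PARALLELISM_PER_MERGE
```

With the cap of 20 mergers, the merges alone can consume up to 40 units of parallelism. That load needs to be factored in alongside any regular backups running at the same time.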

As you may imagine from the above rules, a simple rule of thumb is to only use synthetic full backups when you have to. Don’t just go turning on synthetic fulls for every filesystem on every client in your environment.

A couple of extra options have appeared in the advanced properties of the NSR group resource (Group -> Advanced -> Options) to assist with synthetic full backups. These are:

- Verify synthetic full – enables advanced verification of the client index entries associated with the synthetic full at the completion of the operation.

- Revert to full when synthetic fails – allows a group to automatically run a standard full backup in the event of a synthetic full backup failing.

For any group in which you perform synthetic fulls, you should definitely enable the first option. Depending on bandwidth requirements, etc., you may choose not to enable the second option, but if so, you’ll need to closely monitor the generation of synthetic full backups and intervene manually should a failure occur.
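The trade-off behind the second option can be sketched as a small decision function. This is a hypothetical model of the choice, not how NetWorker implements it. `run_with_fallback` and its callable parameters are invented names: `synth` and `full` stand in for the two kinds of backup, and `notify` for whatever alerting you use.

```python
from typing import Callable, Optional


def run_with_fallback(
    synth: Callable[[], str],
    full: Callable[[], str],
    revert_to_full: bool,
    notify: Callable[[str], None],
) -> Optional[str]:
    """Hypothetical model of 'Revert to full when synthetic fails'."""
    try:
        return synth()
    except RuntimeError as err:
        if revert_to_full:
            # Automatic recovery, at the cost of a full walk/read/transmit.
            return full()
        # No fallback: someone must notice the failure and intervene.
        notify(f"synthetic full failed, manual action needed: {err}")
        return None
```

With the option enabled, a failure quietly costs you the bandwidth you were trying to save. With it disabled, the bandwidth is protected, but the alert becomes the only thing standing between you and a missing full.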

Interestingly, the administration manual for NetWorker 8 states that another use of the “incr_synth_full” level is to force the repair of a synthetic full backup in a situation where an intervening incremental backup was faulty (i.e., it failed to read during the creation of the synthetic full) or when an intervening incremental backup did not have “backup renamed directories” enabled for the client. In such scenarios, you can manually run an incr_synth_full level backup for the group.

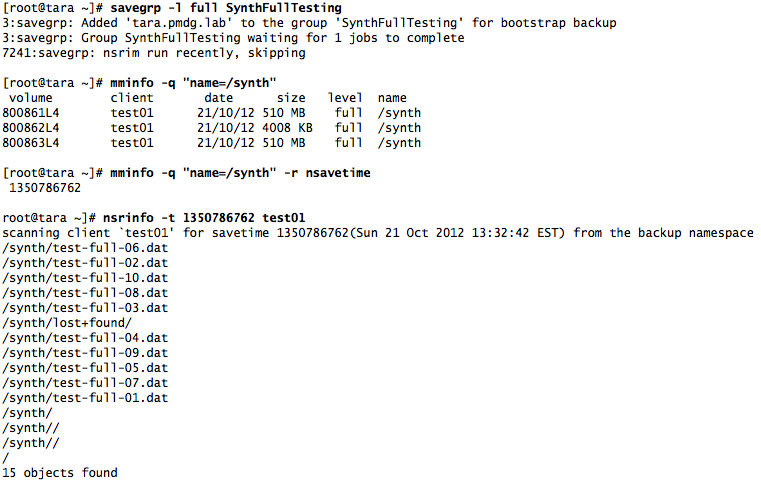

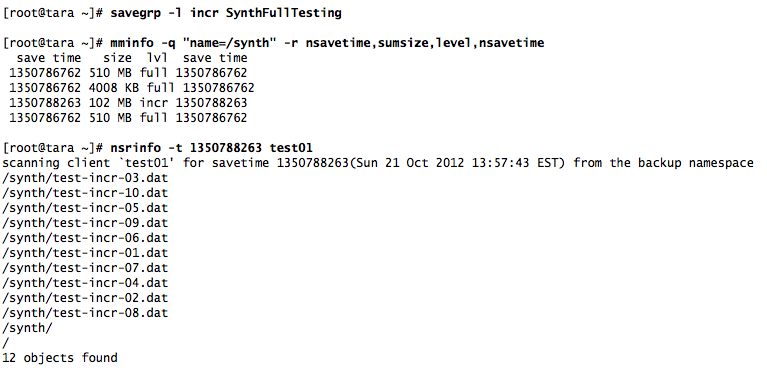

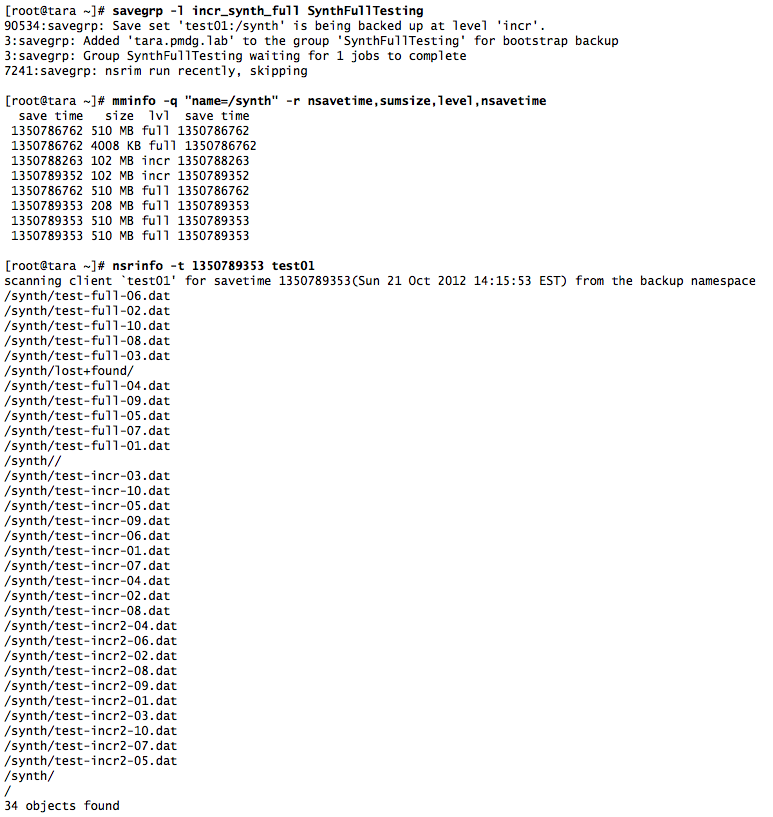

Following is an annotated example of using synthetic full backups:

In the above, I’ve picked a filesystem called ‘/synth’ on the client ‘test01’ to backup to. Within the filesystem, I’ve generated 10 data files for the first, full backup, then listed the content of the backup at the end of it.

In the above, I generated a bunch of new datafiles in the /synth filesystem before running an incremental backup, naming them appropriately. I then listed the contents of the backup, again.

Finally, I generated a new set of datafiles in the /synth filesystem and ran an incr_synth_full backup; the resulting backup incorporated all the files from the full backup, plus all the files from the incremental backup, plus all the new files:

Overall, the process is fairly straightforward, and fairly easy to run. As long as you follow the caveats associated with synthetic full backups, and use them accordingly, you should be able to integrate them into your backup regime without too much fuss.

One more thing…

There’s just one more thing to say about synthetic full backups, and this applies to any product where you use them, not just NetWorker.

While it’s undoubtedly the case that in the right scenarios, synthetic full backups are an excellent tool to have in your data protection arsenal, you must make sure you don’t let them blind you to the real reason you’re doing backups – to recover.

If you want to do synthetic full backups because of a local-bandwidth problem (the saveset is too big to regularly perform a full backup), then you have to ask yourself this: “Even if I regularly get a new full backup without running one, do I have the time required to do a full recovery in normal circumstances?”

If you want to do synthetic full backups because of a remote-bandwidth problem (the saveset is too large to comfortably back up over a WAN link), then you have to ask yourself this: “If my link is primarily sized for incremental backups, how will I get a full recovery back across it?”
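A quick back-of-the-envelope calculation makes both questions concrete. The figures below are hypothetical: a 2 TB saveset, 20 GB of daily change and a 100 Mbit/s WAN link. The `efficiency` parameter is an assumed fraction of the nominal link rate you actually achieve.

```python
def transfer_hours(
    size_bytes: float, link_bits_per_second: float, efficiency: float = 1.0
) -> float:
    """Hours needed to move `size_bytes` across a link.

    `efficiency` (0 < efficiency <= 1) is the assumed fraction of the nominal
    link rate actually achieved; overheads and contention reduce it.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = (size_bytes * 8) / (link_bits_per_second * efficiency)
    return seconds / 3600


# Hypothetical figures: 2 TB saveset, 20 GB daily change, 100 Mbit/s WAN link.
FULL_BYTES = 2e12
DAILY_INCR_BYTES = 20e9
WAN_BPS = 100e6

if __name__ == "__main__":
    print(f"daily incremental: {transfer_hours(DAILY_INCR_BYTES, WAN_BPS):.1f} h")
    print(f"full recovery:     {transfer_hours(FULL_BYTES, WAN_BPS):.1f} h")
```

Even at a perfect 100% efficiency, the daily incremental crosses the link in under half an hour, while a full recovery of the same saveset takes roughly 44 hours. A link that comfortably handles the backups can still be nowhere near adequate for the recovery.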

The answer to either question is unlikely to be straightforward, and it again highlights the fact that data backup and recovery designs must fit into an overall Information Lifecycle Protection framework, since it’s quite simply the case that the best and most comprehensive backup in the world won’t help you if you can’t recover it fast enough. To understand more on that topic, check out “Information Lifecycle Protection Policies vs Backup Policies” over on my Enterprise Systems Backup blog.

Hi,

Has anyone tested NDMP synthetic fulls with a NetApp filer? If so, were you able to recover?

Regards,

Hans-Christian

Given the administration manual explicitly states not to use synthetic full backups on NDMP savesets, I would advise against this. Even if (somehow) it works, it will be totally unsupported by EMC.

Has anyone tried NetWorker v8 synthetic fulls in an environment with a number (5 – 10) of Windows file servers, each containing a number of relatively large partitions (500GB – 1TB), with each partition containing millions of files? I’d be interested in hearing about your experience, or about the viability of doing so.

Regards,

I’ve not personally tried that sort of configuration but there’s no reason why it wouldn’t work. (Note though that a key problem with dense filesystems is actually the walk time of the incremental backups.)

We are still using 7.6.3 and have VADP full and incremental backups running. Is it even worth running the incremental backups, or should we just do fulls more frequently?

Generally speaking, if you’re wanting to use VADP, I’d recommend an upgrade to 8.x, where the VADP code and options are more advanced.

I do not agree with you regarding the bandwidth required to recover. Backups are performed on a daily basis, while a complete recovery hopefully never happens. When it does happen, it would be necessary to restore the complete backup to a removable disk, or even a small NAS appliance, that would then travel for 1-2 days. At least you’ll get your valuable data back, even with some downtime and manual work required.

Regards,

Christophe

You’re entitled to disagree of course, but I think you’re wrong. You’re basically gambling that you never need to do a full recovery, which isn’t really a good strategy in my view.

If, however, you adopted an appropriate ILP design to substantially reduce the need to do a full recovery (e.g., some other form of replication to closer storage as well), then yes, at that point you could work with a reduced risk of having to do a full recovery.