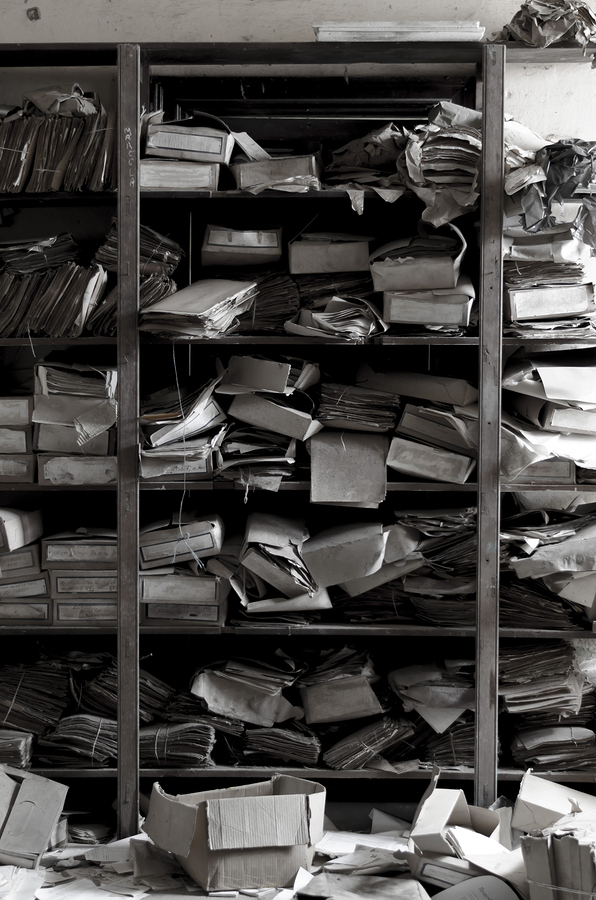

I’ve seen a lot of talk lately about data hoarding. Data can bring us new insights, new business opportunities, and…

There are three hard questions that every company must be prepared to ask when it comes to data: Why do…

Continuing from last week’s post on dark data, I want to spend a little more time on data awareness classification…

We’ve all heard the term Big Data – it’s something the vendors have been ramming down our throats with the same…

This is the third post in the four-part series, “Data lifecycle management”. The series started with “A basic lifecycle”,…

This is part 2 in the series, “Data Lifecycle Management”. Penny-wise data lifecycle management refers to a situation where companies…

I’m going to run a few posts about overall data management, and central to the notion of data management is…