Years ago, one of the most common terms I’d hear in IT was “vendor lock-in”. It was, to all intents and purposes, the IT world’s ghosts-by-the-campfire or monsters-under-the-bed story. Imagine a situation where you have the same hardware and software vendor, or where you have the same primary storage and compute vendor. They might charge you more. That was, in effect, the existential threat seemingly posed by having a single vendor.

And maybe, for a time, it was a concern. But it also used to lead to some rather bizarre purchasing decisions, even sometimes extending into the integration space as well. “We can’t buy our tape library and our backup software from the same system integrator” was something I heard a few times … which always seemed good to the procurement department, but less helpful to the backup operations team when they were trying to resolve an issue that might be tape drive related or might be backup software related. Fingers at 40 paces!

The overall IT market has grown up a lot since then – on all fronts. In a mature market, businesses tend to want less complexity in their IT environment, and less risk of incompatibility. In fact, independent studies tend to show that as the number of vendors increase in an environment (particularly around offering a similar function, such as say, data protection), the risk and cost of data loss tends to increase in a fairly commensurate fashion, too. On the flip-side, vendors who can provide a greater breadth and depth of services to a business aren’t so much inclined to charge like a wounded bull, but to provide cross-functional pricing processes (look at the rise of enterprise license agreements, for instance) to enable flexible and adaptive consumption. Vendors (good vendors, that is) learn from their customers, and by getting more involved in their customers business, build up increased operational, tactical and strategic awareness of the market, which benefits everyone.

It’s ironic then, that the people I’m now most likely to hear whisper vendor lock-in like some horror story are the big-scale public cloud providers. “Come with us! You’ll never need to worry about vendor lock-in again!”

Huh?

Look, my opinion has evolved on public cloud quite a lot. Long-time blog readers will know that I was pretty savage relating to public cloud earlier on, and this was really driven by the simple fact that people would move their systems into public cloud and assume that everything would be protected, which was demonstrably false. Public cloud providers are, by and large, focused on providing infrastructure level protection. Data protection? That’s up to you.

Public cloud may very well be a way to avoid the 90s bogeyman of vendor lock-in, if your business still frets about such a thing, but does it come with its own risks? Well, yes. For want of a better term, I’m going to call it being a data hostage.

In many public cloud situations, you’re charged for data egress, of course. (It is, in fact, at times reminiscent of Master of the House from Les Miserables.) That’s not always the case, but it’s certainly the typical usage agreement most businesses end up having with the likes of AWS and Azure.

If your public cloud usage is more compute driven than data driven, that won’t actually be an issue, of course, but I want to touch on a particularly valid example that’s close to my heart: long term retention.

When public cloud first became popular, there was a lot of Cloud First! discussions taking place. That led to some interesting challenges – such as consuming an entire annual IT budget in just a few months. That’s not the public cloud provider’s fault, of course, but as the market has matured, it’s led to a more mature discussion and architectural style best referred to as Cloud Fit. This involves fully understanding a workload you’re evaluating for cloud purposes:

- How much CPU/RAM does it use?

- How much storage does it use?

- How long does the data need to be kept for?

- How will you protect the data?

- What is the chance of there being elastic resource requirements?

There’s more to it than that, of course. The point is to assemble some numbers that you can then plug into a cost analysis – how much will it cost to procure and deploy the infrastructure to run the workload on-premises, and how much will it cost to run it in public cloud? Let’s consider just a simple scenario – you might need to provide a service to the business, where the infrastructure costs associated with the service is $100,000 over a three year period of interest. Now, if the public cloud provider comes and tells you that those same resources will cost $5,000 a month to run in their environment, then doing a 1-month or even a 1-year TCO comparison is going to well and truly favour public cloud: $100,000 in month 1 for buy/build, or $5,000 for cloud. Over a year? $100,000 again for buy/build vs $60,000 for public cloud. You can see the tipping point approaching here, though: at month 20, you’ll have spent as much in public cloud as you would have for the on-premises solution, and by month 36, you’ll have spent 1.8x in public cloud than you would have for the on-premises solution.

Yes, there are soft costs to be factored in as well – I do get that. But like any ‘lease vs buy’ or ‘finance vs buy’ discussion, there’s going to be some price-points to be considered in a TCO analysis. It could be in the above example that a business will decide it’s OK that over time public cloud will cost more if it’s confident the pricing can be kept consistent, in the same way that someone buying a car will know that while it’s technically cheaper to pay $26,999 to ‘drive away’, it’s sometimes more convenient to pay $646.83 per month over 48 months, effectively paying a 15% premium for the benefit of not having to walk into a car yard with $27,000 in their bank account.

I mentioned data hostage before, and so far I’ve been talking more about monthly pricing, so I want to come back to that cost. Let’s consider an enterprise long term retention requirement. Let’s say that you have a 1PB environment (front end TB – FETB), of which 20% requires long term retention. (I’m optimistically assuming that some level of data classification has been performed here.)

There’s two ways to do long term retention out of backups – monthlies retained for the entire retention period, or monthlies retained for 12 months, and yearlies retained for the longer retention period. I’m going to assume the model where there’s a focus on ensuring recovery from any individual month, and we’re looking at standard financial industry rules of 7 years retention.

So that’s 200TB a month being pushed out, and you need to keep it for 7 years. I’m also going to assume a 10:1 deduplication ratio for that data, on average. Just to keep things real, we’re going to assume a 10% annual growth rate on that data set; assuming the 10% annual growth rate is regular on a monthly basis, at the end of month 84 your post-deduplication monthly LTR volume will be almost 40TB, and the total amount of storage you’ll have consumed in LTR data is almost 2.42 PB. (By the way, since you’re paying for all that data each month that’s stored, the cumulative bill is quite interesting. A quick run through standard AWS S3 costs at the end of year 7 shows a cumulative cost of almost $2.2M US. That’s not factoring in any of the metacosts – puts and gets, etc.)

Aside: Of course, you might be thinking that’s still better than investing in on-premises hardware, but that’s only if you’re doing an OpEx (Cloud) vs CapEx (On-Premises) comparison, and you’re happy with a higher final cost to avoid up-front full purchase. That again, is a legacy approach to procuring on-premises hardware, since a variety of models now exist to provide varying levels of payment flexibility for what would have otherwise been a traditional CapEx purchase.

Second Aside: You might also think I’m being unfair mentioning costs of AWS S3 rather than Glacier … but Glacier does not represent an access model that works with deduplicated data protection (anyone who tells you different is lying – either to themselves, or you), and if the product is well architected, deduplication to S3 will cost less than compression to Glacier.

But here’s the rub: at the end of the first 7 years (when presumably you can start contemplating the deletion of year-1/month-1 data), you’ve got 2.42 PB sitting in your public cloud provider. What happens if the price goes up, or there’s a business decision to switch from cloud provider A to cloud provider B, or perhaps even a decision to return that data to the datacentre? In fact, your price is only going to continue to go up, since any data that can expire out of the storage will be eclipsed by new data that’s going into the storage. (E.g., you might be able to purge all of year-1/month-1 at 20TB, but you’ll then be adding month 85 at almost 40TB, so while there’ll be a reduction in the month-to-month cost increase, it’s hardly steady-state.)

So, if you need to pull that data out, you’re going to need some huge connectivity to do it quickly – 5TB a day for instance will take 484 days to retrieve. (That would be streaming at almost 58MB/s non-stop.)

That’s where the term data hostage comes into play. Unless someone in the business is remarkably brave and says you can disregard your legal compliance requirements to keep that data, it’s going to take you months or years to retrieve all the data, while still paying a substantial monthly fee (yes, potentially decreasing over time if you can delete the data as you move it back), or you’re facing a 7-year decreasing monthly cost as you age the data off and continue to pay the cloud vendor.

That makes the old bogeyman-fear of vendor lock-in trivial! If you buy a storage array from a vendor and after 3 years say “You know what, we’re going to use someone else”, the vendor won’t delete all the data you’re using on the array. If you’re not paying support/maintenance, you’ll be hoping you don’t have any systems failures, etc., of course, but you don’t instantly lose access to the data just because you’re no longer paying the vendor for yearly maintenance/support. In the public cloud space, if you stop paying your bill, the data gets deleted. So you have to keep paying or legally, you may find yourself in a precarious position. (Suddenly the ROI on your solution, instead of being Return On Investment looks more like Risk Of Incarceration.)

Congratulations, you’re a data hostage.

It seems implausible that the IT infrastructure of the future will be anything but hybrid cloud for most enterprise organisations. There’ll be a mix of cloud-like solutions running on-premises, and where it’s cloud-fit, there’ll be some stuff running in public cloud, too. So I’m not in any way telling you not to look at public cloud … I’m just suggesting that as you build up the amount of data in public cloud, you’re not so much avoiding vendor lock-in, you’re risking putting yourself into a data-hostage situation.

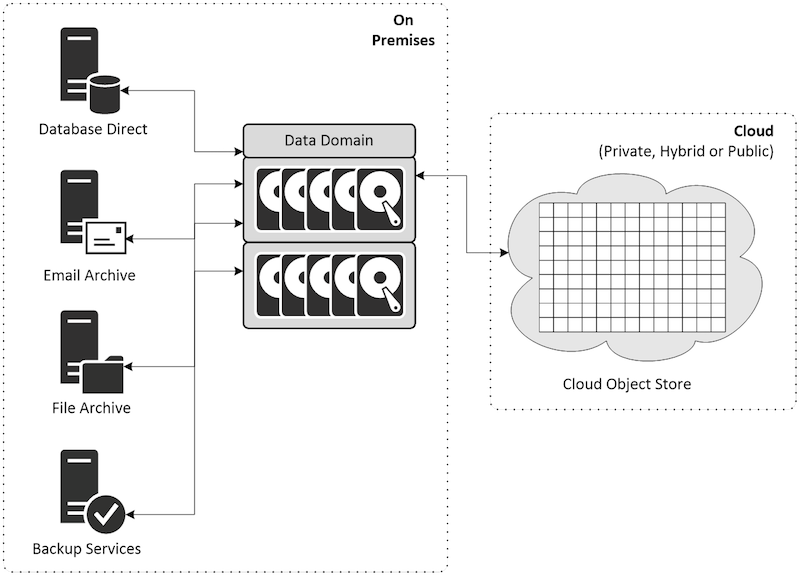

What this means for long term retention – from a data protection perspective – is that you really do need to have a data management plan for the entire lifecycle of the data. You may very well, in those situations, realise you’re better off deploying object storage on-premises, not only for cost, but to maintain full control over your data – and maximise your flexibility.