If you’re using Data Domain Boost within your NetWorker environment, most of the time your storage nodes exist simply as device access brokers. That is, rather than having the NetWorker server talk to the Data Domain, work out what path a client direct host should use, then tell the client the details, you configure storage nodes to perform that brokering. Either way, no backup data passes through the NetWorker server or the storage node; the point is to let the NetWorker server stay focused on directing the overall datazone.

If all your NetWorker devices (backup, clone and long term retention) are Data Domain Boost devices, it doesn’t matter where those devices are created, since any cloning NetWorker does will be back-ended to the Data Domain systems for deduplicated replication anyway.

While we’d usually try to optimize a configuration to allow end-to-end Data Domain (e.g., leveraging Cloud Tier, automatically pushed out from Data Domain for long term retention), for various reasons not all customers can do this. So sometimes you’ll end up in situations where backups to Boost devices are subsequently being cloned to tape, or out to object storage via CloudBoost, or something else again.

If you’re cloning from Data Domain to something other than Data Domain though, you’re going to be rehydrating the data. And at that point, you want to be paying attention to where your Data Domain Boost devices exist.

Case in point: let’s say you have a virtualised NetWorker server, and you’re cloning your long term retention backups out to tape. That means you’re going to have a physical storage node somewhere. However, you might have created the Data Domain Boost devices on the NetWorker server itself. That would mean, in a cloning operation, that the NetWorker server (virtualised) will handle the receipt of the rehydrated data stream before sending it to the storage node.

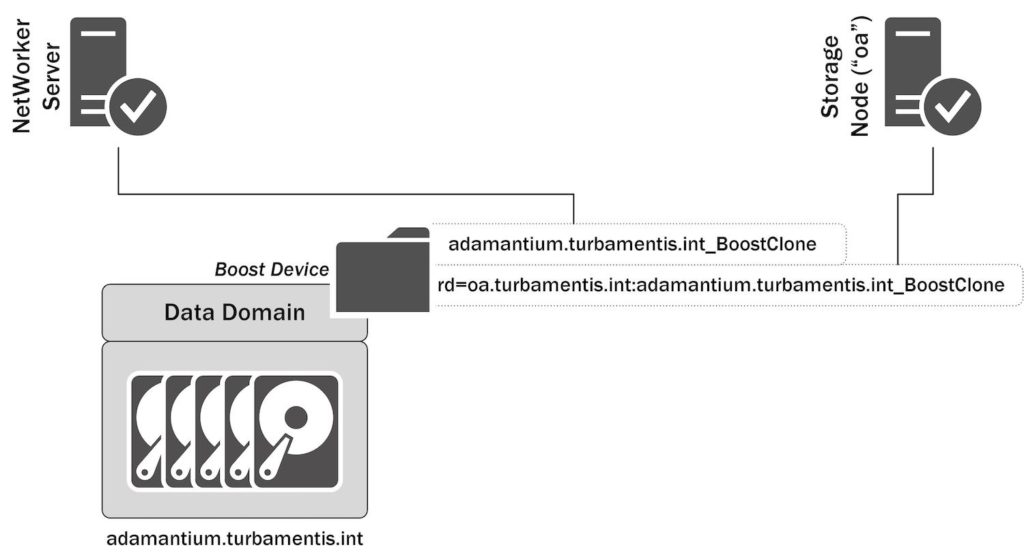

But there’s a better way. With Boost devices you can quite literally have your cake and eat it, too. Here’s what it effectively looks like:

In this scenario, I’ve got a Data Domain (“adamantium”) whose Boost device was originally created on the NetWorker server. Now though, I want to be able to access that Boost device from my storage node, “oa”. It’s more than possible: you can actually access the same Boost device from multiple storage nodes.

The advantage of such a configuration is simple: my NetWorker server is virtual, but I’ve got a physical storage node here. If I want to clone the data residing on this device to something else, dual-mounting the device to the storage node lets me offload that full data copy onto another server in my environment, rather than adding that load to the backup server.

This doesn’t even have to be about moving data to another type of device, by the way. Think of a scenario where you’ve got a DDVE or a small Data Domain to service a DMZ environment. You don’t want the hosts in the DMZ to have any way of accessing a Data Domain servicing the main environment, but you still want to clone the backups done in the DMZ to a primary Data Domain. So that’s just as easy to accommodate: dual-mount the Boost device(s) on the DMZ Data Domain to a storage node in the DMZ, and to a storage node in the main environment. The storage node in the main environment would then also mount Boost devices from one of your main Data Domains, and handle the cloning.

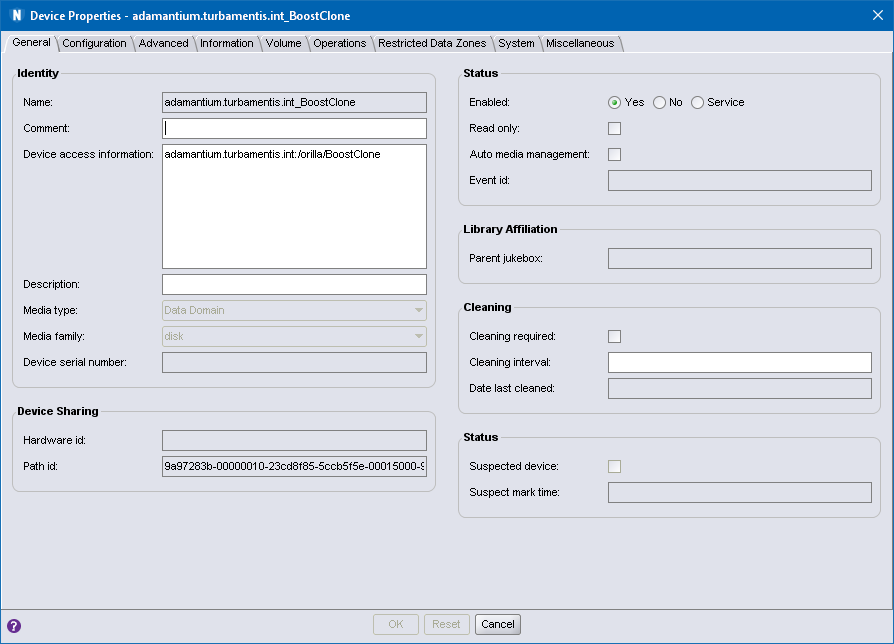

To see how the setup of this works in my environment, I created the above configuration. So to start, here was the original Data Domain device:

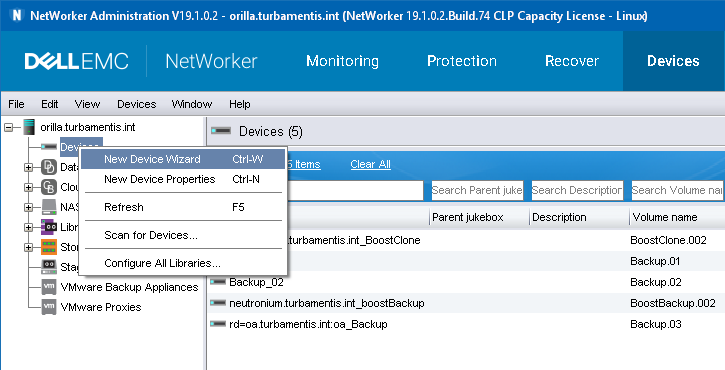

In this instance, because there’s no ‘rd=’ at the start of the device name, the device is clearly owned by the storage node processes running on the NetWorker server itself. So, how do we create a device on the storage node? It’s pretty straightforward. Start by launching the new device wizard:

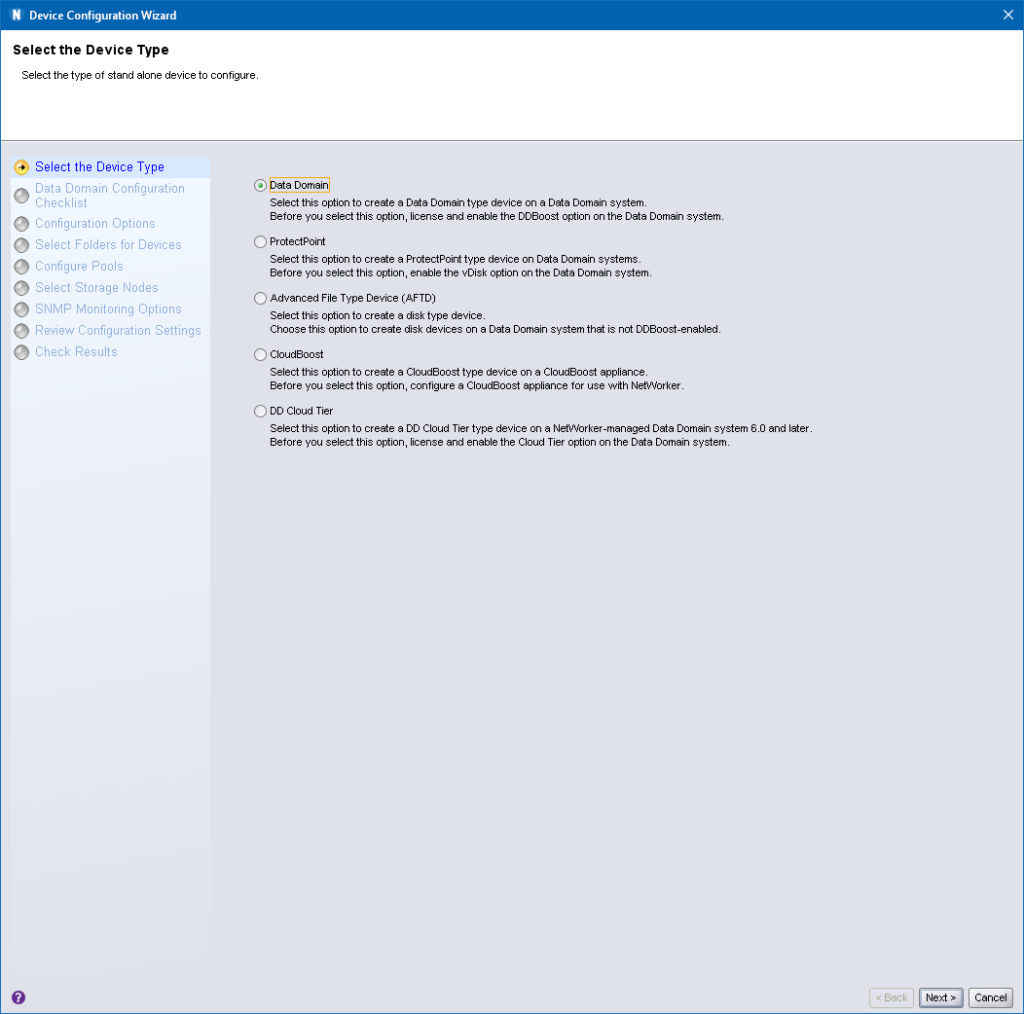

Within the new device wizard, you’ll obviously want to choose that you’re going to create a Data Domain device before clicking Next:

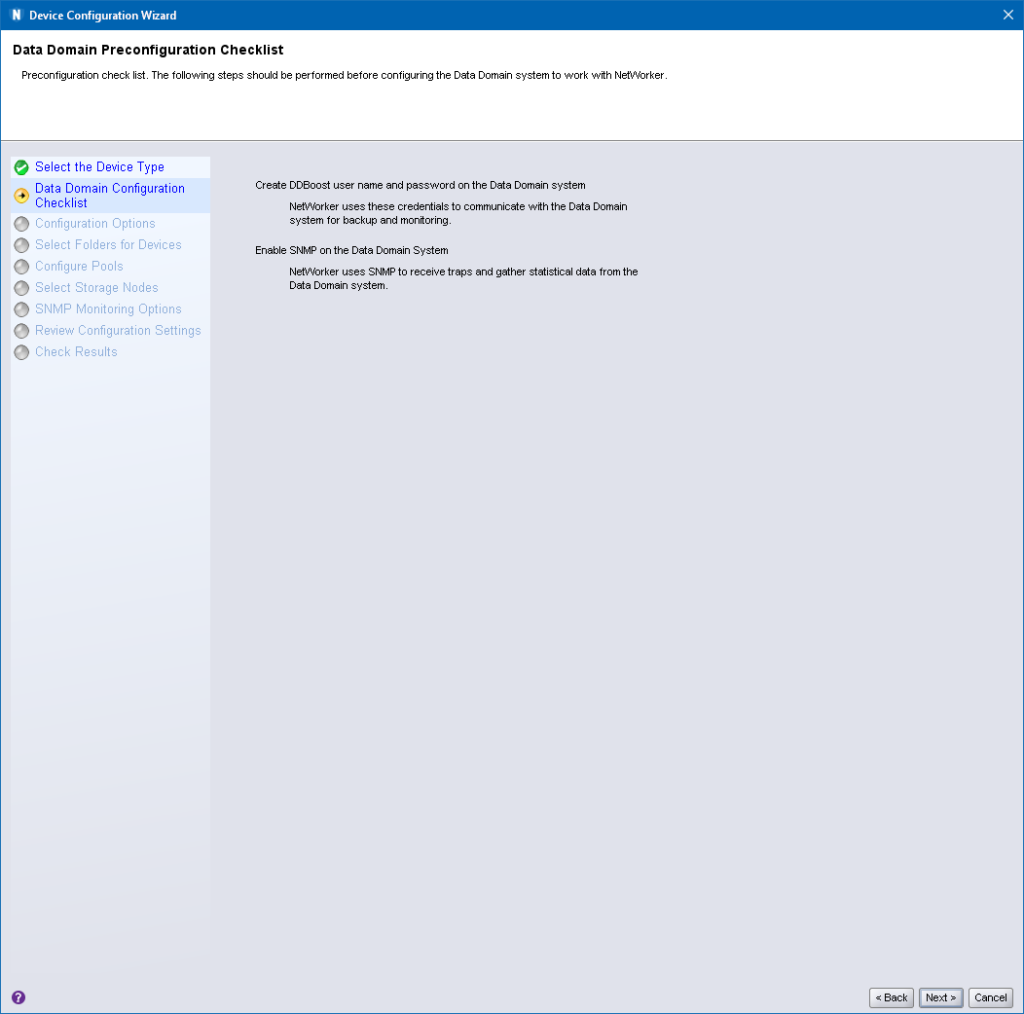

You’ll get the standard checklist warning here about setting up the device and SNMP. This is also a good time to log onto the Data Domain (if you don’t have these details stored) and confirm the Boost username and password used by the NetWorker server.

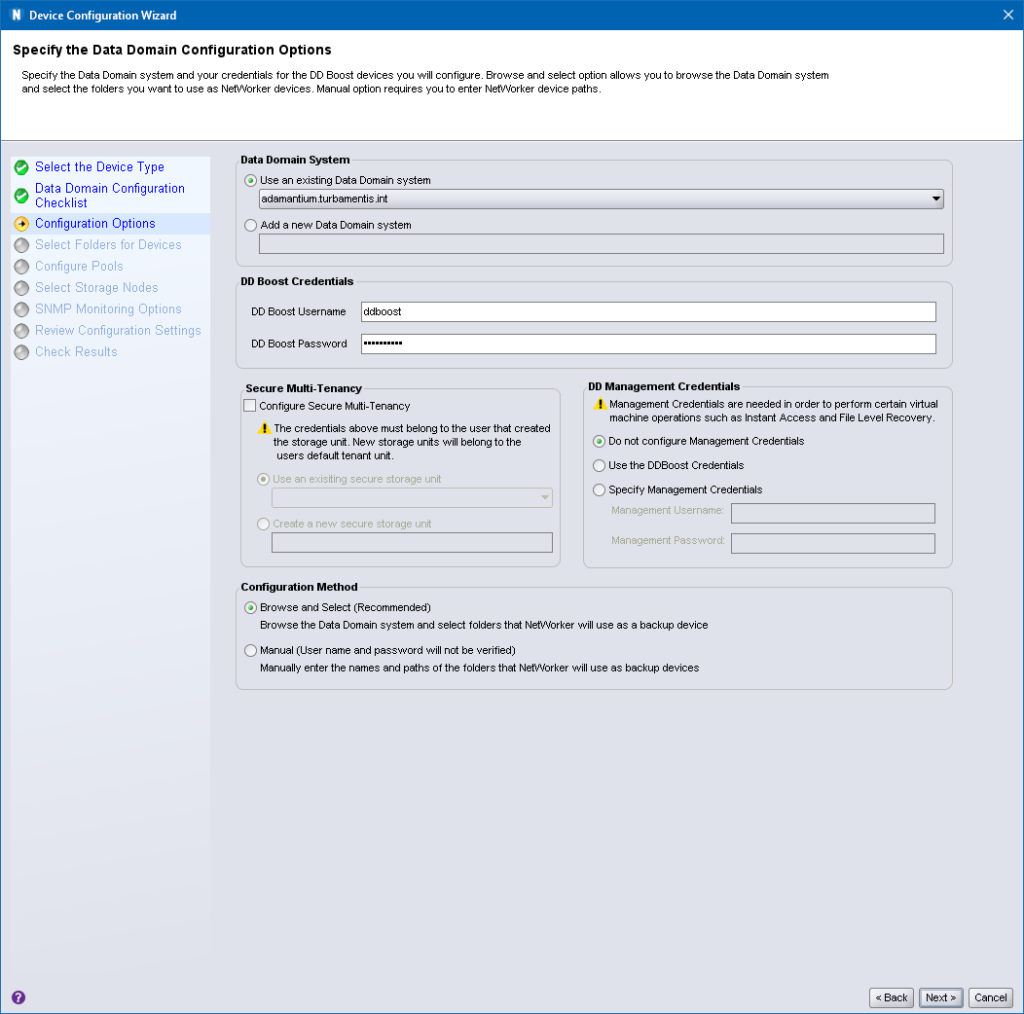

The next step is that you’ll be prompted to choose your Data Domain (or create a new one – but in this case, we’re creating a secondary mount for an existing Data Domain), and enter the system access credentials:

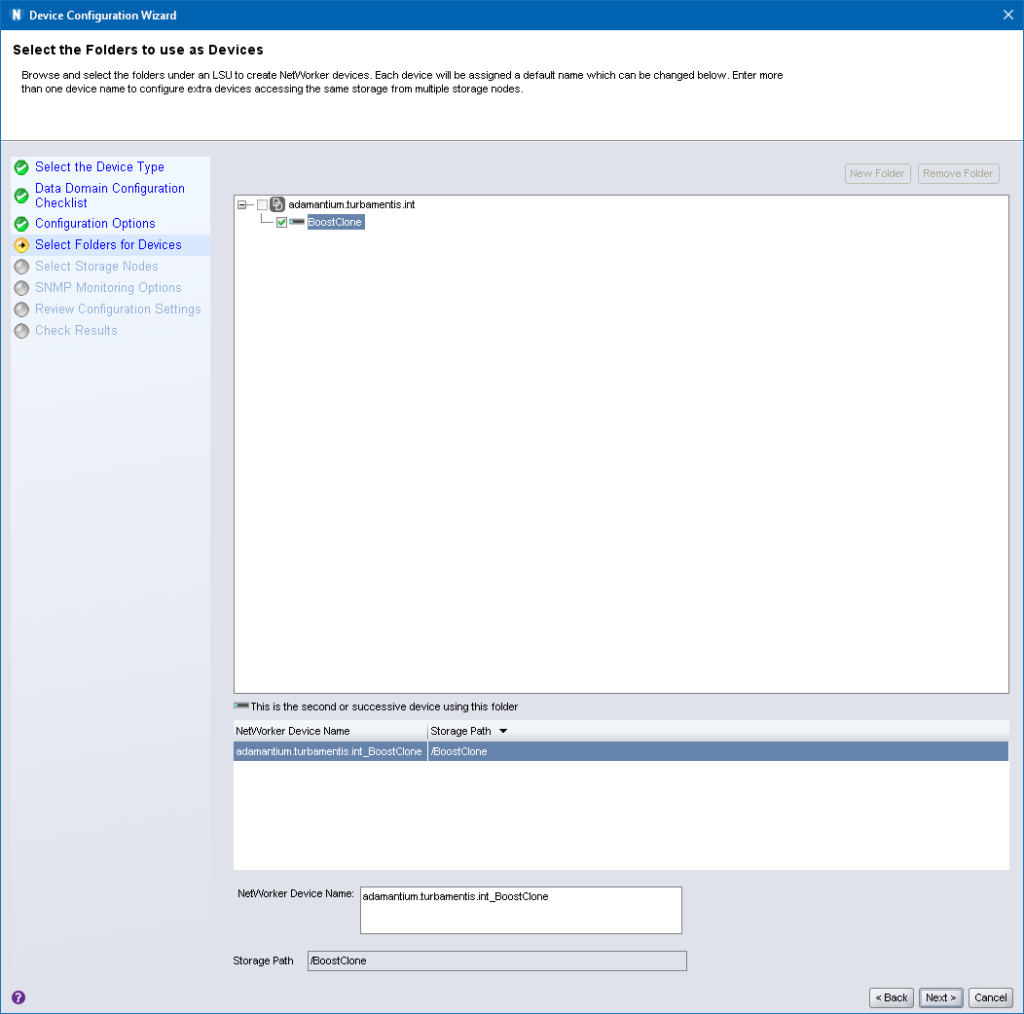

It’s on the “Select Folders for Devices” tab that we do things differently. Don’t create a new folder. Instead, select the folder that already resides on the Data Domain (if there are multiple, select the one associated with the specific Data Domain device you want to dual-mount).

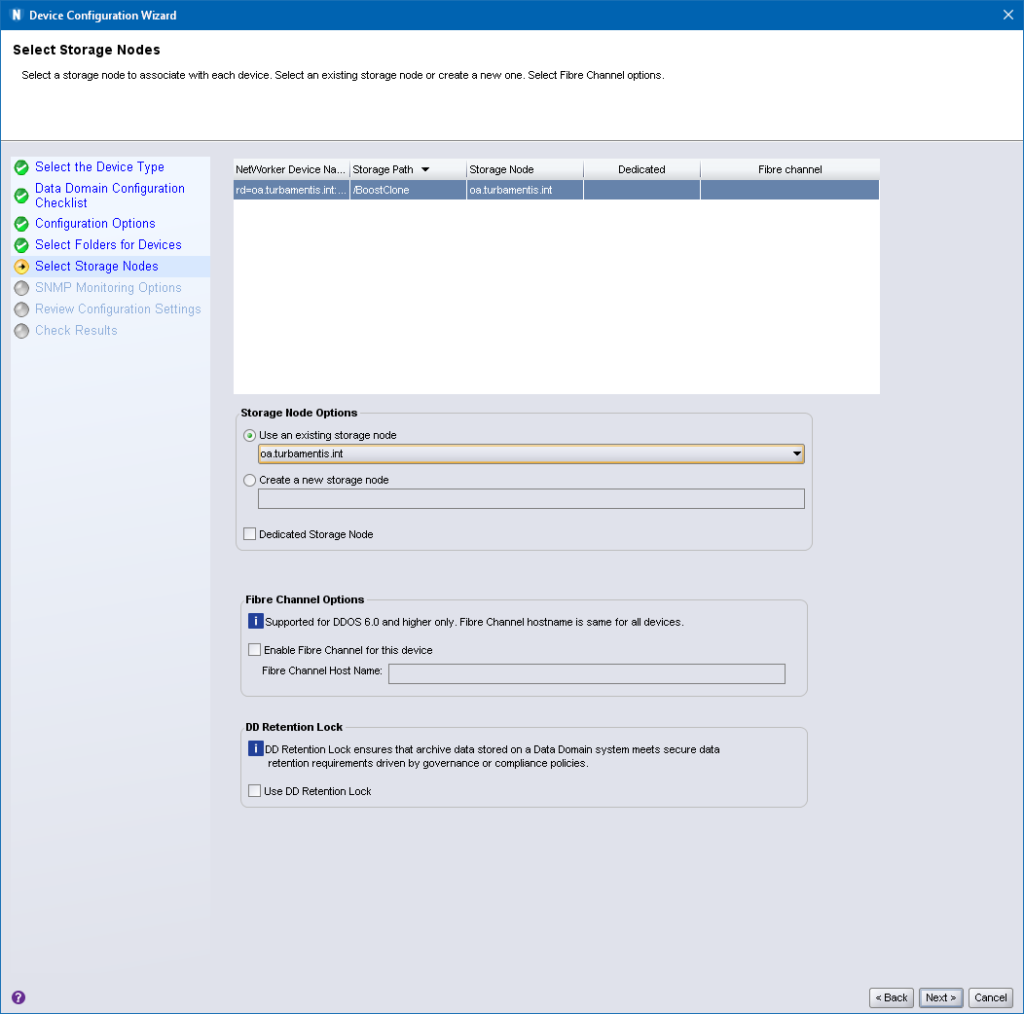

On the next screen, you’ll select the storage node that will access the device. In this case, I changed it from the NetWorker server to the storage node “oa”:

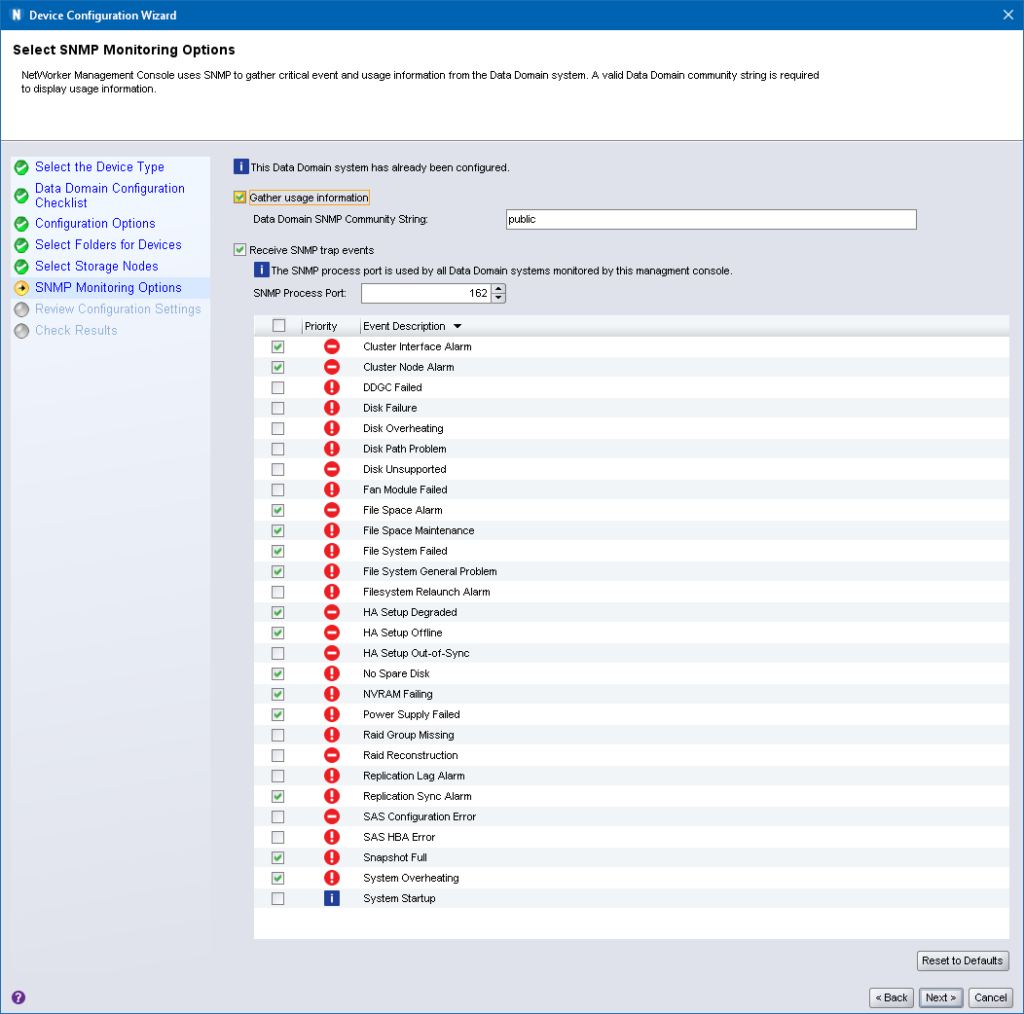

Next, you’ll be prompted to confirm SNMP settings. Because the Data Domain system already exists in your NetWorker environment, NetWorker should pre-fill all these details for you:

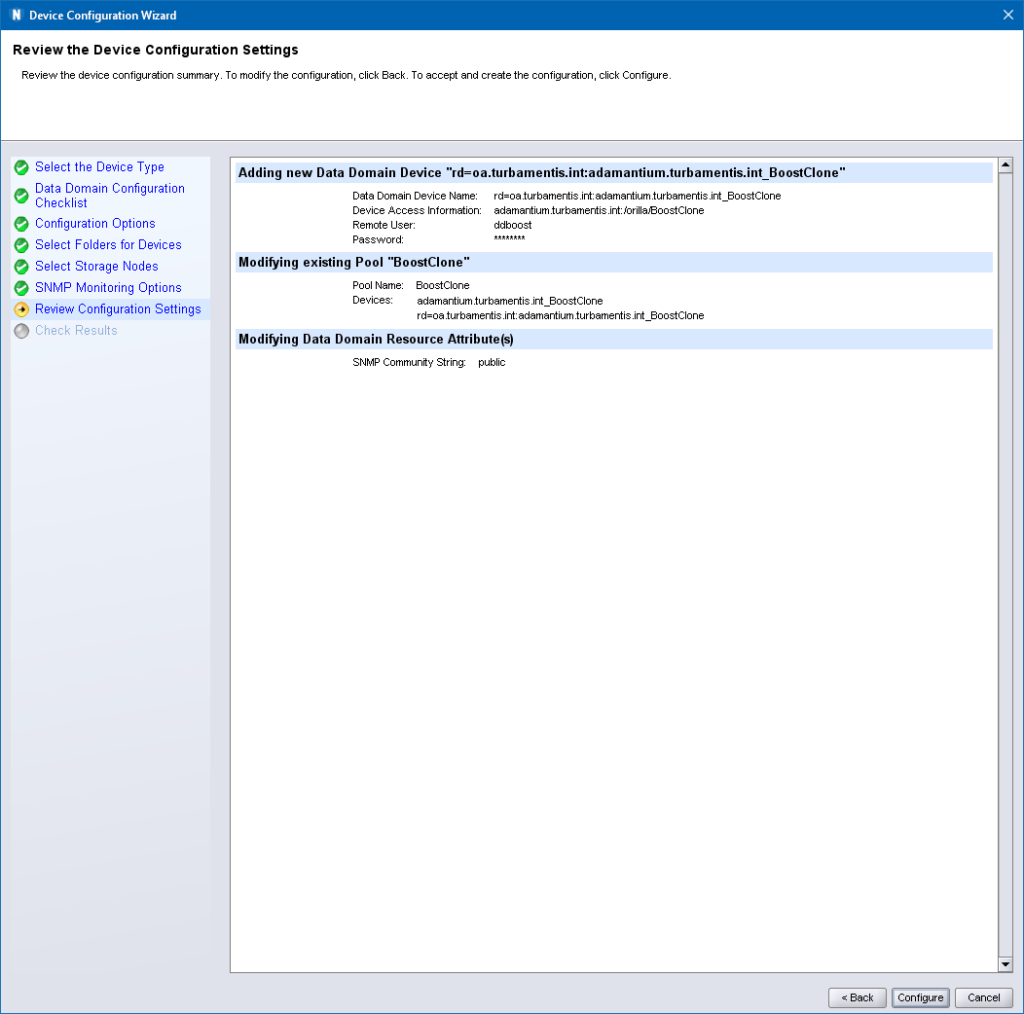

Next to last, you’ll be prompted to confirm your settings:

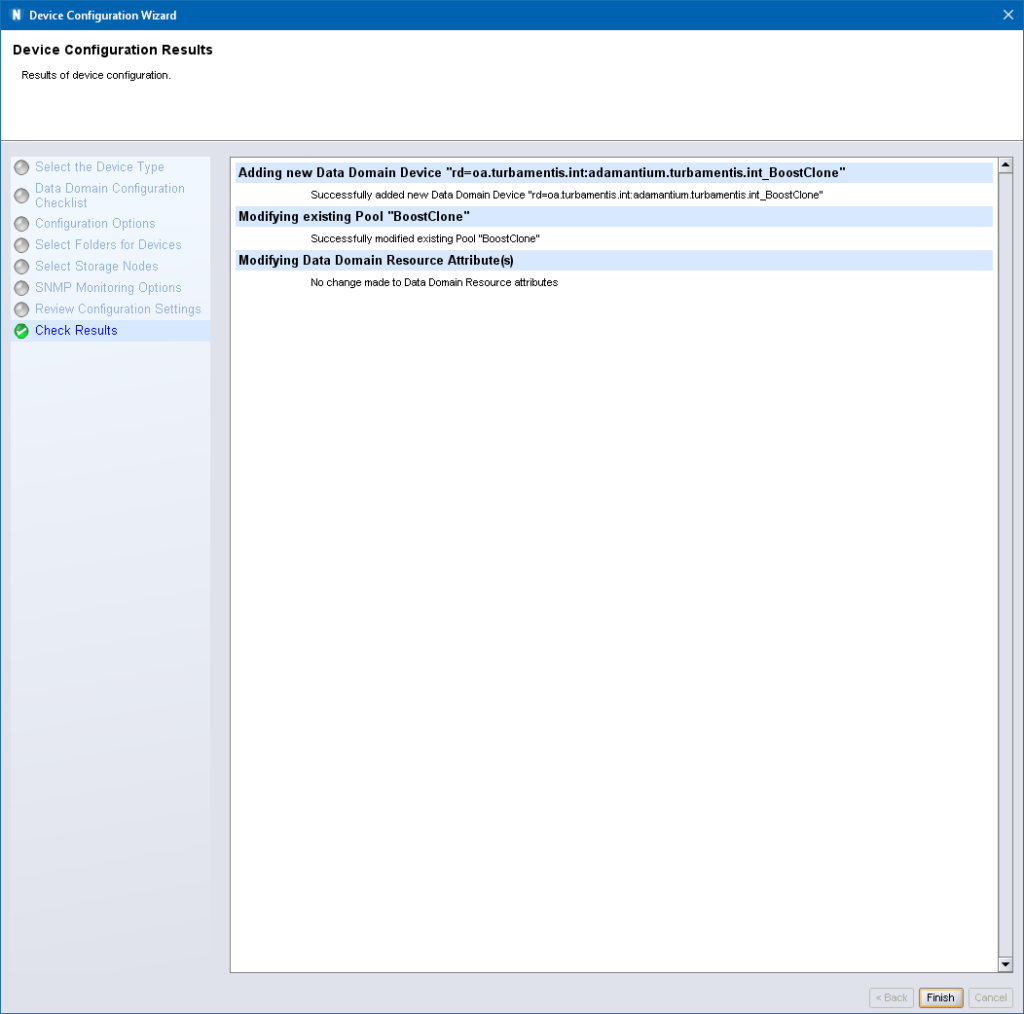

Finally, after clicking Configure, so long as the storage node and Data Domain can talk to each other, you should get a successful result:

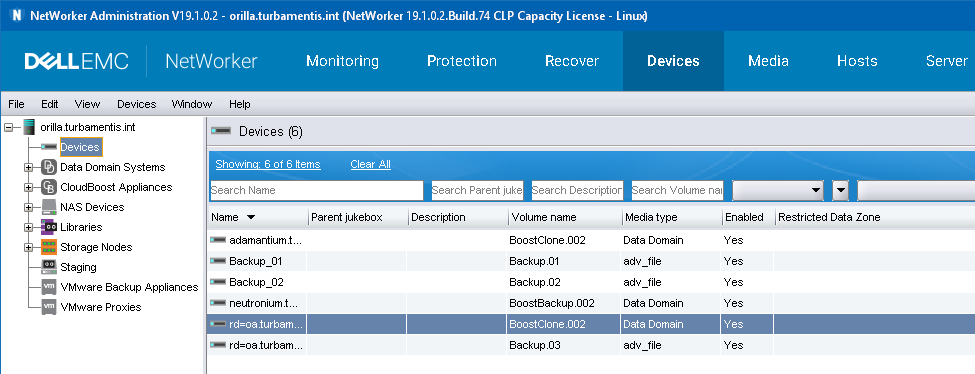

Once you click Finish to dismiss the wizard, you’ll need to mount the volume on that device (remember, mount, not label – it’s already labelled and has content), and after that, you’ll have the Boost device on your NetWorker server and on a storage node.

And there you have it: the same Boost folder/device content accessible from two different storage nodes/systems within the NetWorker environment. This is really useful to do for a variety of purposes, including:

- Limiting the number of devices you want to create/manage in NetWorker

- Controlling where data is rehydrated from (recoveries and clones)

- Enabling more secure access to Boost devices when supporting a mix of different security zones within your network
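With the same folder mounted in two places, the quickest way to tell the devices apart is the ‘rd=’ prefix mentioned earlier: a device named `rd=host:device` belongs to the storage node on that host, and one without the prefix belongs to the NetWorker server itself. Here’s a minimal Python sketch of that naming rule. The device names and the server name `nsrserver` are made up for illustration, and this only parses names; it doesn’t talk to NetWorker at all.

```python
def owning_storage_node(device_name: str, server: str) -> str:
    """Return the host whose storage node processes own the device.

    A name of the form 'rd=host:device' is owned by 'host'; any other
    name is treated as local to the NetWorker server.
    """
    if device_name.startswith("rd="):
        host, sep, _ = device_name[3:].partition(":")
        if not sep or not host:
            raise ValueError(f"malformed remote device name: {device_name!r}")
        return host
    return server


# Hypothetical device names: one local to the server, one dual-mounted on "oa".
devices = ["adamantium_boost", "rd=oa:adamantium_boost_oa"]
for name in devices:
    print(name, "->", owning_storage_node(name, server="nsrserver"))
```

Both names would point at the same Boost folder on the Data Domain; only the owning host differs.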

For scalability (depending on the NetWorker version, a ddboost device can handle either 60 or, nowadays, 100 sessions), you can also “share” the same volume multiple times on the same NetWorker storage node. So when you really need hundreds of sessions towards the same pool, you don’t need multiple ddboost volumes: the volume can be shared on a single storage node in exactly the same way. Just name each device differently (e.g. ddm01_nmm_1, ddm01_nmm_2, ddm01_nmm_3, etc. for a pool containing NMM backups) but select the same ddboost device folder when creating each new device, so the device access information is the same for all of them.
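To put rough numbers on that, here’s a small Python sketch that works out how many devices you’d need to point at the same folder for a target session count, and generates names in the style above. The 60 and 100 session limits are the figures quoted in this comment, not something I’ve verified per version, and the 250-session target is just an example.

```python
def devices_needed(target_sessions: int, sessions_per_device: int) -> int:
    """Smallest number of devices whose combined session limit covers the target."""
    if target_sessions < 0 or sessions_per_device <= 0:
        raise ValueError("target must be >= 0 and per-device limit must be > 0")
    # Integer ceiling division: round up without going through floats.
    return (target_sessions + sessions_per_device - 1) // sessions_per_device


def device_names(prefix: str, count: int) -> list[str]:
    """Generate names like ddm01_nmm_1, ddm01_nmm_2, ... for devices sharing one folder."""
    return [f"{prefix}_{i}" for i in range(1, count + 1)]


# Example target: 250 concurrent sessions into one pool.
for limit in (60, 100):
    count = devices_needed(250, limit)
    print(f"{limit} sessions/device -> {count} devices:", device_names("ddm01_nmm", count))
```

Every device in the generated list would be created against the same ddboost folder; only the device name changes.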