Regular blog visitors will know that I think legacy applications and operating systems have a negative impact on the datacentre. I usually think of legacy systems as those that the originating vendor has ceased to support. Examples of legacy systems include:

- Oracle 7

- Windows NT 4

- Windows 2000

- AIX 5.3

- Solaris 2.6

(For what it’s worth, I classify systems like Banyan Vines, SCO Unix and Netware slightly differently – I think of those more as zombie systems since they’ve effectively outlived their vendors when you still see them around. They still present a challenge, but it’s a challenge that comes up less frequently since there’s more acceptance of their state.)

There’s a lot of thinking that interoperability between the various cloud types and private infrastructure is slowing modernisation, and I get that logic, but my personal feeling is that legacy systems also play a part. They form an anchor within the datacenter that can hold back other services and functions.

From my perspective, there’s a particular order in which you’d approach dealing with legacy systems, viz.:

- Refactor: Legacy systems represent a risk to the business. They should be eliminated by refactoring or migrating the service to something supported. This does get done a lot of the time, but there are always those lingering systems that no-one is prepared to cough up the budget for.

- Virtualise: This has mixed benefits. You eliminate having to deal with maintenance-by-eBay challenges for the underlying hardware, but virtualisation platforms also eventually close the door on support for legacy systems.

- Hand-holding: If you can’t refactor/migrate and you can’t virtualise, you’ll be left having to hand-hold the system. That means trying to maintain some old spare parts (see maintenance-by-eBay), and in some extreme cases, even keeping staff around just to look after that system. (Though that’s more likely to be for zombie systems.)

When a system can be refactored, presumably you’ll end up with something that you can perform data protection against using modern tools. When a system can be virtualised, you should be able to at least do image-based backup and recovery for it.

Hand-holding creates the bigger challenge: just how are you going to back it up, without holding back your entire data protection environment?

In some cases, you’ll end up maintaining a legacy backup platform just to provide that service. In others, you might use ‘simple’ in-built backup mechanisms (e.g., tar/gz on Unix platforms, NT Backup on older Windows platforms), writing to network mapped storage. However, sometimes these systems can be so old that even getting them to talk to network mapped storage can be a pain! (NT4, for instance, only speaks the original SMB1/CIFS dialect, which modern file servers typically disable in favour of SMB2 and SMB3.)

So what if you had to bite the bullet and get something ancient to back up into your current environment?

I got to thinking about this recently and thought … can I get Windows NT 4 to back up to a current release of NetWorker? Now, this was before NetWorker 19.2 came out, so I did my testing work against NetWorker 19.1.

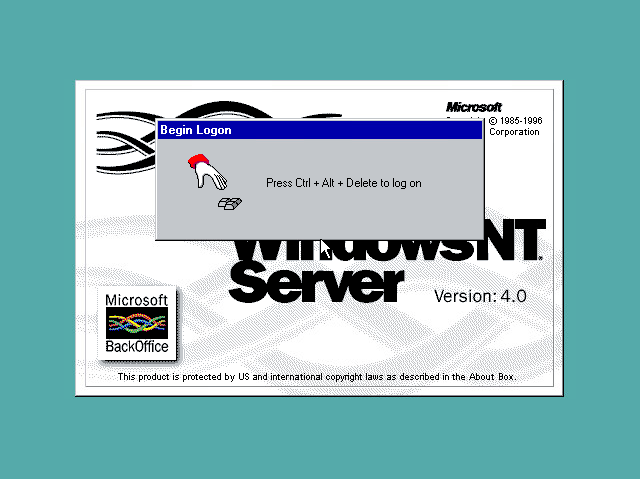

NT4 was first released in 1996 and received its last service pack (SP6a) in 1999, with a post-SP6a security rollup following in 2001. So it’s well and truly elderly in terms of legacy platforms that you might encounter in a datacenter.

Now, the last version of the NetWorker client I could find that I could get to successfully install in NT was NetWorker 7.2.2, which was released in 2006.

Side Note

I found it funny that for years after Windows 2000, then 2003 were released, I’d find myself lamenting about how Microsoft moved all the controls around and changed interface elements so much between releases. Going back to NT4 was a rude awakening. And let’s not get started about every folder opening up a new explorer window! You definitely get used to features, such as the ability to unzip folders and easily connect to file shares. In some ways, NT4 was like stumbling back onto an 8-bit computing environment.

So, I first suspected I’d have some challenges against me when I tried to use the NMC Wizard to create a client for the NT4 system … it sat there on the initial contact attempt to the host spinning and waiting … and eventually I looked over at the NT4 console and realised the NetWorker client services had crashed!

I did feel a little like Scotty in Star Trek IV at this point, but I was determined to press on.

I configured the client manually – and of course, a client that old isn’t going to have the Data Domain Boost library embedded in it, or support client direct. So I configured it with client-direct turned off in its properties, and configured it to use a storage node – first writing to AFTD, and then more ambitiously to a Boost device mounted on the storage node. (Both worked, once I could get the backup to run.)

After getting the client configured, I started a backup … which promptly failed. The reason, of course, is that a modern NetWorker server sends a variety of arguments to the ‘save’ command that require a more modern client to understand them. (A minimum of NetWorker 7.4.)

Would I be defeated? I was taught from an early stage of my career that being able to write scripts is an essential IT skill, and I think my original sysadmin team would have all thrown their shoes at me in disgust if I’d given up this early. There had to be some way of getting it all to work.

I verified quickly enough that I could do a manual save from the command line on the client – getting the backup to go to the right pool, with a specified command line. The NetWorker 7.2.2 client’s winworkr.exe GUI wasn’t going to cut it for recovery purposes — it just didn’t want to work with NT4. However, I could recover using the recover.exe command-line option.

So, backup and recovery worked, but being able to do a manual backup alone is boring, right? Equally, I could have a simple DOS batch file that would replace the save command (e.g., “savent4.bat”) which just called the appropriate save arguments – but, this would hard-code the pool and retention time, etc., into the save command. Worse, it would encode the save-set name as well!

At this point, I really rolled up my sleeves, and my husband endured a day of me cursing as I wrestled mightily with all the failures and limitations that were NT4 DOS/Batch commands. How anyone managed to do anything with NT4 DOS/Batch commands was beyond me. Essentially, I had to be able to parse the save arguments being received by the NetWorker client and pick out the ones I was interested in, such as:

- The name of the saveset

- The pool

- The retention time

- The level, and if the level was non-full, the anchor save time

Suffice it to say that NT4’s DOS/Batch command scripting, in terms of parsing command-line arguments, did not endear itself to me. After a solid day of effort, I was ready to give up and declare myself defeated.

It was so mind-numbingly annoying, I thought to myself the next morning in the shower … the argument parsing that I needed to do was so simple that it’d take me less than 10 minutes to write a Perl script to do it.

Light-bulb moment! A bit of quick searching, and yes, Strawberry Perl still has installers that can run on NT4!

Trying to call a Perl script directly as the backup command didn’t seem like a good place to start, so I wrote a simple batch script, “savepmdg.bat” and made that the backup command for the NT4 client. That script looked like this:

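    @echo off
    setlocal
    REM Make Perl, the NetWorker binaries and the NT system directories visible
    PATH=C:\TEMP\strawberry-perl-5.8.8.2\perl\bin;C:\Program Files\Legato\nsr\bin;C:\WINNT;C:\WINNT\SYSTEM32
    set OUTPATH="C:\Program Files\Legato\nsr\bin"
    REM Keep a copy of the arguments the server sent, for debugging
    echo %* 1>%OUTPATH%\args.txt
    REM Hand everything over to the Perl script
    perl "C:\Program Files\Legato\nsr\bin\save.pl" %*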

OK, so the trick then would be the save.pl script. Normally I’d work with one of Perl’s Getopt modules, but for something this simple and predictable, I didn’t need to go that far – I could just do a read of ARGV instead:

    my @saveArgs = @ARGV;

    # Defaults, used when the server does not send the matching option
    my $server = "localhost";
    my $pool = "Default";
    my $level = "full";
    my $browse = "tomorrow";
    my $retention = "tomorrow";
    my $masquerade = "";
    my $timestamp = "";

    # Process this way to avoid having to hard-code all possible options
    my $argCount = @ARGV + 0;
    for (my $i = 0; $i < $argCount; $i++) {
        if ($ARGV[$i] eq "-s") {
            $server = $ARGV[$i+1];
        }
        if ($ARGV[$i] eq "-b") {
            $pool = $ARGV[$i+1];
            # Quote pool names that contain whitespace
            if ($pool =~ /\s/) {
                $pool = "\"" . $pool . "\"";
            }
        }
        if ($ARGV[$i] eq "-t") {
            $timestamp = " -t " . $ARGV[$i+1] . " ";
        }
        if ($ARGV[$i] eq "-l") {
            $level = $ARGV[$i+1];
        }
        if ($ARGV[$i] eq "-w") {
            $browse = $ARGV[$i+1];
        }
        if ($ARGV[$i] eq "-y") {
            $retention = $ARGV[$i+1];
        }
        if ($ARGV[$i] eq "-m") {
            $masquerade = " -m " . $ARGV[$i+1] . " ";
        }
    }

    # The saveset name is the last argument on the command line
    my $savesetName = $saveArgs[-1];

    my $command = "save.exe -LL -q -s $server -l $level -b $pool -w \"$browse\" -y \"$retention\" $masquerade $timestamp -N $savesetName $savesetName";

    # system() returns 0 on success, so compare explicitly before exiting with an error
    system($command) == 0 or exit(1);
    exit(0);
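
For comparison, here is a minimal sketch of how the same argument handling might look using Getopt::Long, a core Perl module, instead of a manual walk through ARGV. It is an illustration under assumptions rather than the script that was actually run: `build_save_args` is a hypothetical helper name, the sample server, group and pool values are made up, and it has not been tested on NT4 or against the Perl build mentioned above. It returns the arguments as a list and only prints the resulting command, so nothing is executed.

```perl
use strict;
use warnings;
use Getopt::Long ();

# Hypothetical helper (not part of the original script): turn the arguments
# sent by the server into an argument list for the old save.exe.
sub build_save_args {
    my @incoming = @_;

    # Same defaults as the hand-rolled loop
    my %opt = (
        s => 'localhost',
        b => 'Default',
        l => 'full',
        w => 'tomorrow',
        y => 'tomorrow',
    );

    # As in the original script, the saveset is taken to be the last argument
    my $saveset = $incoming[-1];

    # no_ignore_case keeps -l distinct from -L; pass_through leaves any
    # option not listed below untouched instead of reporting an error
    Getopt::Long::Configure(qw(no_ignore_case pass_through));

    local @ARGV = @incoming;    # GetOptions() reads from @ARGV
    Getopt::Long::GetOptions(\%opt,
        's=s', 'b=s', 'l=s', 'w=s', 'y=s', 'm=s', 't=s');

    my @args = ('-LL', '-q',
                '-s', $opt{s}, '-l', $opt{l}, '-b', $opt{b},
                '-w', $opt{w}, '-y', $opt{y});
    push @args, '-m', $opt{m} if defined $opt{m};
    push @args, '-t', $opt{t} if defined $opt{t};
    push @args, '-N', $saveset, $saveset;
    return @args;
}

# Dry run with made-up values: print the command instead of running it
my @demo = build_save_args(
    '-s', 'nsrserver', '-g', 'Legacy', '-LL', '-l', 'incr',
    '-b', 'NT4 Pool', '-t', '1577836800', '-q', 'C:\\',
);
print join(' ', 'save.exe', @demo), "\n";
```

Returning a list rather than one long string keeps a pool name with spaces as a single element, so the quoting decision can be made in one place when the command is finally assembled.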

Once the script was in place, it was time for a good old-fashioned huzzah! (Although quietly, it was very early in the morning.)

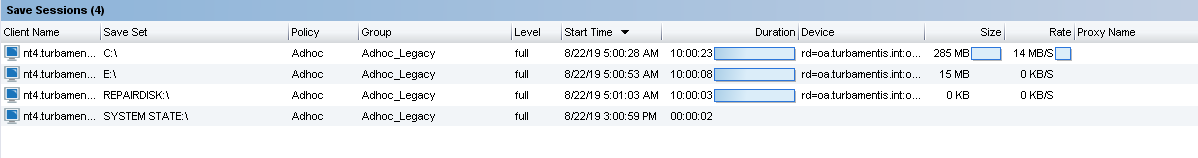

With this in place, I was able to run backups from NetWorker 19.1 of a NetWorker 7.2.2 client on Windows NT4, with the NetWorker server still able to assign pool, level, retention time, etc., automatically. In fact, here’s what it looked like – the little Perl script and batch file having been called, with the savesets being correctly enumerated:

There are a few lessons to take from this:

- Don’t be nostalgic for NT4.

- NT batch file ‘programming’. Ugh.

- You’re still better off, wherever possible, refactoring/migrating your legacy systems and applications.

- Automation is still king: If you’re prepared to put in a little effort, you can get the results you need out of a product built as a framework.

So what’s the advantage of this over, say, keeping an old NetWorker 7.5 or 7.6 server installed somewhere? Well, that server is obviously out of support – you’ll probably be using rusty old hardware that’s also under a maintenance-by-eBay support contract, too. At least, in a configuration like this, you’re running up-to-date server code, on a modern system (probably even, like in my case, a virtualised system) so that you’ve got a good level of control over the environment. Oh, and that NT4 system isn’t acting as an anchor, dragging back transformation within your datacenter.

All in all, it was a nostalgic and fun experiment.

I’ve always wanted to try this but have never had the time. The applications may be limited but it’s great to know that it’s possible. Thanks for doing this Preston.