Where we’re at

A few weeks ago I posted a (lengthy) Perl script (and explanation) for running deduplication analysis against NetWorker savesets written to Data Domain devices.

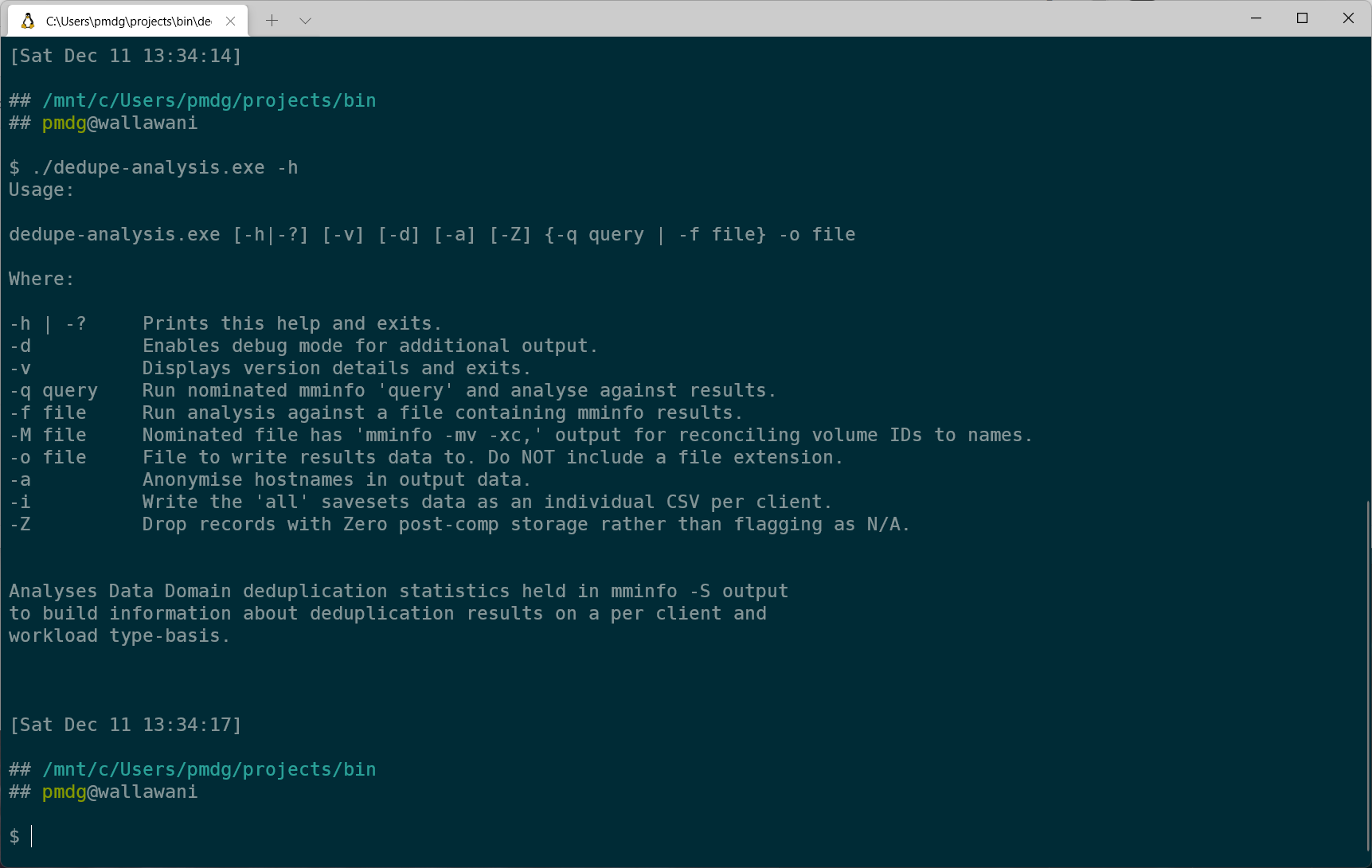

Since then I’ve been tinkering with the script, made some modifications, and made it easier for you to use, even if you don’t have a Perl environment running on your machine.

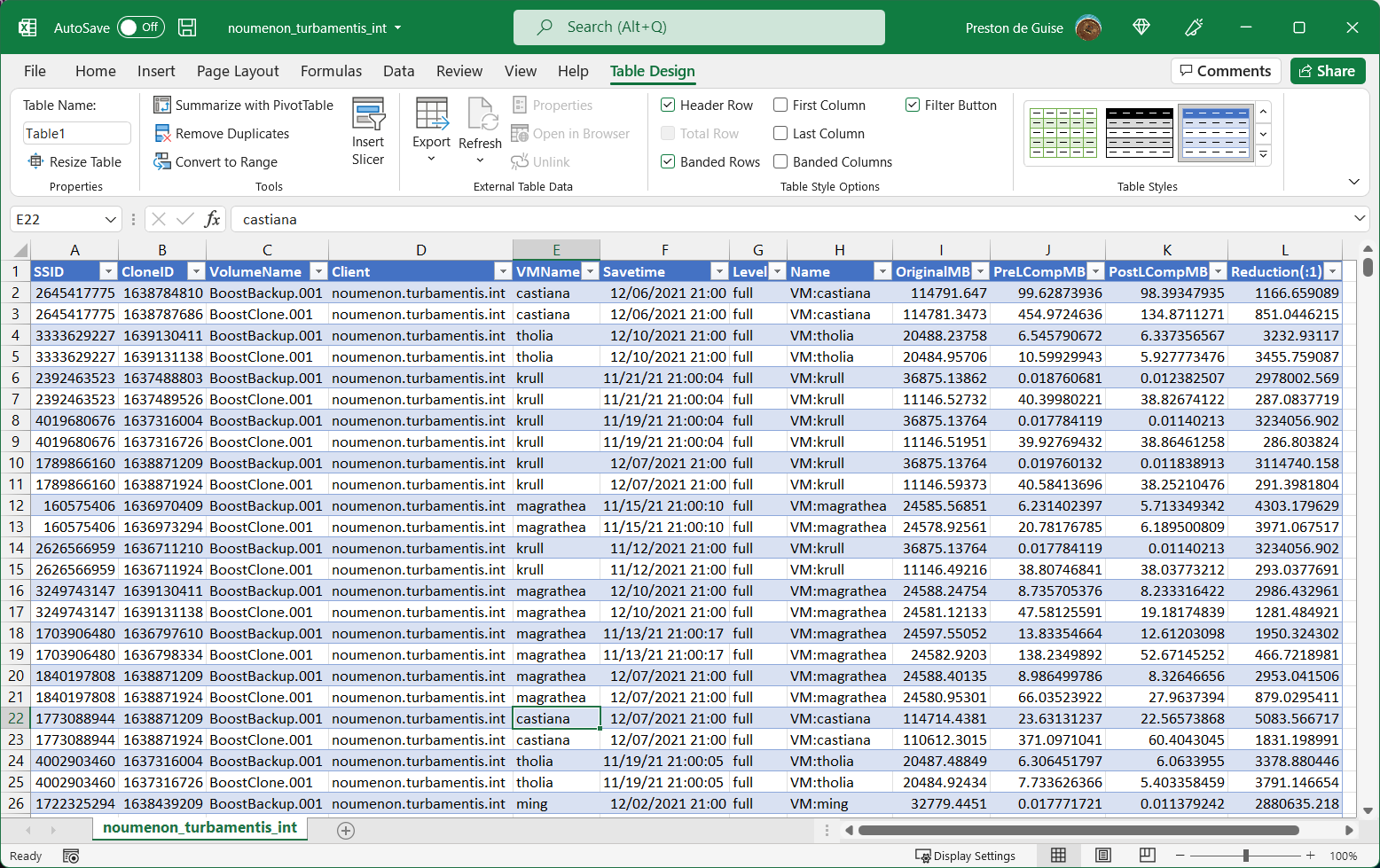

My original script irked me because there was no mapping between volume IDs and volume names, which I’ve addressed in this version. If you run it on the NetWorker server, it will automatically do the mapping for you. If you’re running it against gathered data, you can also grab the output of “mminfo -mv -xc,” from the NetWorker server and point the utility at that. With this gathered, you’ll get volume names associated with all the statistics.

Finally, as you may have spotted from the command line usage in the first graphic and intro, the utility is now compiled, for both Windows and Linux. So you can just download it and run it against your environment. And remember: there’s no need to run it on the NetWorker server. You can run whatever mminfo query you want, so long as you generate the output with the -S option, then transfer that output to another machine and run the utility there.

If you don’t want to run it on the NetWorker server, here’s what you’d do:

- On the NetWorker server, run:

  - An mminfo query generating -S output (e.g., “mminfo -avot -S”), writing that to a file in a location with plenty of free space.

  - An mminfo query generating the volume list: “mminfo -mv -xc,”, writing that to a file.

- Transfer the two files across to your workstation.

- Run the appropriate invocation of dedupe-analysis. For example:

  - Let’s say your mminfo -S output is saved as: mminfo_S.txt

  - You have mminfo -mv -xc, output saved as: mminfo_mv.txt

  - You want per-host details as well as the rollups.

  - You want the files/directories generated to be prefixed with MyNSRServer

  - You’d run: dedupe-analysis -f mminfo_S.txt -M mminfo_mv.txt -i -o MyNSRServer
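If you want to script the last step, here is a minimal Python sketch that assembles the same command line as the example above. The function name `build_dedupe_command` is made up for illustration, and the sketch assumes the dedupe-analysis binary is on your PATH; only the -f, -M, -i and -o options shown above are used.

```python
import shlex


def build_dedupe_command(saveset_file, volume_file, prefix,
                         per_host=True, binary="dedupe-analysis"):
    """Assemble the argument list for the example invocation.

    `binary` is an assumption: adjust it to wherever you unpacked the tool.
    """
    args = [binary, "-f", str(saveset_file), "-M", str(volume_file)]
    if per_host:
        # -i: per-host details as well as the rollups
        args.append("-i")
    # -o: prefix for the generated files/directories
    args += ["-o", prefix]
    return args


cmd = build_dedupe_command("mminfo_S.txt", "mminfo_mv.txt", "MyNSRServer")
print(shlex.join(cmd))
```

The resulting list can be handed to `subprocess.run(cmd, check=True)` once both mminfo output files are in the current directory.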

Downloads

You can download an executable version of the deduplication analysis tool for your platform below. I’ve included MD5 checksums for both the zip files and the binaries they contain.

- Linux:

  - Zip file MD5: 5ee89e693d5060b2bb4567dec1d05cd4

  - Binary file MD5: b7512b9032bcd073309c8682afc1f230

  - Zip Download

- Windows:

  - Zip file MD5: 00d0d20b9516f38541ebcda0455ae1ff

  - Binary file MD5: 9ea914d643806be9a3e7cf8c49df2c34

  - Zip Download
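If you don’t have an md5sum utility handy, a short Python sketch like the one below can check a download against the checksums listed above. The helper names are my own for this example, and the file path is whatever you saved the zip or binary as.

```python
import hashlib
import sys


def md5_of_file(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path, published_md5):
    """Compare a file's MD5 with a published checksum (case-insensitive)."""
    return md5_of_file(path) == published_md5.strip().lower()


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python check_md5.py <downloaded file> <published MD5>
    ok = matches_published(sys.argv[1], sys.argv[2])
    print("checksum OK" if ok else "checksum MISMATCH")
```

Run it once against the zip file and once against the extracted binary, passing the matching MD5 from the list each time.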

Happy deduping!

Hi, I don’t get any results from the script :/ It doesn’t seem to identify the sizes in the output:

Release v1.2

Networker 19.4.0.2 Build 127

DEBUG: srv-vmware-vc.xxxx.local,VM:DRU-007 (ClID 1639254031)

DEBUG: Original Size: 4364113.59 MB

DEBUG: Pre-LComp: 0.00 MB

DEBUG: Post-LComp: 0.00 MB

Debug: Reduction: N/A:1

DEBUG:

DEBUG: srv-vmware-vc.xxxxx.local,VM:APP-1364157609 (ClID 1639515952)

DEBUG: Original Size: 9500535.95 MB

DEBUG: Pre-LComp: 0.00 MB

DEBUG: Post-LComp: 0.00 MB

Debug: Reduction: N/A:1

DEBUG:

DEBUG: srv-vmware-vc.xxxx.local,VM:DOM-004 (ClID 1639517767)

DEBUG: Original Size: 113253.78 MB

DEBUG: Pre-LComp: 0.00 MB

DEBUG: Post-LComp: 0.00 MB

Debug: Reduction: N/A:1

DEBUG:

DEBUG: srv-vmware-vc.xxxxx.local,VM:APP-6601868096 (ClID 1639013868)

DEBUG: Original Size: 9912578.45 MB

DEBUG: Pre-LComp: 0.00 MB

DEBUG: Post-LComp: 0.00 MB

Debug: Reduction: N/A:1

DEBUG:

DEBUG: srv-vmware-vc.xxxx.local,VM:APP-6601868096 (ClID 1639029634)

DEBUG: Original Size: 9912578.45 MB

DEBUG: Pre-LComp: 0.00 MB

DEBUG: Post-LComp: 0.00 MB

Debug: Reduction: N/A:1

DEBUG:

The debug output is showing that at least those saveset details are yielding zero in the pre-comp and post-comp fields. This was something I encountered for very small sets of data when working on the updated version of the script. If it’s happening for all your saveset entries, you may wish to talk to support to find out why. Run “mminfo -S” for one of the backups and look for the “ss data domain dedup statistics” entry.

If the statistics have been correctly populated, you should see an entry like this:

*ss data domain dedup statistics: “v1:1639821611:21483559540:6902578:6697733”

The fields are separated by colons, and going from left to right they are:

– v1

– The clone ID

– The logical/original saveset size (NetWorker’s view of the size)

– The Data Domain pre-comp value

– The Data Domain post-comp value
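To make the layout concrete, here is a minimal Python sketch that splits such a value into the five fields listed above. It is not how the tool itself does it; the `DedupStats` and `parse_dedup_stats` names are invented for this example, and no assumption is made about the units of the sizes.

```python
from typing import NamedTuple


class DedupStats(NamedTuple):
    version: str        # "v1" in the example above
    clone_id: int
    original_size: int  # NetWorker's view of the saveset size
    pre_comp: int       # Data Domain pre-comp value
    post_comp: int      # Data Domain post-comp value


def parse_dedup_stats(value: str) -> DedupStats:
    """Split a colon-separated dedup statistics value into named fields."""
    # Drop surrounding whitespace and straight or curly double quotes.
    fields = value.strip().strip('"“”').split(":")
    if len(fields) != 5:
        raise ValueError(f"expected 5 colon-separated fields, got {len(fields)}")
    version, *numbers = fields
    clone_id, original_size, pre_comp, post_comp = (int(n) for n in numbers)
    return DedupStats(version, clone_id, original_size, pre_comp, post_comp)


stats = parse_dedup_stats("v1:1639821611:21483559540:6902578:6697733")
print(stats.clone_id, stats.original_size, stats.pre_comp, stats.post_comp)
```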

The tool needs those values to be populated to work, and usually they are (if you’re using the Data Domain as a Boost device); if you’re getting zeros for all your data, it may be worthwhile chatting with support to get to the bottom of why that information isn’t being collected.
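Before calling support, it can help to know how widespread the problem is. The sketch below scans saved “mminfo -S” text for dedup statistics values and counts how many have zero in both the pre-comp and post-comp fields. It assumes the values appear in the v1 form shown above; the function name is hypothetical, and the second sample line is made up purely to illustrate an unpopulated entry.

```python
import re

# Matches values of the form v1:<clone id>:<original>:<pre-comp>:<post-comp>
STATS_PATTERN = re.compile(r"v1:(\d+):(\d+):(\d+):(\d+)")


def summarise_stats(text):
    """Return (total entries found, clone IDs with zero pre- and post-comp)."""
    total = 0
    unpopulated = []
    for match in STATS_PATTERN.finditer(text):
        clone_id, _original, pre_comp, post_comp = (int(g) for g in match.groups())
        total += 1
        if pre_comp == 0 and post_comp == 0:
            unpopulated.append(clone_id)
    return total, unpopulated


# First line is the example from above; the second is an invented zero entry.
sample = '''
*ss data domain dedup statistics: "v1:1639821611:21483559540:6902578:6697733"
*ss data domain dedup statistics: "v1:1639254031:4576112000:0:0"
'''

total, unpopulated = summarise_stats(sample)
print(f"{len(unpopulated)} of {total} entries have no pre-comp/post-comp values")
```

To run it against real data, read your saved output instead of the sample, for instance with `open("mminfo_S.txt").read()`.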

Cheers.