Introduction

It’s that time of the year when pundits usually (badly) predict what’s going to happen next year. It’s an even bigger time for predictions when we roll into a new decade.

I personally think there’s a lot of value in looking at where we’ve come from as a starting point.

2010 Landscape

Entering 2010, we still had NetWorker 7.6, and Avamar 5.0. Avamar 5 introduced things like support for VMware ESX 4.1, support for Gen3 3.3TB Avamar Nodes (this was pre-Data Domain integration), RAIN support for NAT, and a host of other functions.

NetWorker 7.6, which had been introduced in November 2009, gave us support for features such as ATMOS devices, VMware visualisation, better reporting of deduplication (remember, in those versions, deduplication was via an Avamar storage node), and lots of back-end enhancements.

Current Data Domain models at the start of the decade included the Data Domain 120 (released 2008), 660, 690 and 880 (all released 2009). In the second half of 2010 came the Data Domain 140, 610, 630, 860 and 890 appliances. The Data Domain 890, the biggest of the beasts back then, scaled from 32TB to a whopping 384 TB, using 1 or 2TB drives. To reach that capacity using 2TB drives, you’d have had to configure 12 x 30TB shelves – a fully configured DD890 would occupy almost an entire rack.

Onwards and Upwards

2011 saw the release of Avamar 6, which was the first Avamar version to support Data Domain as a back-end storage target for the Avamar system. That was limited to some workloads initially, but immediately businesses saw the potential to scale their deduplicated backup environment significantly higher than a multi-node Avamar system could achieve. Up until that time, Avamar had been single-node or RAIN only. A single-node configuration saw a standalone Avamar server with both control and storage. The RAIN configurations were ‘X+1’ – X data storage servers and 1 utility node. The data storage servers collaboratively shared the load for storing and receiving backups – RAIN was a play on ‘RAID’, but referred to Node redundancy. (Of course, individual nodes used RAID as well – but in a RAIN configuration Avamar could lose a node without compromising backups.)

NetWorker stayed on 7.6 for a little while, getting a host of service pack releases in the interim. Version 8 was introduced in August 2012 (I covered it here). v8 made some fundamental changes to how advanced file type devices were handled, inserting a new management daemon to reduce the amount of work a NetWorker server had to do to communicate with devices, removing the need for the ‘read-only’ shadow device, and allowing multiple concurrent nsrmmd services accessing the AFTD. It also introduced client direct, which allowed clients to write directly to disk-based backup devices without needing a storage node license.

The Gorilla In The Room

2012 also saw the release of the next series of Data Domain systems – the 160, 620 and the 990. Data Domain 990 was – and still is – a powerful workhorse. The 990 supported around 570TB usable storage, or just over 1PB if you configured it with extended retention.

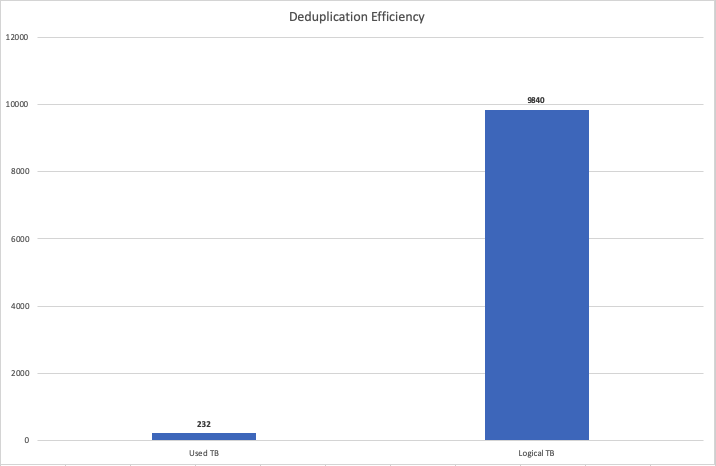

I’ve got customers still using 990s now. How’s that for reliability? A data protection storage platform released in 2012 still powering enterprise data protection workloads at the end of 2019. Some of those systems have been up and running for over 2 years, and even on a ‘smaller’ system I’ve got a customer storing almost 10PB of backups on just 232TB of storage – a deduplication ratio over 40:1. On hardware released almost 8 years ago.

I love those graphs – they emphasise just how much storage you’re saving, and to be able to show those figures for an investment made 7+ years ago is even more impressive.
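The ratio quoted above can be sanity-checked with a few lines of arithmetic. This is only a rough sketch: it assumes decimal units (1PB = 1,000TB) and treats the "almost 10PB" figure as exactly 10PB, so the real number will be slightly lower. The function names are illustrative, not part of any product.

```python
def dedup_ratio(logical_tb: float, physical_tb: float) -> float:
    """Protected (pre-deduplication) data divided by physical storage used."""
    if physical_tb <= 0:
        raise ValueError("physical_tb must be positive")
    return logical_tb / physical_tb


def space_saved_pct(logical_tb: float, physical_tb: float) -> float:
    """Percentage of storage avoided thanks to deduplication."""
    return 100.0 * (1 - physical_tb / logical_tb)


# Assumption: "almost 10PB" rounded up to 10,000TB of logical backups.
ratio = dedup_ratio(10_000, 232)
saved = space_saved_pct(10_000, 232)

print(f"{ratio:.1f}:1")   # 43.1:1
print(f"{saved:.1f}%")    # 97.7%
```

Even allowing for the rounding, that comfortably clears the "over 40:1" mark.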

And the DD160 – my oh my, what a popular little system that was. Ideal for branch offices, the DD160 was a 2RU system that only scaled to around 4TB usable, but it was perfect for replacing tape in those smaller sites where you’d be lucky to find a single IT administrator.

Keep on Keeping On

Full Data Domain integration with Avamar was achieved in the Avamar 7 system series – Avamar 7 was introduced in July 2013. This meant that businesses wanting to scale beyond the 16+1 maximum for an Avamar RAIN configuration could now pivot to single-node Avamar systems attached to Data Domains for all their workloads.

For customers not yet ready to pivot to Avamar writing to Data Domain, 2013 saw the release of the Gen4S Avamar nodes – these scaled to 7.8TB usable per node – a 16+1 grid would deliver almost 120TB usable storage. (This was certainly nothing to be sneezed at given the deduplication levels Avamar achieves.)

To me, one of the biggest changes in Avamar 7 was the elimination of the blackout window. Pre-v7, Avamar had a “blackout” time to perform system maintenance tasks where backups weren’t really supported. Avamar 7 swept that away and allowed you to pretty much use the system 24×7. Avamar also got the Backup and Recovery Manager, a simple dashboard viewer showing system status on login to the GUI, and introduced policy-based replication.

2013 also saw the next Data Domain refresh cycle – introducing 3TB drives and the DD2500, 4200, 4500 and 7200 models.

Data Protection Advisor got a big boost in 2013 with the release of DPA v6, which brought in a more modern interface, tighter integration with VMware, NAS, SAN systems, and enhanced analysis. This also saw the introduction of DPA’s dashboards, designed to provide at-a-glance access to key operational and compliance data for an environment.

NetWorker moved to v8.1 in 2013, getting additional functionality such as parallel save-streams for Linux/Unix filesystem protection and block-based backup for Windows servers. It was also the introduction of VBA, which gave NetWorker high-performance image-based backups without the need for pesky physical proxies. Other updates included virtual synthetic fulls, NAS snapshot management, and the introduction of nsrdr for easier disaster recovery of the NetWorker server.

From Little Things, Big Things Grow

2014 saw the introduction of the Data Domain 2200. This was the successor to the Data Domain 160 and proved equally popular in all those remote/branch sites. The DD2200 could grow to around 22TB but could start as low as 4TB usable without loss of performance or reduction from RAID-6 – it used license locking for the low capacity while still providing sufficient drives to achieve performance and RAID-6 functionality, with a hot-spare. (This also made the first capacity upgrade quite simple if you started at the lowest size.)

2014 saw the release of Avamar 7.1, which covered a bunch of currency updates at the time, the introduction of the vCloud Director Plugin, support for backing up entire Isilon clusters, and a bunch of VMware updates.

It wasn’t just Avamar that got an update – NetWorker got an increase to 8.2, including support for Instant Access, Windows got parallel save-streams, and Linux got block-based backups.

Oh, and I started contracting for EMC. Relatively minor in the grand scheme of things, but for me, it was pretty important.

The Gorilla in The Room Gets A Big Brother

2015 saw the introduction of the Data Domain 9500, which could scale to 720TB usable. Data Domain also got the DS60 shelves – packing up to 60 x 4TB drives in a single 5RU enclosure.

Avamar 7.2 was released in 2015, and that saw functionality such as the proxy deployment manager (taking the guesswork out of deploying VMware backup proxies), REST API, vSphere 6 support, and a bunch of other features.

ProtectPoint was introduced in 2015, too. What’s that, you want a full backup of a 40TB database in under 30 minutes? ProtectPoint had you covered! (Still does, too. It’s now fully folded into PowerProtect, in addition to being accessible in NetWorker.)

NetWorker 9 appeared just at the end of 2015 – and boy, what a release that was! It completely pivoted NetWorker’s configuration engine, making it far easier to align with business service catalogues, and you can see my original post about it here. At the time, I called it “the future of backup”. (It was also one of those rare posts that got linked to from The Register, which is always a fun achievement.)

Oh, and I joined EMC as a full-time employee. (That was effectively the culmination of a 10-year dream. I’ve jokingly referred to this blog as the longest job application letter of all time.)

Oh, and Dell announced its acquisition of EMC in 2015, too (the deal closed in 2016). I know some customers said “what will happen!?”, but if you look at the evolution of data protection systems since the acquisition, it’s clear that it’s a fantastic result for everyone.

Bigger and Better

2016 was the year of big hardware updates. Avamar got Gen4T nodes, which introduced 10Gbit networking into the product line. (The great thing about deduplication, of course, is that you don’t need anywhere near as much networking in order to get the throughput you need.)

We also got updated Data Domain models – the DD6300, 6800, 9300 and 9800 systems. The 9800 was the first Data Domain that could scale to 1PB of usable storage in the active tier. (While competitors might insist they got to 1PB first, it’s worth remembering that none of those competitors could achieve 1PB in a single deduplication storage pool. 1 x 1PB deduplication pool delivers orders of magnitude better storage efficiency, with a lot less management than 60+ x 16 TB deduplication pools!)
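To illustrate why one large pool beats many small ones, here is a toy model of pool-scoped deduplication. It is a sketch only, and not how Data Domain segments or fingerprints data. The chunk labels, the 64-pool layout, the client counts and the `stored_chunks` helper are all made up for the example. The point it shows is that a chunk shared by every client is stored once per pool, so splitting clients across independent pools multiplies the copies.

```python
import hashlib


def fingerprint(chunk: bytes) -> str:
    """Content fingerprint used to detect duplicate chunks."""
    return hashlib.sha256(chunk).hexdigest()


def stored_chunks(backups, pool_count):
    """Chunks physically kept when backups are spread round-robin across
    `pool_count` independent pools, each deduplicating only within itself."""
    pools = [set() for _ in range(pool_count)]
    for i, backup in enumerate(backups):
        pools[i % pool_count].update(fingerprint(c) for c in backup)
    return sum(len(p) for p in pools)


# Hypothetical data set: 64 clients, each with 100 chunks common to all
# clients (think operating system files) plus 10 chunks unique to the client.
shared = [f"os-chunk-{n}".encode() for n in range(100)]
backups = [
    shared + [f"client-{c}-chunk-{n}".encode() for n in range(10)]
    for c in range(64)
]

logical = sum(len(b) for b in backups)   # 7040 chunks sent for backup
single = stored_chunks(backups, 1)       # 740 chunks stored
many = stored_chunks(backups, 64)        # 7040 chunks stored

print(f"one pool: {logical / single:.1f}:1")   # one pool: 9.5:1
print(f"64 pools: {logical / many:.1f}:1")     # 64 pools: 1.0:1
```

The gap in a real environment depends entirely on how much data is common across clients and how they are distributed, so treat the numbers as an illustration of the mechanism rather than a benchmark.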

It was also the year of Avamar 7.3, which introduced the customer-installable Avamar Virtual Edition, the introduction of support for OpenStack, and the new Avamar Data Migration Enabler (ADMe). By that stage, the Avamar Data Protection Extension for VMware’s private cloud functions was going gangbusters.

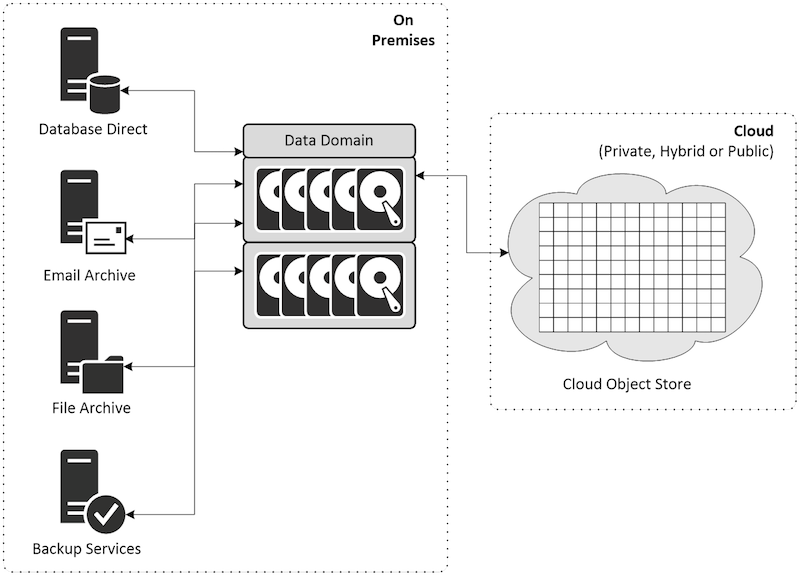

Data Domain didn’t just get hardware updates – while DDOS updates are regularly delivered, 2016 was the year Cloud Tier was first introduced. This allowed systems to start moving long term retention backups off the active tier onto object storage, either on-premises or in the public cloud.

NetWorker pivoted in 2016 with 9.1 to the vProxy system, which was faster, more efficient and more scalable than VBA. (In fact, as we head into 2020, a NetWorker server just using a few vProxies can protect 20,000+ virtual machines in your environment.) We also got Cloud Tier support and a Data Protection Extension for vRealize for NetWorker. (The REST API for NetWorker was introduced in NetWorker 9.0.1.)

Build, or Buy?

2017 saw the introduction of the Integrated Data Protection Appliances. The DP5300, DP5800, DP8300 and DP8800 were all introduced in 2017, and these converged backup platforms let businesses move away from the classic “build” model for data protection. After all, what brings value to the business – building a data protection platform from scratch, or having something wheeled into the datacentre and, a couple of hours later, starting to write production backups?

We got Avamar 7.4 in 2017, which introduced Cloud Tier support, under-the-hood changes that massively boosted (no pun intended) replication capabilities, up to 32 concurrent Instant Access sessions, and all the sorts of currency updates you’d expect for new products.

NetWorker got v9.2, which saw functionality such as SQL application-consistent image-based backup of virtual machines, and support for Data Domain Retention Lock (a great ransomware defence!).

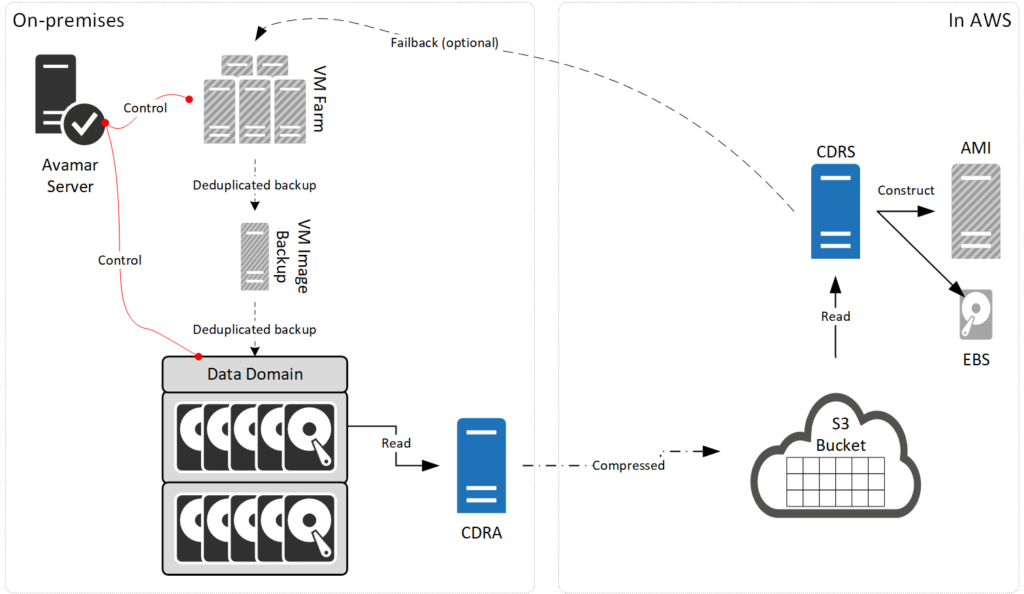

We also got Data Domain Cloud DR in 2017, which lets you use the public cloud as a disaster recovery facility for some of your workloads.

Cloud DR has come a long way since then, but everything starts somewhere!

Oh, and I released my second book on data protection. I may have mentioned it, once or twice?

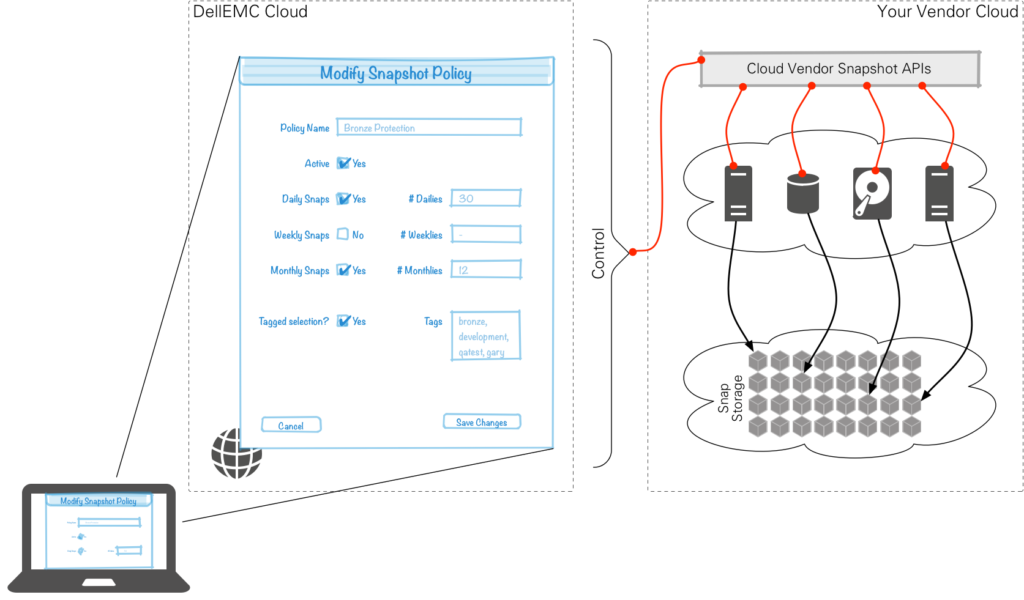

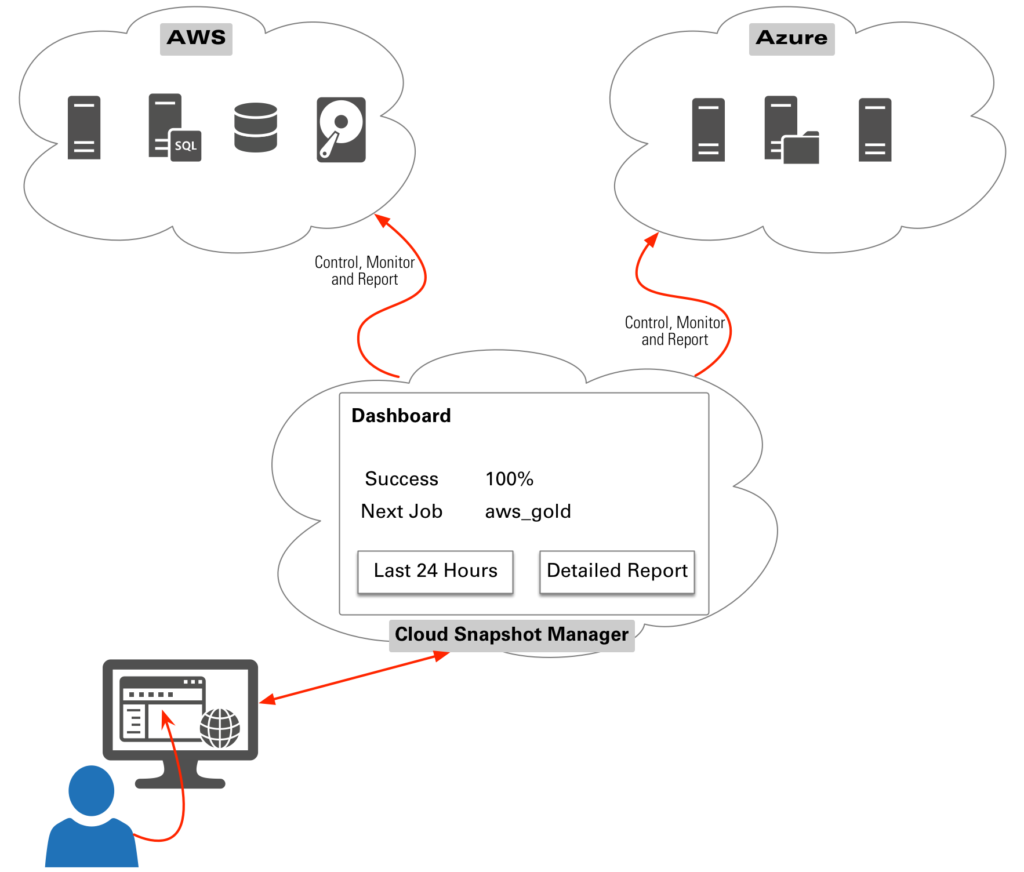

I almost forgot! 2017 was the year Cloud Snapshot Manager was introduced.

A SaaS portal itself with nothing for you to install, Cloud Snapshot Manager (CSM) provided a means of taking control of AWS snapshots, providing enterprise governance over the end-to-end process. CSM has grown in leaps and bounds since then, and more than one customer has achieved enough savings to pay for their CSM subscription in the first month by standardising the management of snapshots across dozens or more AWS accounts from a single console.

Super Backup in a Box that’s Really Quite Bodacious

You didn’t think IDPA was just going to stop at converged appliances, did you? Well, this is a retrospective, so you already know the answer, of course. 2018 saw the introduction of the IDPA 4400, which could scale initially from 24TB up to 96TB usable in a single 2RU enclosure, featuring all backup services, storage, cloud DR and cloud tiering capabilities. And yes, I literally did a blog post about it at the time, calling it Super Backup in a Box that’s Really Quite Bodacious. And it really is quite a beautiful little system. To streamline upgrades, the DP4400 could be shipped with 24TB and then get capacity upgrades via licensing alone, in 12TB increments, all the way up to 96TB. (All the performance but none of the hassle of having to send someone to what is probably a remote site just to install a hard drive or two!)

While the DP4400 was initially released with a starting size of 24TB, it was eventually updated to have a starting size of just 8TB, making it an ideal hyperconverged backup appliance for remote and branch offices.

2018 was a year of big little things – it wasn’t just the DP4400 that was introduced, but also the Data Domain 3300, replacing the Data Domain 2200. The DD3300, supporting up to 32TB usable, packs quite a performance punch and supports Cloud Tier, too!

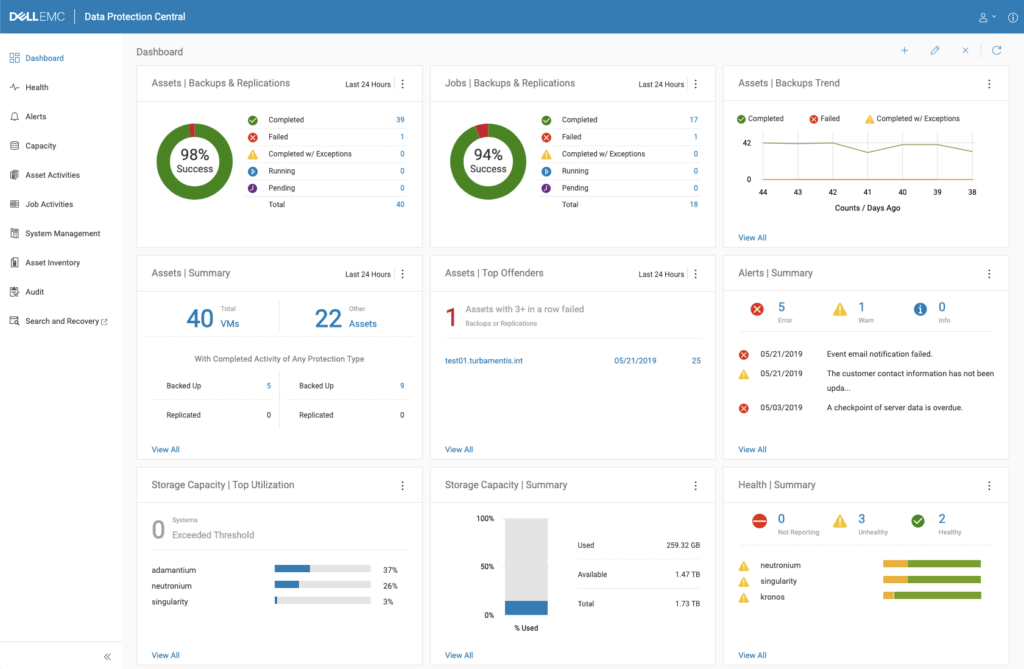

We also saw the consolidated release of Data Protection Software 18.1, rather than releases being staggered between Avamar, NetWorker, Data Protection Advisor and so on. We also saw the release of Data Protection Central, which absolutely streamlines the management and monitoring of data protection environments, especially if you’ve got multiple backup servers (Avamar and/or NetWorker) and Data Domain in play.

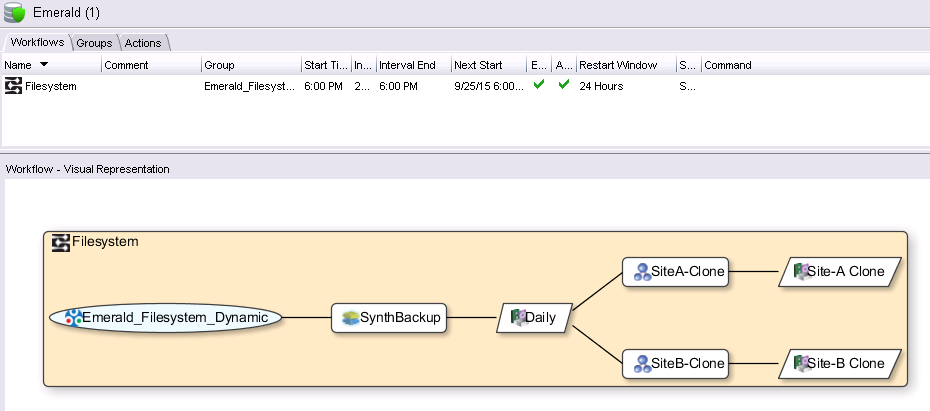

Avamar 18.1 introduced things like a 16TB AVE, support for deployment on KVM, direct recall from Cloud Tier, and easier deployment into the public cloud. Data Protection Advisor 18.1 was the start of the journey to an HTML5 interface for that product. NetWorker 18.1 also got the start of its HTML5 interface, saw the introduction of dynamic policies, and a substantial increase to the REST API. (For each product, that was just the tip of the iceberg.)

In fact, a consolidated release of the Data Protection Software didn’t happen just once in 2018, there was also Data Protection Software 18.2. DPC 18.2 got a significantly revamped dashboard, making it all the more useful. Avamar 18.2 got RBAC introduced into the HTML5 UI, integration of Cloud DR into AUI, and a lot more. NetWorker 18.2 saw vProxy able to absorb and recover VBA backups, policy management in its HTML5 UI, an SNMP v2c MIB, more REST API work again, and yes, a whole bunch more.

Cloud Snapshot Manager gets regularly updated, but 2018 saw some huge updates, including the capability to manage Azure snapshots as well as AWS, all again from a single console.

And We’re Almost up to Date

If anything, we’re seeing the pace pick up now. There’s been Data Protection Software 19.1, and Data Protection Software 19.2 – see my Avamar 19.2 write-up here, and my NetWorker 19.2 write-up here, for example. Data Protection Central and DPA also got updates as part of those roll-outs, too. Avamar is now pretty much 100% HTML5-based from an interface perspective, and NetWorker and DPA are both closing in on that goal, as well. Data Protection Central has always been HTML5-based.

We also saw some amazing new protection storage systems introduced – PowerProtect DDs, the 6900, 9400 and 9900 now include hardware acceleration for compression, allowing the systems to squeeze an extra 30% or so out of the same workloads, without any additional impact to clients of the environment. The 9900 can scale to 1.25PB of usable storage, and using 8TB drives, achieves all this (plus Cloud Tier elements) within a single rack.

Oh, I also released this book. You may have noticed me mentioning it from time to time, too:

But, at the risk of sounding like a TV infomercial, that’s not all. The biggest release of 2019, the one that is going to set the shape for data protection for the next decade, was PowerProtect. You see, PowerProtect is built from the ground up to meet the sorts of data protection scale we’re having to consider into the next decade: how does a single backup administrator look after backups for 5,000 physical systems and 50,000 virtual systems? (Or more.)

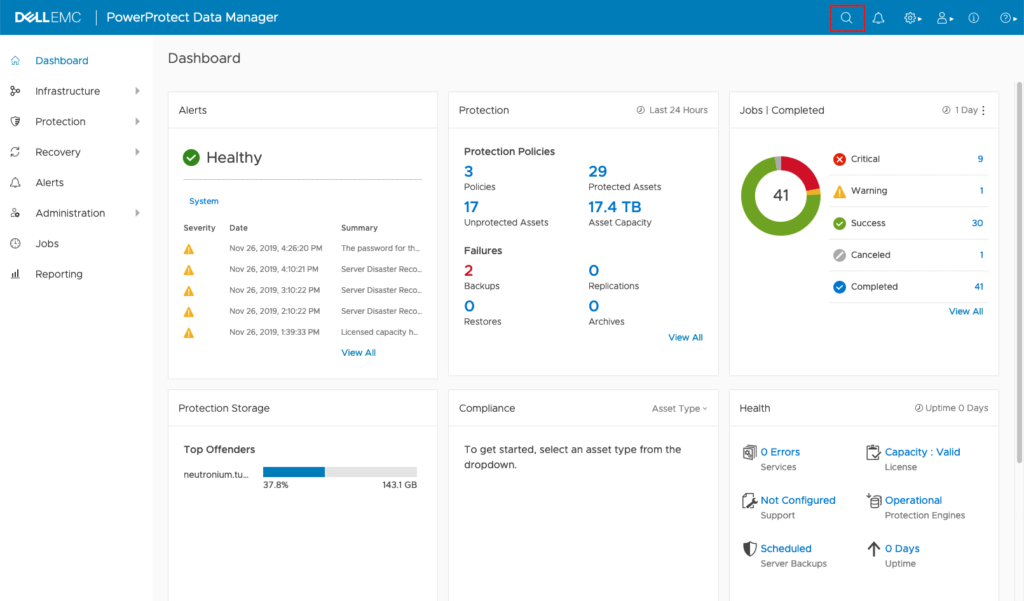

PowerProtect isn’t just a software platform though. You can certainly deploy PowerProtect within your environment and start protecting workloads within a few clicks (honestly, the install is that simple): that’s the PowerProtect Data Manager (PPDM). But there’s also a hardware appliance series, too: the X400. That’s a multi-dimensional backup platform featuring all the hardware and software together, with either hybrid or all-flash storage. We used to talk about data protection products being either monolithic or framework, but PPDM is something new again – modular. The architecture is new and exciting, allowing rapid updates – so much so that it’s not just been one release of PowerProtect this year, or two, but three. PowerProtect is already growing in leaps and bounds – 19.3, just released, has added support for Kubernetes protection, and included automatic indexing of virtual machine backups that runs at ludicrous speed.

Now, I know in Spaceballs, ludicrous speed had some challenges, but that’s not the case with PowerProtect. And it’s not just PowerProtect itself providing ludicrously fast data protection and management of your secondary copies – it’s the development pace, too. That’s three major releases in a single year.

I’m Missing Some Bits

Wrapping up the look back, I know I’ve missed stuff. I didn’t cover the application direct functions introduced, enabling database administrators to write directly to Data Domain without a backup product involved, for instance. I’ve also not covered off RecoverPoint or RecoverPoint for Virtual Machines, and I wouldn’t even attempt to cover all the changes in snapshot and replication technology over the past decade, either! Yet all of those contributed to the stellar contribution Dell EMC has made to data protection within the industry over the past decade.

We’ve also seen a significant pivot in the past decade on licensing. At the start of the decade, it was quite common to still see feature-based licensing in NetWorker installs. Avamar focused on back-end TB licensing (you paid for the amount of storage you were writing to). NetWorker moved to front-end TB capacity licensing, and Avamar was able to as well, once it could access Data Domain. There was also a move away from singular product licensing (e.g., just NetWorker), to suite-based licensing. You could buy 1TB of backup software licensing and use that for Avamar, NetWorker, Application direct, etc., and it would enable access to DPA, as well. There was then the pivot to socket-based licensing – ideal in hyper-virtualised environments, or environments where the socket count is low but there could be considerable infrastructure growth.

What about Me?

It’s been a big decade for me, personally, too. My (now) husband and I moved to Melbourne, Victoria from the Central Coast, NSW in 2011. Anyone who has made an interstate move will know that it’s pretty disruptive, and our move certainly covered disruption fairly well. I had my second and third books published in the decade (next year I’ll be introducing my fourth book to the world). Australia finally caught up with the changing winds of public perception in 2017, declaring marriage legal between same-sex couples, so after 22 years of being together, my husband and I tied the knot at the end of November 2018 – surrounded by lots of friends gathered from over two decades together. This blog itself turned 10 in 2019 as well. Hundreds of articles posted for over a decade, initially just about NetWorker, but as you’ll have noticed in more recent times, significantly expanded now.

But, balancing those out, the last couple of years from this decade have been quite hard in other areas. Pets are a double-edged sword: they bring such joy and fulfilment into our lives, but they also live much shorter lives than us. Both our cats started having significant health problems in 2018 and we now just live each day as it comes, treasuring each moment. My father, too, has been suffering from cancer for the past several years and there is now only one inevitable outcome from that. We’re constantly surrounded by mortality in one way or another, but when it lingers for months, then years, like the sword of Damocles over loved ones, the mental health challenges that come from that wait are profound and very real.

One thing they don’t tell you about working in sales: it’s so easy to measure your personal worth based on the momentary success or failure that is a deal. That’s something I’ve particularly found challenging this year – when you’ve lived your entire life with significant levels of imposter syndrome, that’s not the best combination. If you’re thinking about going into sales, by all means, do it – it’s richly rewarding and highly engaging. But watch for those lows, too.

Any Predictions?

Do I have any predictions about where data protection is going to go in the next 10 years? I’m not going to do significant crystal ball gazing, but I’ll make some notes on the most obvious ones that you need to be keeping in mind:

- As businesses move increasing numbers of workloads to Software as a Service in the public cloud, this is going to create increased pressures to ensure that data is adequately protected. Expect to hear, regularly and loudly, that there is no standard. What’s more, SaaS providers don’t really want you to be able to do full data extracts of your environment, which is something full backup and recovery services provide. Prediction: There will be significant friction between customers, SaaS providers and regulatory bodies over the coming decade on this front.

- We’ll continue to hear less about tape. Lots of people rush to declare tape dead. I’m not going to say that, but it’s clear to me that tape, as an original destination for protection data, is dwindling. What I also expect to see into the coming decade is a reduction in use of tape as a secondary target. “Data is the new oil”, they say. Data is valuable, and having large swathes of data sitting on tape that can’t be accessed unless it’s physically loaded will start eating away at businesses that need to drive greater use out of their biggest asset. Prediction: Tape will continue to move to the extremely niche use-case scenario.

- Ransomware is here to stay. So here’s one I prepared earlier … Prediction: Backup servers will become a threat vector. It will become increasingly imperative to design a robust data protection solution that provides multiple layers of invulnerability from ransomware/malicious insider attacks. Also, expect to see the decline of data protection services just installed on top of a regular operating system: this coming decade will see an increasing focus on the data protection appliance – in part because of the additional protection they provide from ransomware.

- I can’t stress this one enough … the single biggest focus of IT infrastructure (regardless of whether it’s traditional, private, hybrid or in the public cloud) is automation. Prediction: Automation, and the evolution of automation, will be a concept that dominates data protection for the next decade. (I alluded to this in my first post of 2019, and I stand by that.)

I’m not going to go beyond those predictions. As I tell customers when they say they want to buy 5 years’ worth of data protection storage up-front: a year is a long time in data protection. Reliably predicting exactly where you’ll be in 3, 5 or 10 years from today is an exercise in diminishing returns. Instead, it’s important to be adaptable.

And if you want an example of how adaptable a data protection company can be, just look back over everything Dell EMC has achieved in the past decade.

Buckle up, it’s going to be an exciting ride in the 2020s.